Businesses around the world are considering building large language models (LLMs), because these models have changed how natural language processing works. They help machines understand and generate human-like text with remarkable accuracy. That makes them one of the most significant achievements of generative AI, and businesses across all sectors are already putting them to practical use.

Companies already recognize LLMs as tools for improving user experience, automating customer support, and generating content. Whether it is a dedicated large language model development company or a digital startup exploring artificial intelligence, any organization can start LLM development with the right approach.

This article walks through the process of developing large language models in detail, from initial conception to deployment and monitoring, to help you make the right decisions along the way.

Brief Overview of Large Language Models

Large language models are advanced algorithms designed to process and produce human language. Building LLMs involves using architectures such as the Transformer to capture context, meaning, and grammar, drawing on vast volumes of text data. Fundamentally, LLMs produce cohesive and contextually appropriate language by predicting the next word in a sequence.
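To make next-word prediction concrete, here is a minimal sketch that counts which word follows which in a tiny corpus and predicts the most frequent follower. It is a toy bigram model, not a Transformer, and the function names `train_bigram` and `predict_next` are illustrative assumptions rather than part of any library.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(text: str) -> dict:
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Toy corpus, made up for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

A real LLM replaces the frequency table with a neural network that scores every possible next token given the whole preceding context, but the prediction task is the same.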

Key Stats about the Usage of LLMs

- By 2025, the worldwide market for LLMs is expected to be $20 billion.

- More than 70% of companies say they use LLMs in some way for customer service automation.

- Improved automation has helped companies that use LLMs cut operational expenses by 30%.

- Compared to conventional techniques, LLMs can produce marketing content, reports, and articles 50% faster.

- Compared with human reps, 60% of users like engaging with LLM-driven AI chatbots.

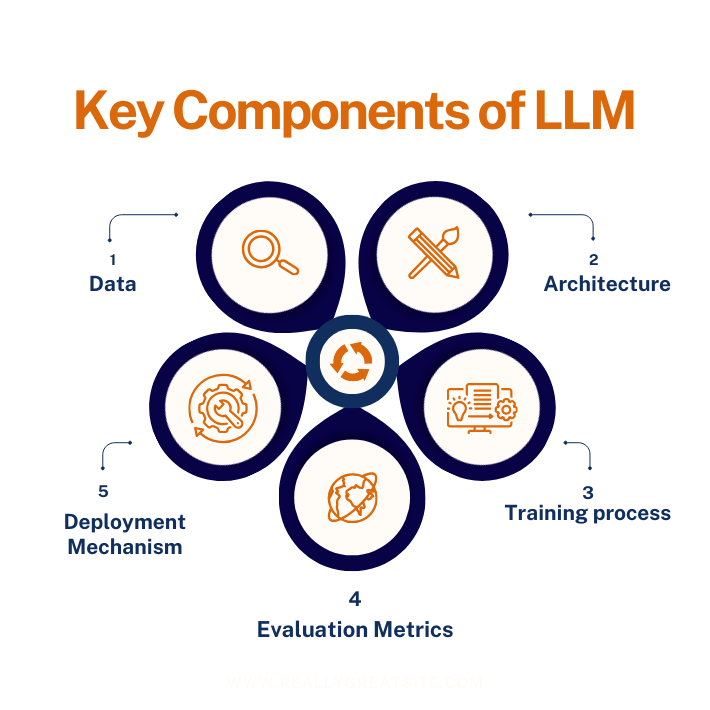

Key Components of Large Language Models

Understanding large language model creation requires an awareness of the fundamental elements supporting their efficacy:

Data

The performance of large language models (LLMs) depends first on the quality and amount of training data. To learn linguistic patterns, subtleties, and contextual links, these models need large datasets. To help the model generalize across different settings, high-quality data should be varied, covering a range of text types, styles, and genres. Preprocessing is also crucial: it cleans the data by removing anomalies and extraneous material. Data is a key factor in LLM development because the richness of the information directly affects the model’s capacity to produce consistent and contextually appropriate text.

Architecture

Most large language models are based on the Transformer architecture, which is known for its efficiency in handling sequences of data. Using self-attention and feed-forward neural networks, the Transformer examines the relationships between words in a sentence, which lets the model capture context and long-range dependencies. Unlike conventional recurrent models, this design processes the tokens of a sequence in parallel, which greatly shortens training time. The architecture is also adaptable, so developers can customize it for specific uses and needs and improve the model’s overall performance.
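The self-attention step described above can be sketched in a few lines of plain Python. This is a simplified illustration under a stated assumption: queries, keys, and values are all the raw input vectors, whereas a real Transformer applies learned projection matrices and several attention heads. The function names are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention over a list of token vectors.

    Assumption: no learned projections, so query = key = value = input.
    """
    dim = len(x[0])
    output = []
    for query in x:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in x
        ]
        weights = softmax(scores)
        # Each output vector is a weighted average of all value vectors.
        output.append(
            [sum(w * value[i] for w, value in zip(weights, x)) for i in range(dim)]
        )
    return output


tokens = [[1.0, 0.0], [0.0, 1.0]]
for row in self_attention(tokens):
    print(row)
```

Because every token's output is computed independently from the same inputs, the loop over queries can run in parallel, which is the property that speeds up training compared with recurrent models.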

Training Process

Developing large language models depends on the training process, which adjusts the model’s weights to reduce prediction errors. Training typically relies on backpropagation: the model computes a loss from its predictions, and the weights are updated in the direction that lowers that loss. Especially with big datasets, training calls for significant computational resources and time. Hyperparameter tuning, such as changing learning rates and batch sizes, is also essential for getting the most out of training. A well-executed training step helps the model learn efficiently and produces a strong and dependable language model.
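The loop of predicting, measuring loss, and updating weights can be shown on a deliberately tiny model. The sketch below fits a single weight `w` in `y = w * x` with gradient descent on mean squared error; it stands in for the idea only, since an LLM has billions of weights and uses a cross-entropy loss. The data and the `train` function are made up for illustration.

```python
def train(xs, ys, learning_rate=0.05, epochs=200):
    """Fit y = w * x by gradient descent on mean squared error."""
    w = 0.0
    losses = []
    n = len(xs)
    for _ in range(epochs):
        preds = [w * x for x in xs]
        # Loss: average squared difference between prediction and target.
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        # Gradient of the loss with respect to w.
        grad = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        # Step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad
        losses.append(loss)
    return w, losses


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2 * x
w, losses = train(xs, ys)
print(f"learned w = {w:.4f}, first loss = {losses[0]:.2f}, last loss = {losses[-1]:.6f}")
```

Changing `learning_rate` shows why hyperparameter tuning matters: a value that is too small learns slowly, while one that is too large can make the loss grow instead of shrink.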

Evaluation Metrics

To confirm that large language models meet their intended goals, you must evaluate their performance. Important evaluation criteria are accuracy, which measures the percentage of correct predictions, and perplexity, which gauges how well the model predicts a sample. Other measures, including F1 score and BLEU score, are often used to assess particular tasks such as classification or translation. Examining these measures on validation datasets helps engineers find strengths and shortcomings in the model. The results guide the required changes and iterations, leading to a more dependable and efficient language model.
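As a rough illustration of two of these metrics, the sketch below computes accuracy from predicted and true labels, and perplexity from the probabilities a model assigned to the correct tokens. Perplexity is the exponential of the average negative log-probability, so lower is better. The inputs are made-up numbers, not results from a real model.

```python
import math


def accuracy(predictions, targets):
    """Fraction of predictions that exactly match their target."""
    correct = sum(p == t for p, t in zip(predictions, targets))
    return correct / len(targets)


def perplexity(true_token_probs):
    """exp of the average negative log-probability given to the true tokens."""
    avg_neg_log = -sum(math.log(p) for p in true_token_probs) / len(true_token_probs)
    return math.exp(avg_neg_log)


# Illustrative values only.
print(accuracy(["a", "b", "c"], ["a", "b", "d"]))
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

A model that assigns probability 0.25 to every correct token has a perplexity of about 4, meaning it is as uncertain as if it were choosing uniformly among four options.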

Deployment Mechanisms

Serving users in real time depends on efficient deployment procedures. This means integrating the trained model into a production setting where it can reliably handle user queries and interactions. Deployment plans could call for on-premises solutions for more control or cloud services for scalability. Establishing strong Application Programming Interfaces (APIs) also helps the model interact with other applications. Post-deployment monitoring of system performance is vital as well, since it confirms that the model keeps running properly and shows when it needs to be modified to satisfy user needs and expectations.

How to Develop a Large Language Model: Step-by-Step Guide to Building LLMs

Developing large language models involves several key steps, which are outlined below:

Define the Objectives of Your Large Language Model

Establishing clear objectives for your large language model (LLM) is crucial to its success. These objectives should align with the specific needs of your organization or project, such as improving customer service through automated responses or generating creative content like poetry or stories. By articulating these goals, you create a focused blueprint that guides the process of building LLMs, ensuring the model is trained to meet desired outcomes.

This clarity helps in selecting appropriate data, designing the architecture, and evaluating the model’s effectiveness, ultimately leading to a more targeted and efficient development journey.

Collect and Prepare Data

Data collection and preparation are foundational steps in developing a large language model. Start by gathering a diverse and extensive dataset relevant to your objectives, ensuring it encompasses various text types, styles, and contexts. This diversity enhances the model’s ability to generalize across different applications. Once collected, the data must be cleaned and preprocessed to remove noise, such as irrelevant information or formatting issues. Techniques like tokenization, normalization, and deduplication should be applied to ensure the data is structured and suitable for training. A well-prepared dataset significantly influences the model’s performance and accuracy.
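The cleaning techniques named above can be sketched with the Python standard library. This is a simplified illustration: it uses word-level tokenization and exact-match deduplication, whereas production pipelines usually rely on subword tokenizers and near-duplicate detection. The function names and sample documents are assumptions made for the example.

```python
import re
import unicodedata


def normalize(text):
    """Unify Unicode forms, lowercase, and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = text.lower()
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text):
    """Split into words and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def deduplicate(documents):
    """Keep the first occurrence of each document after normalization."""
    seen = set()
    unique = []
    for doc in documents:
        key = normalize(doc)
        if key and key not in seen:  # skip empty documents and repeats
            seen.add(key)
            unique.append(key)
    return unique


raw_docs = ["Hello  World!", "hello world!", "   ", "Another doc."]
cleaned = deduplicate(raw_docs)
print(cleaned)
print([tokenize(doc) for doc in cleaned])
```

Normalizing before deduplicating matters: the first two sample documents differ only in case and spacing, so they are treated as one.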

Design the Model Architecture

Choosing the right model architecture is a critical step in the development of large language models. The Transformer architecture is the most common choice due to its efficiency in handling sequences and understanding context. When designing the architecture, consider factors such as the number of layers, attention heads, and embedding dimensions, tailoring them to fit your specific needs and objectives. Customization may involve adjusting parameters to optimize performance for your particular application. A well-designed architecture enables the model to learn effectively from the data, facilitating better understanding and generation of human-like text.
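One way to reason about these design choices is to estimate how many parameters a configuration implies. The sketch below uses a common rule of thumb: each Transformer layer has about 4·d² attention weights and 8·d² feed-forward weights, assuming a feed-forward layer four times wider than the embedding and ignoring biases and normalization parameters. The `TransformerConfig` class is a hypothetical helper, not part of any framework.

```python
from dataclasses import dataclass


@dataclass
class TransformerConfig:
    vocab_size: int
    num_layers: int
    num_heads: int
    embed_dim: int

    def head_dim(self) -> int:
        """Per-head dimension; the embedding must split evenly across heads."""
        if self.embed_dim % self.num_heads != 0:
            raise ValueError("embed_dim must be divisible by num_heads")
        return self.embed_dim // self.num_heads

    def approx_params(self) -> int:
        """Rough parameter count (assumes 4x feed-forward width, no biases)."""
        embedding = self.vocab_size * self.embed_dim
        attention = 4 * self.embed_dim ** 2      # Q, K, V, and output projections
        feed_forward = 8 * self.embed_dim ** 2   # two linear layers, 4x expansion
        return embedding + self.num_layers * (attention + feed_forward)


# Illustrative configuration; the numbers are assumptions, not a recommendation.
config = TransformerConfig(vocab_size=50000, num_layers=12, num_heads=12, embed_dim=768)
print(config.head_dim())
print(f"{config.approx_params():,} parameters (approximate)")
```

Doubling `embed_dim` roughly quadruples the per-layer cost, while adding layers scales it linearly, which is a useful guide when trading model capacity against training budget.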

Train the Model

Training the model is a pivotal phase in the development of large language models. Utilize frameworks like TensorFlow or PyTorch, which provide robust tools for building and training neural networks. During training, the model learns to predict the next word in a sequence by adjusting its weights based on the training data. This process involves selecting appropriate hyperparameters, such as learning rate and batch size, to optimize performance. It’s essential to monitor the loss function and validation metrics throughout training to ensure the model is learning effectively and not overfitting to the training data.
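Monitoring validation metrics to catch overfitting is often implemented as early stopping: training halts once the validation loss has stopped improving for a set number of epochs. The sketch below shows the decision logic on a list of recorded losses. It is framework-independent, and the function name and `patience` parameter are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which to stop, or None if training should continue.

    Stops after `patience` consecutive epochs without a new best validation loss.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Made-up validation losses: improving at first, then getting worse.
history = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9]
print(early_stopping_epoch(history))
```

In the sample history the validation loss bottoms out at epoch 2 and then rises, a typical sign that the model has started to overfit the training data.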

Evaluate and Improve the Model

Once training is complete, evaluating the model’s performance is essential to ensure it meets the established objectives. Use predefined metrics, such as accuracy, perplexity, and F1 score, to assess how well the model performs on validation datasets. This evaluation process allows you to identify strengths and weaknesses, guiding necessary adjustments. Iteration is key; based on evaluation results, revisit the model architecture, training data, and hyperparameters to refine the model. Continuous improvement ensures the model evolves to meet user expectations and adapts to changing requirements in real-world applications.
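For classification-style tasks, the F1 score mentioned above combines precision and recall into a single number. Here is a minimal sketch for one positive label; the labels and predictions are made up for illustration.

```python
def f1_score(predictions, targets, positive_label):
    """Harmonic mean of precision and recall for a single positive label."""
    pairs = list(zip(predictions, targets))
    true_pos = sum(p == positive_label and t == positive_label for p, t in pairs)
    false_pos = sum(p == positive_label and t != positive_label for p, t in pairs)
    false_neg = sum(p != positive_label and t == positive_label for p, t in pairs)
    if true_pos == 0:
        return 0.0  # no correct positive predictions, so F1 is zero
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


# Illustrative labels only.
preds = ["spam", "spam", "ham", "ham"]
truth = ["spam", "ham", "spam", "ham"]
print(f1_score(preds, truth, positive_label="spam"))
```

Tracking the same metric before and after each change to the data, architecture, or hyperparameters makes it clear whether an iteration actually improved the model.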

Model Deployment

After reaching satisfactory performance, the next stage is model deployment. This requires integrating the trained model into a production environment where it can respond to real-time user requests. Deployment tactics may vary depending on the application, such as employing cloud services or on-premises solutions. It’s crucial to set up robust APIs that allow seamless interaction with the model. Additionally, ensure that the deployment infrastructure is scalable enough to manage variable loads. A successful deployment lets the model deliver its capabilities reliably and provide value to users in practical applications.
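A robust API starts with validating requests before they reach the model. The sketch below shows that layer as a plain function that takes a JSON request body and returns a status code and a JSON response, so it could sit behind any web framework. `fake_model`, the `prompt` and `completion` field names, and the length limit are all hypothetical placeholders.

```python
import json


def fake_model(prompt):
    """Hypothetical stand-in for a trained model's text generation call."""
    return "Echo: " + prompt


def handle_request(body, model=fake_model, max_prompt_chars=2000):
    """Validate a JSON request body and return (status_code, response_json)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "body must be valid JSON"})

    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, json.dumps({"error": "'prompt' must be a non-empty string"})
    if len(prompt) > max_prompt_chars:
        return 413, json.dumps({"error": "prompt is too long"})

    return 200, json.dumps({"completion": model(prompt)})


print(handle_request('{"prompt": "Hi"}'))
print(handle_request("not json"))
```

Keeping validation separate from the model call makes it easy to swap the placeholder for a real model, and to run several copies of the handler behind a load balancer when traffic grows.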

Monitor and Maintain the Model

Monitoring and maintaining the model after deployment are critical for its long-term success. Continuously track the model’s performance, gathering feedback and assessing user interactions to detect any drop in accuracy or relevance. The model may need regular updates and retraining to keep up with changing language usage and user expectations. Implementing a feedback loop allows continual adjustments based on real-world data. By prioritizing maintenance, you ensure that the large language model stays effective, relevant, and capable of adjusting to new challenges and possibilities in the dynamic field of natural language processing.
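A feedback loop can begin with something as simple as tracking the share of recent responses that users rated as helpful. The sketch below keeps a rolling window of ratings and flags the model for review when the rate falls below a threshold. The `FeedbackMonitor` class, window size, and threshold are illustrative assumptions, not values from a real system.

```python
from collections import deque


class FeedbackMonitor:
    """Track recent helpful/unhelpful ratings in a fixed-size rolling window."""

    def __init__(self, window_size=100, min_positive_rate=0.8):
        self.window = deque(maxlen=window_size)  # oldest ratings drop off automatically
        self.min_positive_rate = min_positive_rate

    def record(self, helpful):
        self.window.append(bool(helpful))

    def positive_rate(self):
        """Share of helpful ratings in the window, or None if it is empty."""
        if not self.window:
            return None
        return sum(self.window) / len(self.window)

    def needs_review(self):
        """True once the window is full and the positive rate is below the threshold."""
        window_full = len(self.window) == self.window.maxlen
        return window_full and self.positive_rate() < self.min_positive_rate


monitor = FeedbackMonitor(window_size=4, min_positive_rate=0.75)
for rating in [True, True, True, True, False, False]:
    monitor.record(rating)
print(monitor.positive_rate(), monitor.needs_review())
```

Waiting until the window is full avoids raising an alert on the first few ratings, and a flagged model becomes a candidate for the updates and retraining described above.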

Future of LLMs

The future of large language models seems promising, with various trends affecting their evolution:

Increased Accessibility

The future of large language models is marked by improved accessibility, driven by developments in user-friendly tools and platforms. As these technologies evolve, they allow a wider range of people and organizations, including those with less technical expertise, to take part in large language model creation. Open-source LLM frameworks, pre-trained models, and thorough documentation empower users to develop and tweak their own models. This democratization of AI not only drives innovation but also supports different uses across numerous industries. As accessibility improves, we can expect a boom in inventive uses of LLMs that address the distinct problems and demands of each industry.

Ethical AI Development

As the focus on ethical AI development grows, companies are prioritizing the creation of responsible large language models (LLMs) that address bias and fairness. The ethical implications of AI technology are increasingly acknowledged, driving developers to build norms and frameworks that limit potential harms. This includes reviewing training data for biases, ensuring diverse representation, and developing transparent standards for model deployment. Organizations are also holding ongoing discussions with stakeholders to understand the societal impact of their innovations. By adhering to ethical standards, companies can earn users’ trust and create LLMs that contribute positively to society.

Integration with Other Technologies

The integration of large language models with other AI technologies is expected to boost their capabilities dramatically. As companies seek to build more sophisticated applications, LLMs will increasingly operate alongside computer vision, speech recognition, and other machine learning systems. This convergence allows for multimodal applications, where text, images, and audio can be processed together to give richer user experiences. For example, LLMs could power chatbots that grasp visual context from photos or video, leading to more interactive and engaging conversations. This integration will encourage innovation and open new paths for LLM applications across numerous disciplines.

Real-time Adaptation

Future large language models (LLMs) will be able to adjust in real time based on user interactions, considerably improving personalization. This dynamic capability will allow LLMs to learn from ongoing conversations, adapting replies to individual preferences and contexts. By employing approaches such as reinforcement learning and continuous feedback loops, these models can improve their performance and relevance over time. Real-time adaptation will help organizations provide more relevant and context-aware interactions, ultimately leading to higher user satisfaction. As large language models become more responsive, they will play a critical role in creating individualized experiences across varied applications.

Collaboration Across Industries

The future of large language models (LLMs) is characterized by collaboration across numerous industries, including healthcare, finance, and education. As enterprises grasp the transformative potential of LLMs, they are increasingly cooperating to apply these technologies to new solutions. For instance, healthcare professionals may partner with AI developers to design models that assist in diagnosis and patient communication, while financial organizations could leverage LLMs for automated report preparation and risk assessment. Such interdisciplinary cooperation will deliver powerful applications that benefit numerous industries, driving gains in efficiency and effectiveness. This joint approach will be vital for harnessing the potential of large language models to tackle complex problems.

Final Take

Hopefully, you now have a clear picture of how to proceed with the development of a large language model. Building LLMs is a demanding task that requires careful planning, precise execution, and ongoing maintenance throughout the process. If you follow the steps above and use the right resources, such as A3Logics, you are well placed to achieve the outcome you have in mind. A3Logics positions itself as a leading LLM development service provider, so you can expect top-quality service. Good luck!