
Artificial intelligence is one of the most promising technologies of today, with the capability to resolve complex issues, augment human abilities, and enhance lives around the world. However, as AI systems become more advanced and more deeply incorporated into society, they pose real risks that could negatively affect jobs, privacy, security, and fairness. From algorithmic bias to threats of job displacement and autonomous harm, AI risk requires attention and prudent action. This blog provides a guide to managing the risks of AI effectively so that its benefits for society can be maximized. We will discuss the major risks, ranging from job disruption to threats to privacy and security.

We will also outline practical strategies like governance, ethics, transparency, and education that can help ensure AI develops in a safe, fair, and responsible manner to truly augment human capabilities rather than replace or harm us.


The rapid growth and adoption of AI technologies

AI technologies are improving at an astonishing pace and spreading rapidly through society. This fast-moving transformation holds great promise but also brings challenges we are unprepared for.

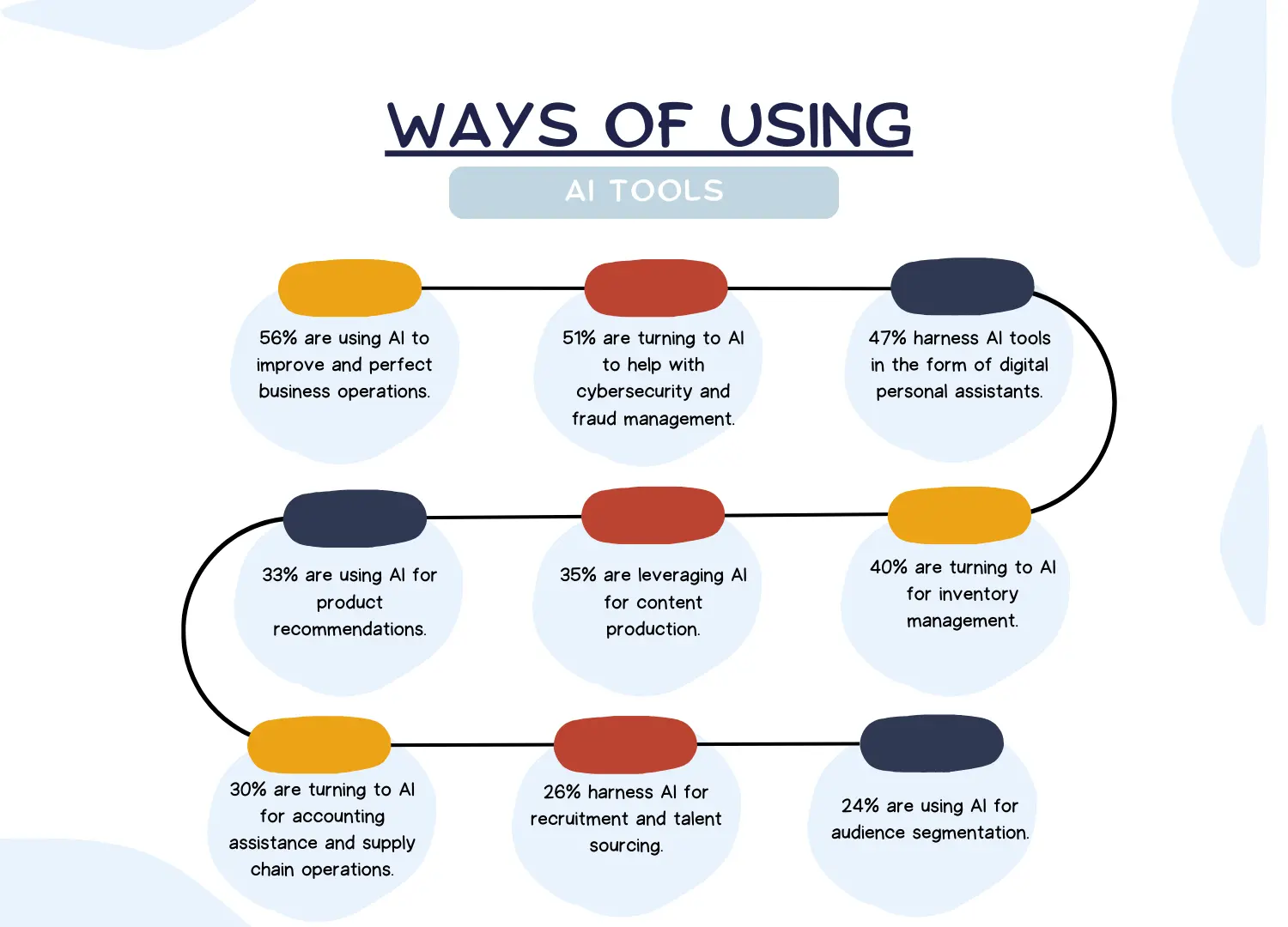

Big tech invests billions to push the limits of the technology, and faster AI chips speed up its work. Many large companies now use AI for routine tasks, and many startups exist only because of AI. More businesses treat AI as normal, and many people use it without knowing.

The workplace is changing as artificial intelligence advances, and a growing number of people are starting to benefit. Still, the full influence of AI on society is not yet known. AI is expected to transform nearly every aspect of human life in ways we do not yet fully understand. Governments, businesses, and individuals appear ready for an AI-centric future, and experts in the field expect development to stay rapid in the near term. This fast growth brings huge opportunities, but also big dangers that need to be handled with wisdom and planning. How we handle the next few years of AI may determine whether it helps or harms humanity for a long time.

Importance of managing AI-related risks

Companies use AI to simplify work, but it can also cause problems that need fixing.

Some AI risks:

- Unfair – AI can treat some groups worse unintentionally. Companies must check if AI works fairly for all.

- Hard to understand – We sometimes don’t understand how AI algorithms reach their results, which makes people distrust AI.

- Job loss – AI can replace human jobs.

- Mistakes – AI can fail and hurt people.

- Hacked – Hackers target AI. They steal data or control AI.

- No rules – Few rules govern how best to build and use AI, which leads to confusion.

To use AI well, companies must:

- Find which risks apply to their AI

- Make processes to manage the risks

- Check AI for unfair biases

- Make AI explain its decisions

- Build AI with fairness and ethics in mind

Not managing AI risks can cause:

- Financial loss

- Reputational damage

- Legal trouble

- Loss of trust in AI

So companies must watch for AI risks and fix problems early to benefit from AI safely. Managing risks well is what allows AI to deliver on its promise.

Understanding AI Risks

The innovative technology of artificial intelligence has the power to completely transform a wide range of industries. Like any potent technology, though, it also brings up several significant societal and ethical issues. These include the possibility of social manipulation, algorithmic bias, privacy issues, and employment displacement brought on by automation. The development and responsible application of AI necessitates tackling these issues head-on. Let’s investigate the essential facets of AI ethics and society, where we will thoroughly examine every issue and its ramifications and offer viable solutions to reduce the risks.

Data Privacy and Security Risks

While AI offers many benefits, ensuring data privacy and security is critical to responsibly managing AI risks. AI relies on huge amounts of data to train systems and improve algorithms. But data used by AI faces risks that must be addressed:

- Data breaches – Hackers target AI systems to steal valuable training data containing sensitive information. Companies that collect vast datasets become attractive targets.

- Unauthorized access – Employees or contractors with access to AI training data could misuse or leak sensitive data. Insider threats are difficult to detect.

- Data anomalies – Errors or anomalies in training data can undermine the accuracy of AI systems and produce biased outcomes. Audits are required to catch such issues.

- Regulatory compliance – Many jurisdictions have regulations governing the collection, storage, use, and protection of data used by AI systems. Organizations need to comply to avoid consequences.

To manage data privacy and security risks:

- Audit data automatically – Check for anomalies, signs of tampering, or policy violations.

- Update processes – As AI systems evolve, update data management processes to maintain security.

- Employ AI techniques – Use AI to detect threats, analyze data flows, and flag anomalies.

Data is the “fuel” that powers AI. However, data breaches, unauthorized access, poor data quality, and non-compliance present serious risks. The responsible management of AI necessitates the effective protection of AI training data. It can be done through measures such as access control, encryption, auditing, and AI-assisted monitoring. Neglecting to do so could endanger an organization’s reputation, data assets, and AI systems.
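One way to picture the automated data audit described above is a simple outlier check on a single numeric column. The sketch below is only an illustration, not a complete audit: the function name `flag_anomalies`, the sample `ages` column, and the 3.5 cutoff (a conventional choice for the modified z-score) are all assumptions made for this example.

```python
from statistics import median

def flag_anomalies(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    med = median(values)
    # Median absolute deviation: a spread estimate that outliers barely move.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # No spread to measure against, so nothing is flagged.
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]

# Hypothetical column of customer ages taken from a training set.
ages = [34, 29, 41, 38, 27, 33, 36, 420, 31, 30]
suspicious = flag_anomalies(ages)  # index 7 holds the implausible age 420
print(suspicious)
```

A check like this only surfaces candidates for review. Whether a flagged record is an entry error, a sign of tampering, or a legitimate rare case still needs a human decision.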


Bias and Fairness in AI

Ensuring AI systems are fair and unbiased is essential to responsibly managing related risks. AI systems are only as fair as the data and assumptions that go into building them. And data often reflects human and societal biases.

AI trained on biased data can discriminate against certain groups without intending to. Facial recognition AI, for example, has struggled to accurately identify non-white faces. AI algorithms have inherent design biases based on how engineers define “good” outcomes and “optimize” for them.

To build fair and unbiased AI systems, organizations should:

- Audit datasets for biases related to protected attributes like race, gender, age, and disability. Remove or correct biased data before training AI.

- Evaluate outcomes for fairness across demographic groups to identify disparate impact.

- Refine AI algorithms to optimize for fairness in addition to accuracy.

- Involve diverse stakeholders in the AI design process to surface hidden biases.

- Only deploy AI where enough unbiased data exists to produce fair and accurate results.

- Monitor AI systems in use to detect and correct emerging biases over time.

To manage the risks of AI, organizations must proactively identify and mitigate the bias inherent in datasets, algorithms, and human decision-making. Reducing bias and increasing fairness requires a multi-disciplinary approach that combines technology, data science, ethics, and organizational practices.
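Evaluating outcomes across demographic groups can start with something as small as comparing approval rates. The sketch below is a minimal illustration under stated assumptions: the group labels, the loan-decision data, and the function names are hypothetical, and the 0.8 cutoff is the "four-fifths" rule of thumb sometimes used as a first screen for disparate impact, not a legal test.

```python
from collections import defaultdict

def selection_rates(records):
    """Map each group to its share of favorable outcomes."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += 1 if outcome else 0
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # No group received a favorable outcome.
    return min(rates.values()) / highest

# Hypothetical loan decisions as (group label, approved?) pairs.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)
ratio = disparate_impact_ratio(decisions)
needs_review = ratio < 0.8  # Assumed screening threshold.
print(f"ratio={ratio:.2f}, needs_review={needs_review}")
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a model's outcomes deserve a closer look before and after deployment.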

Explainability and Transparency

Increasing the explainability and transparency of AI systems is critical to managing related risks ethically and responsibly. Many AI systems work as a “black box” where decisions and results are often inscrutable. Lack of visibility into how AI arrives at conclusions creates risks including:

- AI outcomes seem arbitrary and opaque, undermining trust

- Models behave in unexpected ways that are difficult to debug and correct

- Systems potentially discriminate or harm without explanations

To increase AI explainability and transparency:

- Require AI to explain its reasoning and decisions, even if imperfectly

- Provide ways to interrogate models to understand key factors behind results

- Open up “white box” models that expose the inner workings of algorithms

- Monitor AI performance with telemetry to detect anomalies or unfair outcomes

- Audit AI systems regularly to ensure reliability and alignment with ethical goals

- Disclose model limitations and potential risks to stakeholders
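One common way to interrogate a model for the key factors behind its results is permutation importance: shuffle one input feature and measure how much accuracy drops. The sketch below uses only the standard library and a toy stand-in for an opaque model. The `black_box` function, the income and shoe-size features, and the data are all hypothetical and exist only to show the idea.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows the model labels correctly."""
    hits = sum(model(row) == label for row, label in zip(rows, labels))
    return hits / len(rows)

def permutation_importance(model, rows, labels, feature, seed=0):
    """Accuracy lost when one feature's column is shuffled across rows."""
    rng = random.Random(seed)
    column = [row[feature] for row in rows]
    rng.shuffle(column)
    shuffled = [
        row[:feature] + (value,) + row[feature + 1:]
        for row, value in zip(rows, column)
    ]
    return accuracy(model, rows, labels) - accuracy(model, shuffled, labels)

# Hypothetical opaque model: approves when income (feature 0) is at least 50.
def black_box(row):
    return row[0] >= 50

rows = [(income, shoe_size) for income in range(20, 80, 2) for shoe_size in (38, 44)]
labels = [black_box(row) for row in rows]

income_importance = permutation_importance(black_box, rows, labels, feature=0)
shoe_importance = permutation_importance(black_box, rows, labels, feature=1)
print(income_importance, shoe_importance)
```

Here shuffling income hurts accuracy while shuffling shoe size does not, which reveals what the model actually relies on without opening it up. Real models need more care, for example repeated shuffles and held-out data.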

Regulation and Compliance

Complying with emerging laws and regulations is crucial to responsibly managing the risks of AI technology. While AI offers many advantages, governments are increasingly enforcing rules to protect citizens from potential harm. Key rules aim to:

- Ensure AI is used fairly and ethically

- Increase transparency of automated decision-making systems

- Protect the data privacy and security of individuals and companies

- Require human oversight of high-risk AI applications

- Mitigate economic dislocation caused by AI and automation

To comply with AI regulations, organizations should:

- Identify applicable laws based on jurisdiction, industry, and AI use cases

- Evaluate AI systems to determine risks and compliance needs

- Obtain legal advice specialized in AI regulations

- Audit AI models for compliance issues such as bias and lack of transparency

- Implement technical and organizational measures aligned with regulations

- Monitor regulatory landscape for AI and adjust strategies as needed

Complying with regulations helps organizations:

- Avoid penalties and sanctions

- Maintain customer, stakeholder, and regulatory trust

- Leverage best practices to manage related risks responsibly

- Mitigate legal liabilities that could result from non-compliant AI

To responsibly manage AI risks, organizations must maintain accountability and confidence in their AI systems and comply with emerging AI laws and regulations. Non-compliance could lead to substantial penalties, diminished trust, and impeded adoption of these transformational technologies.

Human-AI Collaboration

Collaboration between humans and AI can help mitigate risks and unlock responsible development of these technologies. While AI offers many benefits, reliance on AI alone poses risks like lack of explainability, bias, and system failure.

AI enables:

- Speed and scale of processing data

- Ability to detect complex patterns

- Continuous optimization of outputs

Humans provide:

- Ethics and values to guide AI

- Ability to provide context and common sense

- Interpretability of AI outputs

- Flexibility and adaptability

Together, humans and AI can:

- Mitigate risks like AI bias, misuse, and errors that neither could effectively address alone

- Design ethical AI systems through human values embedded from the start

- Catch edge cases and exceptions AI cannot handle independently

- Evolve dynamically in complex, real-world environments

- Build trust through a shared division of labor

Transitioning to a model of human-AI collaboration can help mitigate AI risks. It also ensures ethical and effective deployment and unleashes the full range of benefits these technologies offer. This will necessitate the development of AI that enhances human work rather than merely automates it, while also promoting AI transparency, interpretability, and “human-in-the-loop” systems.
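A “human-in-the-loop” system can be as simple as a rule that sends low-confidence predictions to a person instead of acting on them automatically. The sketch below illustrates that pattern only. The `Prediction` class, the `route` function, and the 0.9 threshold are assumptions made for this example, and a suitable threshold depends on the application and on how well the model's confidence scores are calibrated.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    label: str
    confidence: float  # The model's own score between 0 and 1.

def route(prediction, threshold=0.9):
    """Accept confident predictions; send the rest to a human reviewer."""
    if prediction.confidence >= threshold:
        return "auto"
    return "human_review"

# Hypothetical batch of model outputs.
queue = [
    Prediction("approve", 0.97),
    Prediction("deny", 0.62),
    Prediction("approve", 0.90),
]
routes = [route(p) for p in queue]
print(routes)
```

The design choice here is that the AI handles the volume while people handle the uncertain cases, which is where context and judgment matter most.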


AI Governance and Risk Management

Establishing governance structures and processes is critical to managing AI risks effectively and responsibly. While AI solutions offer benefits, failing to manage their risks can cause serious harm. Effective governance helps organizations deploy AI responsibly and sustainably.

Governance involves:

- Developing policies for ethical and safe AI use

- Defining roles and responsibilities for AI development, oversight, and implementation

- Implementing processes for assessing and mitigating AI risks

- Ensuring compliance with relevant laws and regulations

- Monitoring AI performance to detect and correct issues

- Auditing AI models for accuracy, bias, and explainability

- Obtaining stakeholder input on AI applications and their impacts

- Implementing human oversight and control measures as needed

Effective AI governance:

- Aligns AI deployment with organizational values and ethics

- Identifies and manages legal, financial, reputational, and other risks

- Balances AI innovation, adoption, and growth with responsible use

- Improves transparency, trust, and accountability of AI systems

- Monitors AI’s societal impacts and adjusts governance over time

Strong AI governance involves establishing structures, policies, processes, and controls that enable ethical and responsible AI use. It allows organizations to actively manage AI risks, maximize benefits, and build trust over the long term. AI governance serves as the foundation for an organization’s responsible usage of technologies that will profoundly transform society and our lives.

Cybersecurity and AI risk management

Protecting AI systems from cyber threats is essential for responsible risk management as they become more prevalent.

AI relies on data and networks, making it vulnerable to attacks. Cybersecurity helps:

- Protect AI training data – Encrypt data to prevent hackers from stealing it

- Secure AI models – Use access controls and monitoring for AI models

- Address AI safety issues – Plan for risks of hacked AI systems harming people

- Identify AI threats – Use AI to detect threats targeting AI networks

Cybersecurity practices adapted for AI’s unique needs help organizations manage the risks of hacked or compromised systems. This protects AI, data assets, and people from threats – letting organizations pursue the benefits of AI technologies while minimizing security risks.
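Alongside encryption and access controls, one small building block for detecting a compromised dataset or model file is an integrity tag that is recorded when the file is approved and checked again before use. The sketch below uses HMAC-SHA256 from the Python standard library. It detects tampering but does not encrypt anything, and the key, the dataset contents, and the function names are hypothetical.

```python
import hashlib
import hmac

def sign(data: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the given bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()

def is_untampered(data: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the data against a previously recorded tag."""
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(sign(data, key), expected_tag)

# Hypothetical key and dataset; a real key belongs in a secrets manager.
key = b"example-key-not-for-production"
dataset = b"id,age,label\n1,34,0\n2,29,1\n"
tag = sign(dataset, key)

# Simulate an attacker flipping one training label.
tampered = dataset.replace(b"2,29,1", b"2,29,0")

print(is_untampered(dataset, key, tag))   # original data passes
print(is_untampered(tampered, key, tag))  # modified data fails
```

Because the tag depends on a secret key, someone who can modify the file but does not hold the key cannot produce a matching tag for the altered contents.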

Robustness and Reliability

Ensuring AI systems work consistently and withstand unexpected conditions is critical to managing risks responsibly. Robust and reliable AI:

- Performs accurately in the real world where conditions change

- Handles unknown inputs, edge cases, and abnormal situations

- Operates stably over time with resilience to failures

- Provides dependable results without unpredictable behavior

Achieving robustness and reliability requires identifying limitations, exposing AI to a wide range of situations, and integrating human oversight. This ensures AI systems work as intended and can withstand unexpected conditions, minimizing risks of unpredictable failures and harm.

For developers to responsibly manage AI risks, robustness and reliability engineering are foundational requirements for all impactful AI applications.

Unintended Consequences of avoiding AI risks

While taking steps to manage AI risks is important, avoiding AI altogether can also cause unintended consequences.

Attempting to:

- Ban or severely restrict the use of AI

- Slow the pace of AI innovation and adoption

May have unintended results:

- Reduced economic growth

- Lost opportunities for societal good

- Inequitable access to AI benefits

- Concentration of AI in fewer organizations

- Difficulty managing AI that already exists around the world

- Others gaining competitive advantages with AI

While managing AI risks is critical, completely avoiding AI is not a viable long-term solution. Rather than avoidance, the challenges of responsible AI require ethical principles, governance structures, transparency, international cooperation, and innovative risk mitigation strategies – enabling society to maximize AI’s benefits while minimizing potential harms.

Managing AI Risks in Critical Industries

AI offers benefits for many industries but brings unique risks for critical sectors like healthcare, transportation, and defense.

For healthcare, AI risks include:

- Inaccurate diagnoses harming patients

- Bias against certain groups in treatment decisions

- Lack of explainability eroding trust in AI tools

In transportation, risks include:

- Autonomous vehicle failures causing accidents

- Hacking of self-driving systems

- Job losses for drivers

With defense and security, risks include:

- Lethal autonomous weapons harming civilians

- Bias in AI used to determine threats

- Adversarial AI attacks

Critical industries must:

- Rigorously test AI systems for safety and accuracy

- Ensure AI is transparent and explainable

- Take human factors into account

- Implement multi-layered oversight

- Increase security and resilience to threats

- Work with regulators to develop standards

As AI is deployed in life-critical fields, risks that may be acceptable in other industries become intolerable. This requires strengthening risk management, oversight, governance, and security for AI – with cautious, gradual implementation and expanded testing – to realize benefits while avoiding significant harms in sectors like healthcare, transportation, and national security.

Conclusion

As we continue advancing AI technologies, it is vital that we effectively manage the associated risks. While AI promises many benefits, it also poses real threats that could negatively impact society if left unaddressed. From job displacement and bias to privacy concerns and unpredictable behavior, the risks are serious and require prudent planning and governance.

With careful thought and collaboration among experts, leaders, and citizens, we can develop ethical principles, security measures, and policy frameworks to help shape a responsible trajectory for AI. With foresight and knowledge, we can harness AI’s full potential while minimizing its risks, enabling the technology to augment human abilities and ultimately improve life for all of humanity. Now is the time to come together and make sure that AI becomes a force for good in the world.

Frequently Asked Questions (FAQs)

What is artificial intelligence with examples?

Artificial intelligence (AI) is a technology that mimics human intelligence. Common examples of AI include machines that can see, listen, speak, analyze, and make decisions. AI systems accomplish tasks like:

- Automatic speech recognition – like Siri and Alexa

- Computer vision – like self-driving cars and facial recognition

- Machine learning – where AI systems improve by learning from data

- Natural language processing – like chatbots that can converse

Normal programs are rigid and follow set instructions. But AI systems can:

- Sense their environment and acquire information

- Analyze complex information and patterns

- Adapt their behavior and actions based on what they learn.

AI focuses on creating intelligent machines that work and react like humans. Unlike conventional technology, AI systems are “trainable” through data and experience rather than explicitly programmed.

How can we avoid the risk of AI?

While AI offers many benefits, it also poses risks that must be responsibly managed. To mitigate AI risks, organizations should:

- Understand the potential risks of their specific AI applications. This includes risks like bias, lack of transparency, and safety issues.

- Assess AI systems for risks through audits, testing, and stakeholder input. Check for bias, accuracy, security vulnerabilities, and more.

- Address issues like fairness, explainability, and robustness by designing AI the right way from the start.

- Implement policies, processes, and governance to oversee AI development and use. This includes oversight, compliance, and mitigation measures.

- Foster transparency by making the inner workings and potential limitations of AI understandable. This builds public trust.

Rather than avoiding AI altogether, the key is managing AI risks through a combination of understanding risks, designing ethical AI, implementing controls, fostering transparency, and leveraging human expertise.

What are the risks of artificial intelligence?

Some of the potential risks associated with artificial intelligence technology are:

- Job displacement: As AI automates tasks and roles, it could threaten many jobs unless people are retrained.

- Bias: AI systems are often trained on biased data, which can produce unfair outcomes that discriminate against certain groups.

- Opaque decision-making: The decisions of complex AI systems are often inscrutable, making it hard to understand how they reach their conclusions.

- Loss of control: As AI systems become more autonomous, there are concerns that we may lose the ability to fully control them.

- Privacy threats: The large amounts of data used to power AI could expose people’s private information.

- Autonomous harm: There are scenarios in which autonomous AI systems could cause harm if they act in unexpected ways.

How do you address AI risks?

There are several ways we can address and mitigate the risks of artificial intelligence:

- Governance – Develop regulations, oversight bodies, and ethical principles to govern how AI is developed and used.

- Transparency – Make AI systems more transparent so people understand how decisions are made.

- Testing – Thoroughly test AI systems for risks, biases, and vulnerabilities before deployment.

- Education and Awareness – Educate employees, the general public, and leaders about AI risks and responsibilities.

- Diversity – Increase diversity in AI data sets and among AI developers to reduce unintended biases.

- Limit Autonomy – Limit the autonomy of AI systems and preserve meaningful human oversight.

- Job Training – Provide job training, re-skilling, and social support to assist workers affected by AI.

- Multistakeholder Cooperation – Bring together experts, leaders, and citizens to develop balanced solutions.