With the wave of digitization sweeping through industries, data has become essential to how organizations are shaped. It is the foundation for decision-making and the impetus for innovation. In this sea of data, the data lake is creating quite a stir. Mordor Intelligence projects significant market growth, from $13.74 billion in 2023 to $37.76 billion by 2028, a compound annual growth rate (CAGR) of 22.40% over the five-year forecast period. Setting the numbers aside, let’s look at what data lakes in data engineering are and how best to handle them.

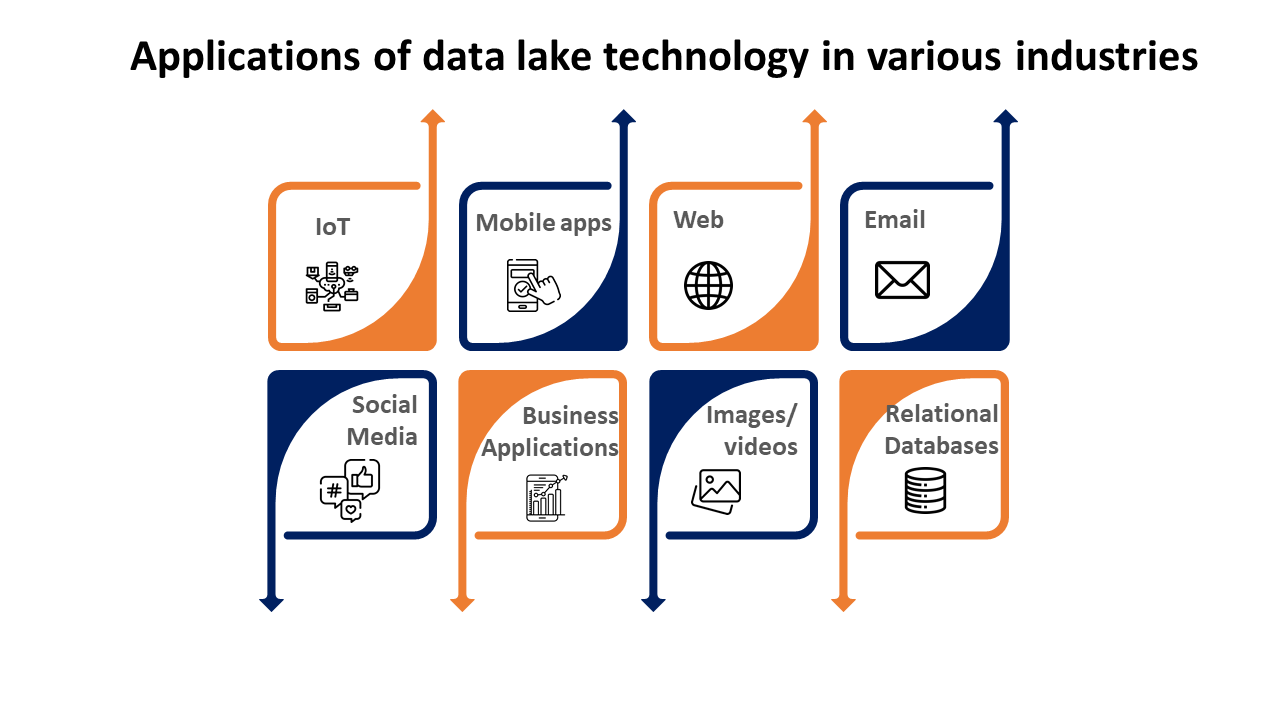

Companies can outperform rivals in a fast-changing market by using data analysis to extract business insights. The large volumes of data that many firms now have access to make this analysis even more valuable. However, much of this data is unstructured and comes from sources such as websites, social media posts, and Internet of Things (IoT) devices, which presents new difficulties. Data lakes let organizations store all of this unstructured data alongside structured data from databases and core business applications, so that it can all be analyzed together. By examining this vast array of data from many sources, organizations can produce insights that improve their operations.

What is a Data Lake?

A data lake is a central repository for data storage. Data lakes hold large amounts of raw, fine-grained data in its original format until it is needed for processing. Structured, semi-structured, and unstructured data are all kept together in a single repository.

A data lake is used when there are no restrictions on file types or fixed storage requirements, and the focus is on storing data in flexible formats for future use. To speed up data retrieval, data lake architectures attach metadata tags and identifiers (IDs) to the stored data.
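The sketch below shows how metadata tags and IDs speed up retrieval. Each object gets a unique ID when it is registered, and a tag index answers lookups without scanning the stored data. The `Catalog` and `CatalogEntry` classes and the example paths are hypothetical. Real data lakes use a catalog service, such as the AWS Glue Data Catalog or a Hive metastore, rather than an in-memory dictionary.

```python
import uuid
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    object_id: str
    path: str                                  # where the raw object lives in storage
    tags: set[str] = field(default_factory=set)


class Catalog:
    """Hypothetical in-memory catalog: ID -> entry, plus a tag -> IDs index."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}
        self._by_tag: dict[str, set[str]] = defaultdict(set)

    def register(self, path: str, tags: set[str]) -> str:
        # Assign a unique ID when the object lands in the lake and index its tags.
        object_id = str(uuid.uuid4())
        self._entries[object_id] = CatalogEntry(object_id, path, set(tags))
        for tag in tags:
            self._by_tag[tag].add(object_id)
        return object_id

    def get(self, object_id: str) -> CatalogEntry:
        return self._entries[object_id]

    def find(self, *tags: str) -> list[str]:
        # Paths of objects carrying every requested tag, found via the index alone.
        if not tags:
            return []
        ids = set.intersection(*(self._by_tag.get(t, set()) for t in tags))
        return sorted(self._entries[i].path for i in ids)


catalog = Catalog()
catalog.register("raw/sensors/2024-01-01.json", {"iot", "raw"})
catalog.register("raw/crm/customers.csv", {"crm", "raw"})
print(catalog.find("raw", "iot"))  # ['raw/sensors/2024-01-01.json']
```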

James Dixon, then Chief Technology Officer of Pentaho, coined the term “data lake” to distinguish it from the more refined, processed data warehouse. Data lakes are becoming increasingly popular in data engineering, particularly with enterprises that want extensive, all-encompassing data storage.

Data lakes store data without filtering it beforehand, and access to the data for analysis tends to be ad hoc. Data is not converted until it is required for analysis. However, to keep the data accessible and useful, data lakes require routine upkeep and some form of governance. A data lake that is not properly maintained can become unusable, at which point it is called a “data swamp.”
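Converting data only when it is needed for analysis is usually called schema-on-read. The sketch below stores raw JSON lines untouched and applies a schema only when a reader parses them. The field names, the `SCHEMA` mapping, and the `read_with_schema` helper are illustrative assumptions, not the API of any particular query engine.

```python
import json
from typing import Any, Callable

# Raw records are stored exactly as they arrived; nothing is validated on write.
RAW_LINES = [
    '{"device": "pump-1", "temp": "71.5", "ts": "2024-01-01T00:00:00"}',
    '{"device": "pump-2", "temp": 68, "extra": "ignored"}',
    'not json at all',
]

# The schema lives with the reader, not with storage: field name -> converter.
SCHEMA: dict[str, Callable[[Any], Any]] = {"device": str, "temp": float}


def read_with_schema(lines, schema):
    """Parse and cast each raw line at read time; count lines that don't fit."""
    rows, rejected = [], 0
    for line in lines:
        try:
            record = json.loads(line)
            rows.append({name: cast(record[name]) for name, cast in schema.items()})
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            rejected += 1
    return rows, rejected


rows, rejected = read_with_schema(RAW_LINES, SCHEMA)
print(rows)      # [{'device': 'pump-1', 'temp': 71.5}, {'device': 'pump-2', 'temp': 68.0}]
print(rejected)  # 1
```

Another consumer could apply a different schema to the same raw lines. That is the flexibility a data lake offers. The trade-off is that malformed records only surface at read time.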

Concept of Data Lakes

Could you imagine Amazon not making use of a data lake? Doing nothing with the massive volumes of data that are being fed into the Amazon servers would be a waste of both money and business intelligence.

An Amazon Web Services (AWS) data lake stores large volumes of data. That data is then processed, analyzed, and used to provide useful business intelligence to Amazon customers. Although data lakes and data warehouses sometimes overlap, there are a few key distinctions to keep in mind.

Data Lake Types

A data lake can be implemented in one of two main ways: on-premises or in the cloud.

Cloud Data Lakes

You access cloud-based data lakes over the internet. They run on hardware and data lake software housed in a provider’s cloud, and most are billed on a pay-per-use subscription basis. Cloud data lakes are easy to scale: as your data grows, you simply add more cloud capacity. The provider takes care of performance, security, reliability, and data backup, so you can concentrate on choosing which data to add to the data lake and how to analyze it.

On-Premise Data Lakes

An on-premises data lake runs data lake software on servers and storage in your company’s own data center. Hardware and software licenses require capital expenditure, and installing and maintaining the data lake requires IT expertise. You are responsible for overseeing security, protecting the data, and ensuring sufficient performance. As the data lake grows, you may have to migrate it to larger systems. On the other hand, an on-premises system can offer higher performance to users located within the company’s facilities.

Data Lake vs Lakehouse vs Data Warehouse

| Feature | Data Lake | Data Warehouse | Lakehouse |
|---|---|---|---|
| Data Type | Structured, semi-structured, and unstructured data | Structured data | Both structured and unstructured data |
| Storage Cost | Low-cost storage | Higher cost due to specialized storage | Low-cost storage with structured access |
| Processing | Requires additional processing for analysis | Optimized for read-heavy operations | Combines low-cost storage with optimized processing |
| Data Schema | Schema-on-read | Schema-on-write | Supports both schema-on-read and schema-on-write |
| Use Cases | Machine learning, data science, exploratory analytics | Business intelligence, reporting, OLAP | Both BI/reporting and machine learning/analytics |
| Data Consistency | May lack consistency without additional governance | High consistency and reliability | Improved consistency with unified data management |
| Data Duplication | Possible due to lack of structure | Minimized due to structured environment | Reduced due to integrated architecture |
| Data Management | Less mature; requires additional governance | Mature data management and governance | Advanced data management capabilities |
| Performance | Can be slower due to unstructured nature | Optimized for performance with structured data | Balances performance with flexibility |
| Scalability | Highly scalable | Scalability can be expensive | Highly scalable and cost-effective |
| Flexibility | High flexibility | Less flexible due to structured nature | Flexible, with added structure where needed |
| Complexity | Can be complex due to unstructured data handling | Lower complexity due to structured data | Simplifies architecture by combining both elements |
| Data Integration | Requires ETL processes for structured queries | Requires ETL for data intake | Minimizes need for ETL; supports ELT |

Architecture of Data Lakes

A data lake architecture can accommodate structured and unstructured data from various sources within the company. Every data lake platform consists of two parts, compute and storage, and each can be hosted in the cloud or on-premises. A data lake design can also combine cloud and on-premises locations.

The amount of data a data lake will need to hold is difficult to estimate. For this reason, data lake designs offer far greater scalability than traditional storage systems can achieve, reaching into the exabyte range. To keep data accessible in the future, metadata should be applied to data as it is added to the data lake.

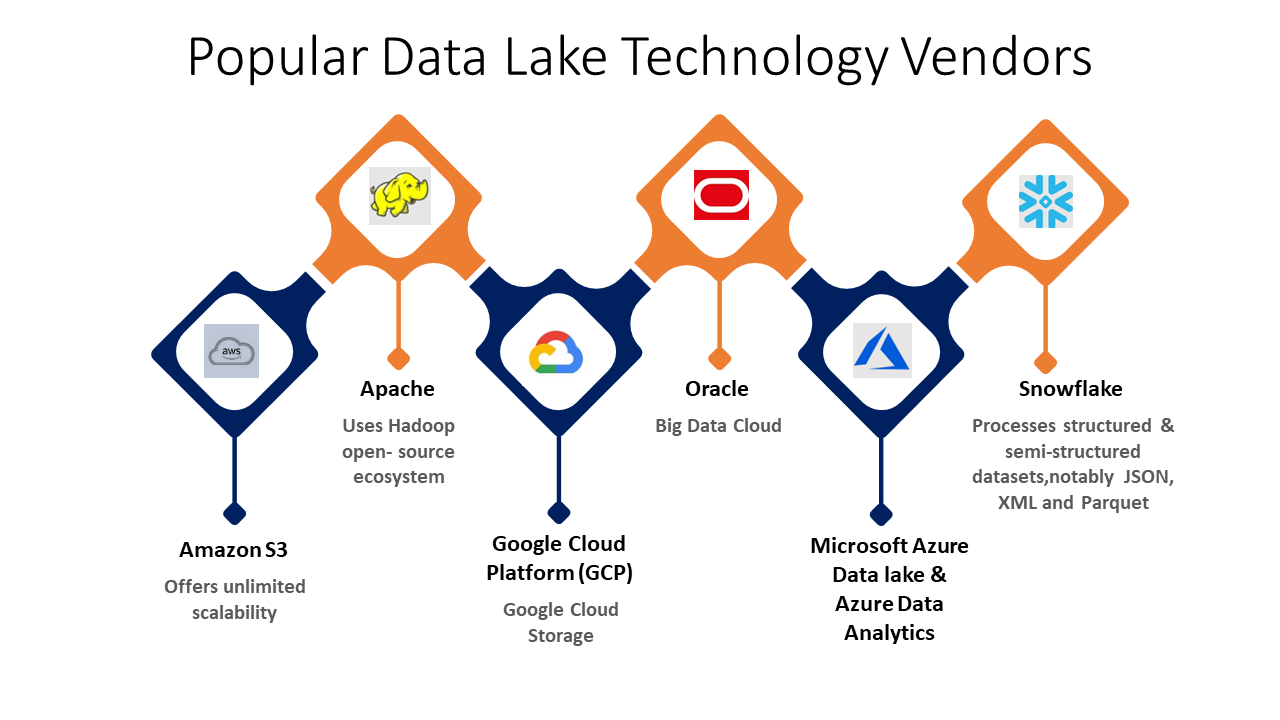

Data lake tools, such as Hadoop and Amazon Simple Storage Service (Amazon S3), differ in architecture and approach. Data lake architecture software organizes the data in a lake and makes it easier to access and use. To keep a data lake functional and stop it from degrading into a data swamp, its architecture should include the following elements:

- Data profiling tools, which make it easier to apply data quality management and to classify data items.

- A data classification taxonomy covering content, data type, user scenarios, and potential user groups.

- File organization based on naming conventions.

- A user access tracking system that raises alerts and records the access point and time.

- Data catalog search functionality.

- Data security measures, such as encryption, access control, and authentication, that guard against unwanted access.

- Training and awareness on using the data lake as a service.
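Here is one way to apply naming conventions to file organization. The sketch below builds object keys and validates them against the pattern `<zone>/<source>/<dataset>/dt=YYYY-MM-DD/<file>`. The zone names and the layout are an assumed convention chosen for illustration. The exact scheme is up to each team.

```python
import re
from datetime import date

ZONES = ("raw", "processed", "curated")  # assumed zone names

# Lowercase source/dataset names, a daily partition, then the file name.
KEY_PATTERN = re.compile(
    rf"^(?P<zone>{'|'.join(ZONES)})/"
    r"(?P<source>[a-z0-9_]+)/"
    r"(?P<dataset>[a-z0-9_]+)/"
    r"dt=(?P<dt>\d{4}-\d{2}-\d{2})/"
    r"(?P<file>[A-Za-z0-9_.-]+)$"
)


def build_key(zone: str, source: str, dataset: str, day: date, filename: str) -> str:
    """Build an object key and reject anything that breaks the convention."""
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone}")
    key = f"{zone}/{source}/{dataset}/dt={day.isoformat()}/{filename}"
    if not KEY_PATTERN.match(key):
        raise ValueError(f"key violates naming convention: {key}")
    return key


def is_valid_key(key: str) -> bool:
    return KEY_PATTERN.match(key) is not None


print(build_key("raw", "crm", "customers", date(2024, 1, 5), "part-0001.json"))
# raw/crm/customers/dt=2024-01-05/part-0001.json
```

Validating keys at write time is cheap. It also keeps later tooling, such as retention jobs or partition pruning, able to rely on the path structure.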

Glossary of Data Lake Concepts

The following list of essential data lake topics will help you gain a deeper grasp of data lake tools.

Data Ingestion

Data ingestion is the process of gathering data from various sources and loading it into the data lake. The process supports all data structures, including unstructured data, as well as both batch and one-time ingestion.

Data Security

An important role of data engineers is to put the data lake’s security procedures in place. This entails overseeing the security of data as it is loaded into, searched, stored in, and accessed from the data lake. Data lakes also require other aspects of data security, such as accounting, authentication, data protection, and access control, to prevent unwanted access.

Data quality

Since decisions are made using information from the data lake, the data must be of high quality. Bad decisions based on low-quality data can have disastrous effects on the organization.

Data Governance

Data governance is the process of overseeing and controlling an organization’s data, including ensuring its availability, security, integrity, and usefulness. This is the role of data engineers.

Data discovery

Before data preparation and analysis, data discovery is crucial. It is the procedure for gathering data from various sources, combining it into a lake, and using tagging strategies to identify trends that improve data interpretability.

Data Analytics

Data analytics is the last step in the process. Data exploration comes first in data engineering and helps you choose the appropriate dataset for the investigation.

Data storage

Data lake storage should be affordable, scalable, able to handle a variety of data types, and quick and convenient to access.

Data auditing

Data auditing tracks changes made to important data elements, which makes it easier to assess risk and ensure compliance. It also records who made each change, how the data was modified, and when.
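This minimal sketch shows the who/what/how/when record that auditing relies on. Every change to a tracked data element appends an immutable entry to a log. The `AuditEvent` fields and the `update_with_audit` and `history` helpers are hypothetical. Real deployments typically rely on the audit logging of their storage or catalog service, for example AWS CloudTrail for S3.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    who: str           # user or service principal that made the change
    element: str       # the data element that changed
    old_value: object  # value before the change (how it was modified)
    new_value: object  # value after the change
    when: datetime     # UTC timestamp of the change


audit_log: list[AuditEvent] = []


def update_with_audit(store: dict, element: str, value: object, user: str) -> None:
    """Record who changed what, how, and when, then apply the change."""
    audit_log.append(
        AuditEvent(user, element, store.get(element), value, datetime.now(timezone.utc))
    )
    store[element] = value


def history(element: str) -> list[AuditEvent]:
    return [e for e in audit_log if e.element == element]


customer = {"email": "a@example.com"}
update_with_audit(customer, "email", "b@example.com", user="etl-job-7")
for event in history("email"):
    print(event.who, event.old_value, "->", event.new_value)
# etl-job-7 a@example.com -> b@example.com
```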

Data lineage

Data lineage describes the path data takes from its source or origin and its movement within the data lake. Tracing lineage from source to destination makes it easier to find and fix errors in a data analytics process.
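Lineage is naturally modeled as a graph in which each dataset points to the datasets it was derived from. The sketch below records those edges. It then walks them upstream to find every source feeding a given output, which is the question you ask when tracking down an error. The dataset names and the `record_derivation` and `upstream` helpers are made up for illustration.

```python
from collections import defaultdict, deque

# derived dataset -> datasets it was built from
parents: dict[str, set[str]] = defaultdict(set)


def record_derivation(output: str, *inputs: str) -> None:
    parents[output].update(inputs)


def upstream(dataset: str) -> list[str]:
    """Breadth-first walk back to every ancestor of `dataset`."""
    seen: set[str] = set()
    queue = deque([dataset])
    while queue:
        current = queue.popleft()
        for parent in parents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return sorted(seen)


record_derivation("processed/orders_clean", "raw/orders")
record_derivation("processed/customers_clean", "raw/crm_customers")
record_derivation(
    "curated/revenue_by_region", "processed/orders_clean", "processed/customers_clean"
)

print(upstream("curated/revenue_by_region"))
# ['processed/customers_clean', 'processed/orders_clean', 'raw/crm_customers', 'raw/orders']
```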

Best Practices for a Data Lake

To provide value to both technical and business teams, a data lake must function as a central store for both structured and unstructured data. It must also let data consumers extract information from relevant sources to support a range of analytical use cases. Ingesting, storing, and maintaining data according to the best practices below helps accomplish this.

1. Data ingestion can be complex

Data lake ingestion is the process of collecting data and loading it into object storage. Because data lakes let you keep semi-structured data in its original format, ingestion is simpler in a data lake than in a data warehouse.

Ingestion still deserves careful thought from the start, though, because data stored improperly can be hard to access later. Effective ingestion also helps solve functional problems such as guaranteeing exactly-once processing of streaming event data and optimizing storage for analytical performance.
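Exactly-once processing of streaming events is often approximated by making ingestion idempotent. Each event carries a unique ID, and an event whose ID has already been written is skipped, so redelivered events do not create duplicates. The sketch below assumes such an `event_id` field exists and keeps the set of seen IDs in memory. The `IdempotentIngestor` class is hypothetical. A real pipeline would persist that state alongside the data, or rely on an engine or table format that provides the guarantee.

```python
import json


class IdempotentIngestor:
    """Append each event to the sink at most once per event_id (hypothetical sketch)."""

    def __init__(self) -> None:
        self.seen_ids: set[str] = set()
        self.sink: list[str] = []  # stands in for files written to object storage

    def ingest(self, raw_event: str) -> bool:
        event = json.loads(raw_event)
        event_id = event["event_id"]
        if event_id in self.seen_ids:
            return False             # duplicate delivery: skip it
        self.sink.append(raw_event)  # store the event in its original format
        self.seen_ids.add(event_id)
        return True


ingestor = IdempotentIngestor()
batch = [
    '{"event_id": "e1", "action": "click"}',
    '{"event_id": "e2", "action": "view"}',
    '{"event_id": "e1", "action": "click"}',  # redelivered by the source
]
results = [ingestor.ingest(e) for e in batch]
print(results, len(ingestor.sink))  # [True, True, False] 2
```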

2. Make several copies of the data

One of the primary motivations for adopting a data lake is the ability to store massive volumes of data for a relatively modest investment. This is possible because data is stored without upfront structuring and compute is separated from storage. Use that storage capacity to keep both raw and processed copies of your data.

A copy of the raw historical data in its original format is useful for error recovery, data lineage tracing, and exploratory analysis, among other things. Data used in analytical workflows, on the other hand, should be stored separately in a form tailored for analytic consumption, so that reads are fast. The raw copy remains available whenever it is needed.
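The sketch below shows this two-copy pattern. The untouched raw record is written under a `raw/` prefix, and a cleaned, analysis-ready version goes under a `processed/` prefix. The in-memory `storage` dict stands in for object storage. The prefixes, the `land` function, and the cleaning rules are illustrative assumptions.

```python
import json

storage: dict[str, str] = {}  # object key -> contents (stand-in for S3, ADLS, HDFS, ...)


def land(record_id: str, raw_payload: str) -> None:
    # 1. Keep the raw copy exactly as received, for replay, lineage, and exploration.
    storage[f"raw/orders/{record_id}.json"] = raw_payload

    # 2. Write a cleaned copy shaped for analytics: typed values, only the needed fields.
    data = json.loads(raw_payload)
    cleaned = {
        "order_id": record_id,
        "amount": round(float(data["amount"]), 2),
        "country": (data.get("country") or "").strip().upper() or None,
    }
    storage[f"processed/orders/{record_id}.json"] = json.dumps(cleaned)


land("o-1", '{"amount": "19.999", "country": " de ", "debug": true}')
print(storage["raw/orders/o-1.json"])
print(storage["processed/orders/o-1.json"])
```

In practice, the processed copy would usually be written in a columnar format such as Parquet rather than JSON. The raw copy lets you rerun the cleaning step if its rules change.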

With expensive, labor-intensive database storage, this kind of duplication would seem heretical. With today’s managed infrastructure (such as an AWS data lake), however, storage is inexpensive and there are no clusters to scale, which makes it feasible.

3. Establish a policy for retention

This may seem to contradict the preceding advice, but wanting to keep part of your data for long periods does not mean you should keep all of it indefinitely. The primary reasons you might want to delete data are:

- Compliance: Regulations such as the GDPR may require you to erase personally identifiable data at the user’s request or after a set period of time.

- Cost: Data lake storage is inexpensive, but it’s not free; your cloud expenses will rise if you move hundreds of terabytes or petabytes of data every day.

Additionally, you’ll need a mechanism to enforce any retention policies you set. To do this, you’ll need to be able to distinguish between data you want to keep for the long run and data you want to delete, as well as pinpoint exactly where each type of data is located in your object storage layer (S3, Azure Blob, HDFS, etc.).
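Enforcing a retention policy largely comes down to mapping each storage location to a rule and then finding objects that are past their limit. The sketch below assumes keys carry a `dt=YYYY-MM-DD` partition. The prefixes and retention periods are made up for illustration. On S3, simple age-based rules are more commonly expressed as lifecycle configurations, while GDPR-style deletion of an individual user’s records needs record-level tooling.

```python
import re
from datetime import date, timedelta

# prefix -> days to keep (None = keep indefinitely). Illustrative values only.
RETENTION_DAYS = {
    "raw/clickstream/": 30,
    "raw/crm/": 365,
    "processed/": None,
}

DT_PATTERN = re.compile(r"dt=(\d{4}-\d{2}-\d{2})")


def expired_keys(keys: list[str], today: date) -> list[str]:
    """Return keys whose partition date is older than their prefix's retention."""
    expired = []
    for key in keys:
        prefixes = [p for p in RETENTION_DAYS if key.startswith(p)]
        if not prefixes:
            continue  # no rule applies: leave the object alone
        # The longest matching prefix wins, so specific rules override general ones.
        days = RETENTION_DAYS[max(prefixes, key=len)]
        match = DT_PATTERN.search(key)
        if days is None or match is None:
            continue
        if date.fromisoformat(match.group(1)) < today - timedelta(days=days):
            expired.append(key)
    return expired


keys = [
    "raw/clickstream/dt=2024-01-01/part-0.json",
    "raw/clickstream/dt=2024-03-01/part-0.json",
    "raw/crm/dt=2024-01-01/customers.csv",
    "processed/orders/dt=2020-01-01/part-0.parquet",
]
print(expired_keys(keys, today=date(2024, 3, 15)))
# ['raw/clickstream/dt=2024-01-01/part-0.json']
```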

4. Understand the data you are importing

While the whole idea behind data lakes is to “store now, analyze later,” going in blind will not work well. You should be able to understand the data as it is being ingested: which fields are sparsely populated, what format each data source uses, and so on. Gaining this visibility on write, rather than trying to infer it on read, can save you a great deal of trouble later. It lets you build ETL pipelines on the most accurate and readily available data.
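One lightweight way to gain that visibility at ingestion time is to profile each incoming batch. You count how often each field is populated and which value types appear. The sketch below does this for JSON lines using only the standard library. The `profile` function, the field names, and the 50% sparsity threshold are arbitrary choices for illustration.

```python
import json
from collections import Counter, defaultdict


def profile(lines: list[str], sparse_threshold: float = 0.5) -> dict:
    """Report per-field fill rate and observed value types for a batch of JSON lines."""
    total = 0
    present: Counter = Counter()
    types: dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        record = json.loads(line)
        total += 1
        for name, value in record.items():
            if value is not None:
                present[name] += 1
            types[name][type(value).__name__] += 1
    report = {}
    for name in sorted(types):
        # Missing keys and explicit nulls both count as "not populated".
        fill_rate = present[name] / total
        report[name] = {
            "fill_rate": round(fill_rate, 2),
            "types": dict(types[name]),
            "sparse": fill_rate < sparse_threshold,
        }
    return report


batch = [
    '{"id": 1, "email": "a@x.com", "phone": null}',
    '{"id": 2, "email": "b@x.com"}',
    '{"id": "3", "email": null}',
]
for name, stats in profile(batch).items():
    print(name, stats)
```

Mixed types, such as `id` arriving as both integers and strings, and mostly empty fields, such as `phone`, show up immediately. You can then decide how the processing pipeline should treat them.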

5. Partition your data

Partitioning your data limits the amount of data that query engines, like Amazon Athena, must scan to deliver answers for a particular query. It therefore lowers query costs and improves performance.

The partition size should depend on the kinds of queries you plan to run. Data is typically partitioned by timestamp, at a granularity of a day, an hour, or a minute. If most of your queries need data from the previous 12 hours, for example, hourly partitioning lets the engine scan less data than daily partitioning would.
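Partitioned data is commonly laid out in Hive-style `key=value` directories, which engines such as Athena can use to skip partitions outside a query’s filter. The sketch below builds hourly and daily partition paths and lists which partitions a 12-hour query window must read. The `events` prefix and the helper names are assumptions, and the pruning logic only imitates what a query engine does.

```python
from datetime import datetime, timedelta


def hourly_partition(ts: datetime, prefix: str = "events") -> str:
    """Hive-style hourly path, e.g. events/dt=2024-05-01/hour=13."""
    return f"{prefix}/dt={ts:%Y-%m-%d}/hour={ts:%H}"


def daily_partition(ts: datetime, prefix: str = "events") -> str:
    """Hive-style daily path, e.g. events/dt=2024-05-01."""
    return f"{prefix}/dt={ts:%Y-%m-%d}"


def partitions_to_scan(start: datetime, end: datetime, partition_fn) -> list[str]:
    """Partitions a query over [start, end) must read; all others can be pruned."""
    paths: list[str] = []
    current = start.replace(minute=0, second=0, microsecond=0)
    while current < end:
        path = partition_fn(current)
        if path not in paths:
            paths.append(path)
        current += timedelta(hours=1)
    return paths


now = datetime(2024, 5, 2, 6, 0)
window_start = now - timedelta(hours=12)  # "the previous 12 hours"

hourly = partitions_to_scan(window_start, now, hourly_partition)
daily = partitions_to_scan(window_start, now, daily_partition)
print(len(hourly), hourly[0], hourly[-1])
print(daily)
```

Here the 12-hour window touches exactly 12 hourly partitions. With daily partitioning, the same window straddles midnight, so the engine must read two full days of data.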

6. Readable File Formats

You should store the data you intend to use for analytics in a columnar format such as Apache Parquet or ORC, which is optimized for reads. These formats are also open source rather than proprietary, so a range of analytic services can read them.

Remember that compressed data must be decompressed before it can be read. So while compressing your data makes financial sense, choose a relatively “weak” (lightweight) compression method to avoid wasting compute power.

7. Combine small files

Data streams, logs, and change data capture typically generate thousands or millions of tiny “event” files every day. You could query these small files directly, but doing so will eventually hurt performance badly. Instead, merge them into larger files through a process known as compaction.
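A compaction job usually starts by planning which small files to merge. The sketch below greedily groups files, in order, into batches whose combined size stays under a target. It then concatenates the newline-delimited contents of one batch. The 128 MB target is a common but arbitrary choice, and the file names and sizes are invented. A production job would also rewrite the output in a columnar format and swap it in atomically.

```python
def plan_compaction(files: list[tuple[str, int]], target_bytes: int) -> list[list[str]]:
    """Greedily group (name, size) pairs into batches of at most target_bytes.

    A single file larger than the target ends up in a batch of its own.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    current_size = 0
    for name, size in files:
        if current and current_size + size > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        batches.append(current)
    return batches


def compact(contents: dict[str, str], batch: list[str]) -> str:
    """Merge newline-delimited files into one, preserving record order."""
    merged = []
    for name in batch:
        text = contents[name]
        merged.append(text if text.endswith("\n") else text + "\n")
    return "".join(merged)


MB = 1024 * 1024
small_files = [(f"events/part-{i:05d}.json", 3 * MB) for i in range(100)]
batches = plan_compaction(small_files, target_bytes=128 * MB)
print(len(batches), [len(b) for b in batches])  # 3 [42, 42, 16]
```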

8. Access control and data governance

CISOs are understandably wary of “dumping” all of their data into an unstructured repository, because it can be challenging to assign permissions to specific rows, columns, or tables the way you can in a database. The many governance solutions available today give you control over who can see which data, which makes this concern easier to handle. In the Amazon cloud, for example, AWS Lake Formation lets you manage access control for data and metadata stored in S3. Tokenization is another way to protect sensitive data and personally identifiable information.
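Tokenization replaces a sensitive value with a surrogate token. Analysts can still join and count on the field without ever seeing the raw value. The sketch below uses deterministic keyed hashing (HMAC-SHA256) from Python’s standard library. Strictly speaking, this is pseudonymization rather than vault-based tokenization, because the original value cannot be recovered from the token. The hard-coded key, the lowercase normalization, and the `tok_` prefix are assumptions for illustration. A real system would fetch the key from a secrets manager.

```python
import hashlib
import hmac

# Assumption: in practice this key comes from a secrets manager, never source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def tokenize(value: str, key: bytes = SECRET_KEY) -> str:
    """Deterministically map a (normalized) sensitive value to an opaque token."""
    digest = hmac.new(key, value.strip().lower().encode("utf-8"), hashlib.sha256)
    return "tok_" + digest.hexdigest()[:32]


def tokenize_record(record: dict, sensitive_fields: set[str]) -> dict:
    """Return a copy of the record with the sensitive fields tokenized."""
    return {
        k: tokenize(str(v)) if k in sensitive_fields and v is not None else v
        for k, v in record.items()
    }


row = {"customer_id": 42, "email": "Jane@Example.com", "country": "DE"}
safe = tokenize_record(row, {"email"})
print(safe["email"].startswith("tok_"), safe["country"])  # True DE
# The same input always yields the same token, so joins and distinct counts still work.
print(tokenize("jane@example.com") == safe["email"])      # True
```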

Technologies and Tools for Data Processing and Integration

1. Tools for ETL

ETL tools should be at the top of your list of data integration tools. These help with data extraction from several sources, format conversion, and data loading into the data lake.

2. Frameworks for Processing Large Data

Big data processing frameworks such as Hadoop and Spark handle large-scale data processing. Between them, they support both batch and real-time (streaming) processing, which makes them suitable for a range of workloads.

3. Tools for Data Lake Management

Data lake management tools come next. They offer features such as data governance, data categorization, and security, and by streamlining management they help keep your data lake from turning into a data swamp.

4. Tools for Data Analysis and Query

Tools for querying and analyzing data in a lake include business intelligence tools, data warehousing services, and SQL and NoSQL databases. They help you draw insightful conclusions from the data, which supports better data-driven decision-making.

5. Artificial Intelligence and Machine Learning Tools

Finally, machine learning and artificial intelligence models can reveal insights and patterns hidden in the data. With these data engineering tools, you can improve decision-making, spur innovation, and make more accurate forecasts.

Data Lakes Use Cases

Any business that uses a lot of data can benefit from a data lake, regardless of industry. A few examples:

Manufacturing

Businesses can use data lakes to increase operational efficiency and apply predictive maintenance. By gathering data from equipment sensors, issue reports, and repair records, they can better understand the most frequent causes of failure and forecast when failures will happen. They can then adjust maintenance plans to improve availability and lower repair costs. Businesses also use data lakes to assess the effectiveness of their production processes and to identify areas for cost reduction.

Marketing

Marketers gather information about consumers from a variety of sources, such as social media platforms, email campaigns, display advertising, and third-party market and demographic data. A data lake can collect all of these sources, including real-time feeds from websites and mobile apps, giving marketers a far more comprehensive picture of their clientele. This improves customer segmentation, campaign targeting, and conversion rates. Marketers can also track rapidly evolving consumer preferences and determine which initiatives yield the best return on investment.

Supply chain

It can be challenging to spot trends and identify issues when supplier information is spread across several platforms. A data lake platform can gather information from external sources such as weather forecasts, suppliers, and shippers, as well as from internal ordering and warehouse management systems. As a result, businesses are better able to pinpoint the causes of delays, determine when goods need to be ordered, and anticipate possible bottlenecks.

Benefits of Data Lakes

Applications in data science and advanced analytics benefit greatly from data lakes as a foundation. Businesses may manage their operations more effectively and monitor market trends and opportunities with the help of data lakes.

Flexibility in Storing and Processing:

Data lakes can store raw structured, semi-structured, and unstructured data in one place, which many other data storage platforms cannot. Companies that use data lakes to manage all types of data, regardless of size or type, can save a great deal of time and increase productivity.

Cost-Efficiency and Scalability:

Data lakes enable businesses to gather vast amounts of data from rich media, websites, mobile apps, social media, email, and Internet of Things sensors at a lower cost than warehouses. Furthermore, as data is produced on a minute-by-minute basis, data analytics companies stand to gain a great deal from including a highly scalable data storage platform such as a data lake.

Improving the Consistency and Quality of Data:

Bringing data together in a single, organized repository with data lake tools improves its organizational structure. This in turn makes it easier to achieve a higher degree of data consistency and quality.

Enhanced Insights and Analytics:

Predictive modeling and machine learning are two examples of more sophisticated and intricate data analytics made possible by data lakes, which store granular, native-form data.

Major Challenges to Efficient Data Lake Management

Data Quality

One of the major data lake challenges is preserving data quality. “Dirty data,” or low-quality data, leads to inaccurate analysis, which can in turn lead to poorly informed business decisions. Maintaining high-quality data is therefore essential wherever data lakes are used.

Data Governance That Works

It can also be difficult to implement efficient data governance within a data lake. The volume, speed, and diversity of data entering the lake demand a strong governance framework. Without one, problems with data security, integrity, and usability can arise and impede efficient data management.

Privacy and Data Security

Ensuring compliance with numerous data protection regulations while safeguarding the large amounts of sensitive data housed in a data lake is a significant challenge. Strict security protocols are therefore unavoidable.

Overseeing the Storage and Recovery of Data

Organizing the storage and retrieval of data is another data lake challenge. With a large volume of data in a data lake, it can be hard to ensure efficient storage and quick retrieval. This directly affects how quickly data can be analyzed and insights produced.

Recognizing the Context of the Data

Finally, it can be intimidating to comprehend the context of the unprocessed data kept in a data lake. Inaccurate conclusions and actions could result from misinterpreting the data in the absence of the proper context.

Conclusion

Data lakes are an affordable, adaptable storage option for businesses and organizations that rely on data. Creating a data lake is a key step in the data architecture process. Top AI companies in the USA use data lakes as platforms for data science applications, such as big data analysis, that require enormous volumes of data to achieve their objectives.

A data lake is a great tool for a variety of analytics techniques, especially data mining, machine learning, and predictive modeling. Implementing a data lake is valuable for any company that handles large amounts of native, raw data. For example, a data lake can help you process and analyze data of various types and sizes, including preparing it for querying with Amazon Athena, to support better business decisions.

FAQs

What is the use of data lake storage?

A data lake stores, processes, and secures large volumes of data in structured, semi-structured, or unstructured form. It places no restrictions on file size and can store and analyze any kind of data in its original format.

What is the data lake storage limit?

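It depends on the product. Cloud data lakes built on object storage are designed to scale with your data, but specific services set their own limits. In one XDR (extended detection and response) product’s Data Lake, for example, data can be kept for up to 90 days, and the number of XDR licenses determines the total storage available during that period. Servers and endpoints have separate storage pools, and the endpoint pool has a daily limit of 20 MB per license (1.8 GB per license per 90 days).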

Can you store unstructured data in data lakes?

Large volumes of unstructured big data can be stored in data lakes. They operate according to the schema-on-read tenet, which means they lack a preset schema. Streaming data, web apps, Internet of Things devices, and many more can be the data sources.

How is a data lake made?

Data is collected from several sources and loaded into the data lake in its original format. This approach saves the time you would otherwise spend defining data structures, schemas, and transformations up front, and it scales to any size of data.

Is a data lake safe?

A data lake is only as safe as the security processes around it. Data lake security is the collection of processes and procedures that protect a data lake from cyberattacks. It matters because a data lake gathers information from several sources, some of which may include customer information and sensitive data such as credit card numbers and test results.

Is a data lake a framework?

“Data lake architecture” refers to the framework, or approach, used to create a central repository for storing and managing data in its native format, free of preset schemas. Data lake architecture supports all forms of big data: structured, semi-structured, and unstructured.