
As AI development progresses, ethics and privacy must be prioritized. Unethical or irresponsible AI can harm individuals and society in ways that seriously undermine well-being and trust in technology. With proactive risk management at every stage, AI can transform the world responsibly and sustainably. The stakes are rising quickly: the global AI market is projected to reach $407 billion by 2027, growing at a compound annual growth rate (CAGR) of 36.2%.

Overall, ethics and privacy are what enable AI to empower people rather than exploit them. By keeping them at the core of development, we can realize the promise of advanced technologies while avoiding their potential perils. With a commitment to responsible AI, we can secure its benefits ethically.


What is AI Ethics?

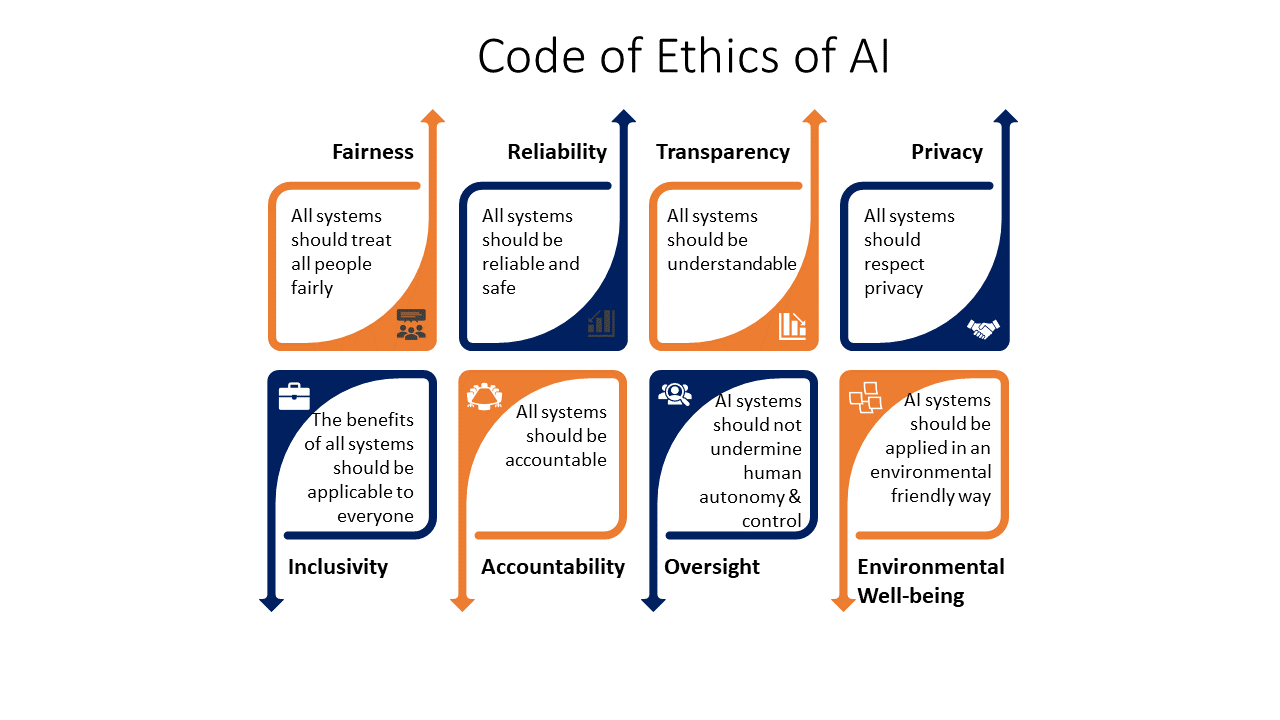

AI ethics is the study of how artificial intelligence systems should be designed and how they should behave. Its aim is to ensure that AI progresses in a way that benefits society and humanity. As organizations become more AI-capable, it is essential to establish guidelines for the ethical implementation of AI. Researchers need to consider AI’s impact on people’s lives and address issues such as bias, privacy, security, and job disruption.

AI systems must be fair and unbiased: they should not discriminate unfairly against individuals or groups, and the data used to train AI models must be representative and inclusive. AI must also respect people’s privacy and use data only with consent. Sensitive information such as health records, financial data, and location data needs proper safeguards.

AI services must also be secure and resilient, robust enough to resist hacking or misuse for malicious purposes such as surveillance, manipulation, or fraud. Finally, the growth of AI should complement human jobs rather than replace them. While automation may displace some jobs, new jobs will also emerge around AI. According to McKinsey, “Existing AI technologies can automate tasks that consume between 60% and 70% of workers’ time today.” Retraining workers and creating new opportunities are therefore essential.

Regulations governing AI must align with ethical principles and societal values. However, too much regulation could slow innovation, so policies should focus on outcomes rather than specific technologies. Individual researchers and engineers are also responsible for building ethical and beneficial AI; they should consider ethics at every step of the design process to maximize AI’s rewards and minimize its harms.

What is AI Privacy?

AI privacy refers to how people’s personal information and data should be protected when AI systems are being developed and used. As AI relies on large amounts of data to analyze patterns and make predictions, privacy issues arise around how that data is collected, used, and shared.

When training AI models, it is essential to use data collected legally and ethically, with proper consent from individuals. Sensitive data such as health records, financial information, location history, and personal messages requires strict controls and limited access. This data should only be used for specific, stated purposes, and it should not be shared with or sold to other parties without permission.

People have a right to know what data about them is being used to inform AI systems. They should be able to request access to their data, understand its use, and correct or delete it if needed.
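The access, correction, and deletion rights described in this paragraph can be sketched in a few lines. This is a minimal illustration only, assuming a hypothetical in-memory store keyed by user ID (`PersonalDataStore` and its methods are invented for this example). A real system would also need identity verification, and deletion would have to reach backups, logs, and derived datasets.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class PersonalDataStore:
    """Hypothetical in-memory store of personal data, keyed by user ID."""

    records: dict[str, dict[str, Any]] = field(default_factory=dict)

    def access(self, user_id: str) -> dict[str, Any]:
        # Right of access: return a copy of everything held about the user.
        return dict(self.records.get(user_id, {}))

    def correct(self, user_id: str, field_name: str, value: Any) -> None:
        # Right to correction: update a field that is already held.
        if field_name not in self.records.get(user_id, {}):
            raise KeyError(f"no field {field_name!r} stored for user {user_id!r}")
        self.records[user_id][field_name] = value

    def delete(self, user_id: str) -> bool:
        # Right to deletion: drop the user's record; True if anything was removed.
        return self.records.pop(user_id, None) is not None


if __name__ == "__main__":
    store = PersonalDataStore({"u1": {"email": "a@example.com", "city": "Paris"}})
    store.correct("u1", "city", "Lyon")
    print(store.access("u1"))  # {'email': 'a@example.com', 'city': 'Lyon'}
    print(store.delete("u1"))  # True
    print(store.access("u1"))  # {}
```

Returning a copy from `access` is a deliberate choice: people can see what is held about them without callers receiving a live reference they could modify.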

As AI systems are deployed, privacy concerns remain about data aggregation, inference, and monitoring. For example, AI that analyzes location data or shopping habits to target ads could reveal personal attributes or behaviors that people never chose to disclose. Safeguards are needed to limit how AI uses and shares personal information.
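One way to measure the reidentification risk this paragraph describes is k-anonymity: a dataset is k-anonymous if every combination of quasi-identifiers (attributes such as ZIP code or age band that are not names but can still single someone out) is shared by at least k records. The sketch below assumes records are plain Python dictionaries; the field names and sample rows are hypothetical.

```python
from collections import Counter


def smallest_group_size(rows: list[dict], quasi_identifiers: list[str]) -> int:
    """Return k: the size of the smallest group of rows sharing the same
    values for every quasi-identifier (0 for an empty dataset)."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values()) if groups else 0


def is_k_anonymous(rows: list[dict], quasi_identifiers: list[str], k: int) -> bool:
    # Every quasi-identifier combination must be shared by at least k rows.
    return smallest_group_size(rows, quasi_identifiers) >= k


if __name__ == "__main__":
    rows = [
        {"zip": "10001", "age_band": "30-39", "purchase": "books"},
        {"zip": "10001", "age_band": "30-39", "purchase": "vitamins"},
        {"zip": "10002", "age_band": "40-49", "purchase": "prescription drug"},
    ]
    print(smallest_group_size(rows, ["zip", "age_band"]))   # 1
    print(is_k_anonymous(rows, ["zip", "age_band"], k=2))  # False
```

Here the third record is the only one with its ZIP code and age band, so anyone who knows those two facts about that person learns the purchase. k-anonymity is a coarse check rather than a guarantee: if everyone in a group shares the same sensitive value, that value is still disclosed.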

Regulation may be needed to enforce privacy rights, limit data use and sharing, and ensure transparency in AI systems. Laws such as the GDPR in Europe and the CCPA in California aim to give people more control over their data and how it is processed. However, regulation also needs the flexibility to support beneficial AI innovation.

Importance of Ethics and Privacy in AI Development

Ethics and privacy are crucial components of responsible AI. Without them, AI could negatively impact individuals, violate their rights, and damage social trust in technology.

During AI development, ethics should guide critical decisions around how data is collected and used, how algorithms are designed, how systems are deployed, and how people are affected. Researchers must ask hard questions about bias, fairness, inclusiveness, job impact, etc. Policies and guidelines are needed to steer AI progress in a way that maximizes benefits and minimizes harm.

Privacy safeguards must also be built in from the start. Every way an AI system accesses and uses people’s data, from training to inference to recommendation, should be subject to proper consent and oversight. Privacy laws let users control their information and limit unwanted data use or sharing, and AI developers must respect these laws and the rights behind them.

If ethics and privacy are an afterthought, problems may be impossible to remedy once they emerge. Accountability must be designed proactively into AI services and their development process, from initial concept to final deployment. Oversight boards, impact assessments, transparency tools, and audit mechanisms can help achieve this.

Without ethics and privacy, AI also threatens to undermine the essential trust between people and the technologies that increasingly impact their lives. Trust takes time to build and can be quickly destroyed. Keeping ethics and privacy at the forefront helps ensure that AI progresses in an ethical, respectful, and trustworthy manner.

Ethics and privacy should guide every step of the AI life cycle. They help maximize the rewards and minimize the harms of advanced technologies. Upholding them allows AI to serve the good of individuals and society without creating new anxieties or unintended harms.

Risks of Ignoring Ethics and Privacy in AI Development

When ethics and privacy are overlooked in AI progress, there are significant risks to individuals, society, and humanity. Unethical or irresponsible AI could negatively impact lives, compromise rights, damage trust, and even pose existential threats in some scenarios.

- If AI systems are built and deployed without proper safeguards, they could discriminate unfairly against groups.

- They could violate people’s consent and data privacy, spread misinformation, and make critical decisions that significantly disadvantage specific demographics.

- They could undermine justice, inclusion, and equal opportunity.

Lacking oversight and accountability also enables problematic AI uses to be hidden from public view. Surveillance systems could monitor citizens without consent. Manipulative techniques could influence opinions at scale. Autonomous weapons could select and attack targets without meaningful human control. Each of these risks threatens safety, security, and even peace.

When leading AI companies and researchers prioritize profit, power, or progress over ethics, they often fail to consider broader impacts, especially long-term consequences that do not serve their immediate goals. Unchecked ambition to advance AI for its own sake, without ethical principles, could lead to uncontrolled superintelligence or other catastrophic and existential risks that threaten not just society but civilization and humanity itself.

Regulation and guidelines aim to steer AI development in a responsible direction, but they are not sufficient on their own. A deep commitment to ethics and privacy is essential.

Ethical Considerations in AI Development

Here are some key ethical considerations in AI development:

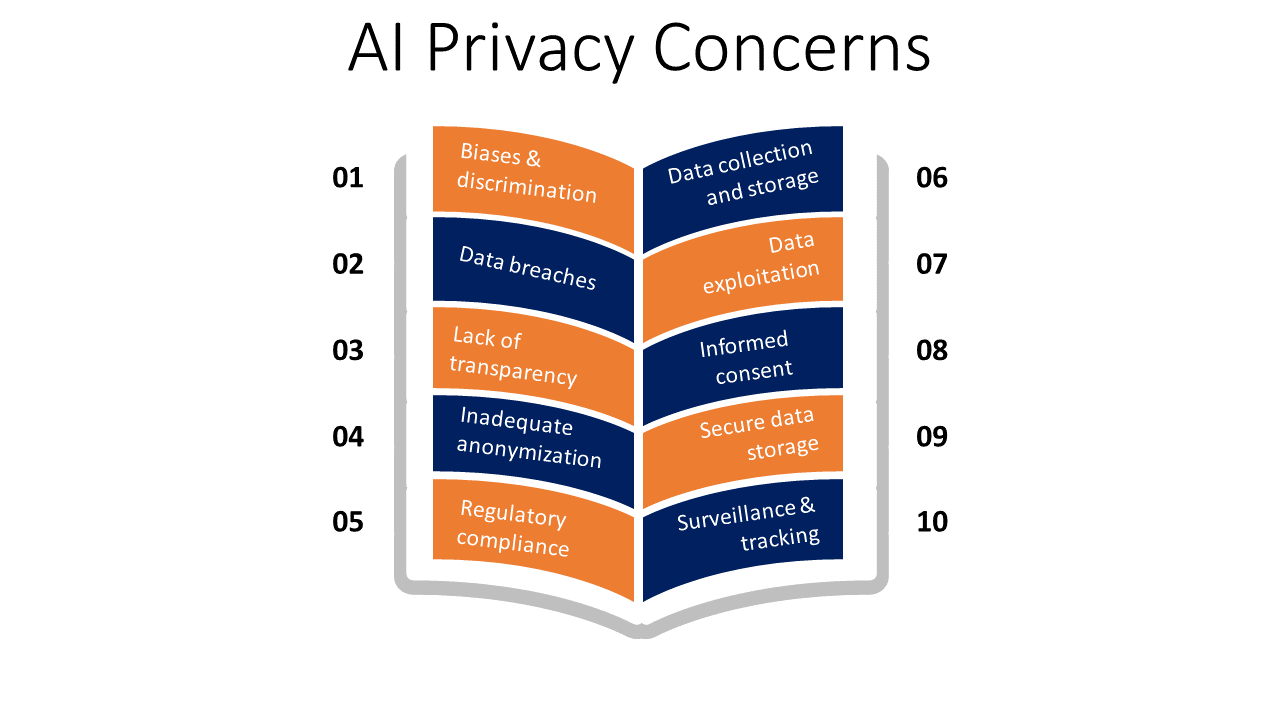

- Bias and unfairness: AI systems can reflect and even exacerbate the biases of their training data and designers.

- Lack of transparency: Complex artificial intelligence services are often opaque and difficult for people to understand, monitor, and oversee. This “black box” problem makes it hard to determine why systems make the decisions or predictions they do.

- Privacy concerns: AI needs large amounts of data to learn to perform human-like tasks. This data collection and use raises significant privacy issues, which can be addressed through consent, anonymization, secure handling procedures, and more.

- Job disruption and automation: As AI progresses, many jobs may change or be eliminated while new jobs emerge. This displacement could disproportionately impact specific populations and lead to workforce instability. Policies and programs are needed to help people transition to new types of work.

- Manipulation and deception: AI could be used to generate synthetic media (deepfakes) for disinformation campaigns or to manipulate people’s emotions, opinions, and behavior at scale. Regulation may be needed to limit malicious uses of generative AI.

- Lack of inclusiveness: Who is involved in developing AI systems and shaping policies will determine whose interests and values are prioritized. More diverse, interdisciplinary teams are better placed to build ethical and beneficial AI, and underrepresented groups need seats at the table.

- Long-term concerns: Advanced AI could pose catastrophic or even existential risks that extend far beyond any individual or generation.
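Bias can be measured, not just discussed. A common starting point is to compare how often a model produces a favorable outcome for each group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest, assuming binary predictions (1 = favorable) and one group label per record; the data is hypothetical. In US employment contexts, a ratio below 0.8 (the “four-fifths rule”) is often treated as a signal of possible adverse impact, though no single metric establishes that a system is fair.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of favorable predictions (1) for each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    favorable: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += pred
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions: list[int], groups: list[str]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(preds, groups))                   # {'A': 0.75, 'B': 0.25}
    print(round(disparate_impact_ratio(preds, groups), 2))  # 0.33
```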

Privacy Considerations in AI Development

Here are some key privacy considerations in AI development:

- Collect and use data legally and ethically: Only use information obtained legally, with proper consent, and for specified purposes. Anonymize or aggregate data when possible, and make data handling policies transparent.

- Limit data access and sharing: Apply privacy-by-design principles so that AI solution providers access only the information necessary for their defined objectives. Secure data properly and limit sharing without consent.

- Provide data access, correction, and deletion rights: Enable people to review the data AI systems hold about them, correct it, or have it deleted on request. This lets users maintain control over their information.

- Conduct data privacy impact assessments: Carefully analyze how AI systems may affect people’s data privacy, security, and consent at every stage of development and deployment. Anticipate issues and build mitigations. Get independent reviews for high-risk systems.

- Protect sensitive data: Apply additional safeguards to sensitive information such as health records, financial data, location history, and personal messages. Limit access and sharing to essential, consented purposes.

- Provide anonymization and encryption: Anonymize data when possible to prevent reidentification while still supporting AI development and analysis. Encrypt data at rest and in transit to protect its confidentiality and integrity. Apply the strongest safeguards to sensitive information.
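Minimization and pseudonymization can start at the point where data enters a training pipeline. The sketch below assumes a hypothetical allow-list of fields and a secret key managed outside the dataset. It drops every field the model does not need and replaces the user ID with a keyed hash (HMAC-SHA256) computed with Python’s standard `hmac` and `hashlib` modules. Note that this is pseudonymization rather than full anonymization: whoever holds the key can still link records, and under the GDPR pseudonymized data generally remains personal data.

```python
import hashlib
import hmac

# Assumption: in practice this key comes from a secrets manager and is never
# stored alongside the data it protects.
PSEUDONYMIZATION_KEY = b"replace-with-a-real-secret"

# Hypothetical allow-list: the only fields the model's stated purpose requires.
FIELDS_NEEDED = {"age_band", "region", "purchase_category"}


def pseudonymize_id(user_id: str, key: bytes = PSEUDONYMIZATION_KEY) -> str:
    # Keyed hash: the same user always maps to the same pseudonym, but without
    # the key an attacker cannot recompute pseudonyms from a list of known IDs,
    # as they could with a plain unkeyed hash.
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_record(raw: dict) -> dict:
    # Data minimization: keep only allow-listed fields, then add the pseudonym.
    record = {k: v for k, v in raw.items() if k in FIELDS_NEEDED}
    record["pid"] = pseudonymize_id(raw["user_id"])
    return record


if __name__ == "__main__":
    raw = {
        "user_id": "alice@example.com",
        "full_name": "Alice Example",
        "age_band": "30-39",
        "region": "EU",
        "purchase_category": "books",
    }
    # Prints a dict without user_id or full_name, plus a 64-character 'pid'.
    print(prepare_training_record(raw))
```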

Guidelines for Ethical AI Development

Here are some guidelines for ethical AI development:

- Conduct impact assessments: Carefully analyze how AI services and solutions may affect people, jobs, society, privacy, fairness, and more. Anticipate potential issues and put mitigations in place.

- Apply an ethical framework: Use principles such as fairness, privacy, inclusiveness, transparency, and accountability to evaluate design choices and policy options. Ethical theories such as utilitarianism or deontology can also inform these judgments.

- Put people first: AI should benefit and empower people, not the other way around. Protect human dignity, rights, well-being, and human control over AI and data.

- Build oversight and accountability: Put mechanisms in place for monitoring AI systems, auditing outcomes, assigning responsibility, and ensuring fixes are applied when issues emerge. Establish independent review boards when needed.

- Enable transparency and explainability: Make AI systems comprehensible enough for people to understand, trust, and oversee them.

- Address bias and unfairness proactively: Audit data, algorithms, and outcomes carefully to avoid unfair discrimination. Promote diversity and inclusive design, and give affected groups representation and a voice in development.

- Obtain informed consent: Be transparent about what data is collected and how AI systems access and use it. Provide choice and control over data, and over access to systems, where possible.
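Oversight and auditing depend on being able to reconstruct what a system decided and who signed off. Below is a minimal sketch of a tamper-evident decision log, assuming decisions are recorded in-process; the `DecisionAuditLog` class and its fields are hypothetical. Each entry stores the SHA-256 hash of the previous one, so altering a past entry breaks verification. Logged inputs are themselves personal data, so they should be minimized or pseudonymized before recording.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class DecisionAuditLog:
    """Hypothetical append-only log of model decisions, chained by SHA-256."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a canonical order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True, default=str)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def record(self, model_version: str, inputs: dict, output, reviewer=None) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": dict(inputs),  # copy; minimize or pseudonymize before logging
            "output": output,
            "reviewer": reviewer,    # person accountable for approving or overriding
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute the chain: editing any entry, or deleting one that is not
        # the most recent, makes verification fail.
        prev = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = DecisionAuditLog()
    log.record("credit-model-v3", {"income_band": "B"}, "approve")
    log.record("credit-model-v3", {"income_band": "D"}, "refer", reviewer="analyst-17")
    print(log.verify())                # True
    log.entries[0]["output"] = "deny"  # simulate tampering
    print(log.verify())                # False
```

A hash chain only detects tampering; in a real deployment the log would also need to be stored somewhere the system being audited cannot rewrite.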

Guidelines for Privacy-Focused AI Development

Here are some guidelines for privacy-focused AI development:

- Obtain informed consent: Be transparent about how data is collected and how AI systems are developed. Give individuals clear choices about sharing their data or withdrawing consent. Explain the implications, and get explicit permission for sensitive data or high-risk AI.

- Enable strong data access controls: Set granular permissions that determine which people and AI services can access data, and for which specified purposes. Monitor access to ensure conformance, and give data owners the means to keep control over their information.

- Consider regulations conscientiously: Follow laws that limit data access, use, and sharing, such as the GDPR, HIPAA, and CCPA, while ensuring fair and beneficial AI progress. Regulations provide guardrails but also need the flexibility to support innovation; a balanced approach is best.

- Take a privacy-by-design approach: Build privacy considerations into AI systems and their development. Apply mitigations proactively at every phase to avoid issues rather than reacting to problems after systems launch. Get an independent review for high-risk AI.

- Establish oversight and accountability: Put mechanisms in place for monitoring how AI accesses and uses data, auditing practices, determining responsibility if misuse occurs, and ensuring issues are properly addressed and corrective action is taken.

- Educate on privacy responsibility: Help people understand how information about them may be used, how they can protect their privacy in an AI-based world, and why proactive data management matters for individual well-being and for society. Spreading this knowledge builds trust.

- Share responsibility across stakeholders: Privacy must be a priority for everyone involved, including researchers, AI software development companies, policymakers, and everyday technology users. No single entity can ensure ethical AI and privacy alone, but collective action and oversight can help achieve responsible progress.
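Granular, purpose-based access control and revocable consent can be combined into a single check. The sketch below is illustrative only: `ConsentRegistry`, `can_access`, the role names, and the purposes are hypothetical, and it assumes consent is the lawful basis for each purpose (laws such as the GDPR also recognize other bases). Access is granted only when the requesting role is scoped to the purpose and the person has consented to that purpose, and every decision is logged so access can be monitored.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Hypothetical record of which purposes each person has consented to."""

    consents: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str) -> None:
        self.consents.setdefault(user_id, set()).add(purpose)

    def withdraw(self, user_id: str, purpose: str) -> None:
        # Withdrawal takes effect immediately for all later access checks.
        self.consents.get(user_id, set()).discard(purpose)

    def allows(self, user_id: str, purpose: str) -> bool:
        return purpose in self.consents.get(user_id, set())


# Hypothetical least-privilege mapping: each role may use data only for these purposes.
ROLE_PURPOSES: dict[str, set[str]] = {
    "recommender-service": {"personalization"},
    "training-pipeline": {"model_training"},
}


def can_access(role: str, user_id: str, purpose: str,
               registry: ConsentRegistry, access_log: list[tuple]) -> bool:
    # Both conditions must hold: the role is scoped to the purpose,
    # and the person has consented to that purpose.
    allowed = (purpose in ROLE_PURPOSES.get(role, set())
               and registry.allows(user_id, purpose))
    access_log.append((role, user_id, purpose, allowed))  # kept for monitoring
    return allowed


if __name__ == "__main__":
    registry = ConsentRegistry()
    registry.grant("u1", "personalization")
    log: list[tuple] = []
    print(can_access("recommender-service", "u1", "personalization", registry, log))  # True
    print(can_access("training-pipeline", "u1", "model_training", registry, log))     # False
    registry.withdraw("u1", "personalization")
    print(can_access("recommender-service", "u1", "personalization", registry, log))  # False
```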

Critical Considerations When Adopting AI Technologies

Several important factors must be weighed when deciding whether and how to adopt AI services and solutions. First, the potential benefits of AI should be evaluated against objectives and key performance indicators. AI could improve accuracy, speed, scale, personalization, and more, but the benefits must justify the costs and risks.

Risks and ethical implications also require thorough analysis. Bias, unfairness, job impact, privacy concerns, and lack of control or explainability are some of the significant risks of AI that could negatively impact people and society. Mitigations must be strong enough to make the risks of any system acceptable. Vulnerable groups particularly require protection.

The role of human judgment also deserves consideration. For some use cases, human oversight and decision-making may be more appropriate and safer than relying on AI, even as the technology progresses. Humans and AI working together in a “human-AI partnership” may achieve the best outcomes in many domains.

Developers must rigorously address privacy, security, and data responsibilities. Policies must ensure that information is collected legally and ethically, in ways that respect individuals’ consent and preferences regarding their data.

The long-term impacts of AI also warrant reflection. How might AI influence society, jobs, privacy, autonomy, and more over years and decades rather than months? Will AI systems become an inextricable and unwanted part of critical infrastructure or social systems? Careful management will be needed to ensure that the changes AI brings are positive and aligned with human values and priorities.

Ensuring Transparency in AI Development

Transparency refers to how understandable, accountable, and explainable AI systems are. Opaque “black box” AI that people cannot understand or oversee threatens to undermine trust, limit accountability, and prevent informed decision-making about adopting and interacting with the technology.

Leading AI companies must build transparency into AI at every stage. It is the responsibility of AI researchers and developers to ensure that AI is transparent and free of bias, and to address any risks that arise.

Transparency starts with openly communicating how AI systems work, including limitations and uncertainties in knowledge and capabilities. Being upfront about the fact that AI cannot replicate human traits like common sense, social/emotional intelligence, or general world knowledge helps set proper expectations about the technology.

Access to information must be provided, including details on data, algorithms, computations, and outcomes. Researchers should aim to make their work reproducible while protecting privacy, intellectual property, and security. Auditability is critical to enabling accountability.

Transparent AI provides explainable insights into why systems make the predictions, decisions, or recommendations they do. This could include highlighting the most influential input features, exposing the logical steps in a system’s reasoning process, or identifying anomalies that affect results. The level of detail should suit the user and the use case, and an experienced AI development company can help strike that balance.
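For the simplest case, a linear scoring model, highlighting the most influential input features can be done exactly: each feature contributes its weight times its value. The sketch below assumes such a model with hypothetical feature names and weights. Complex models need approximation techniques, such as SHAP values or permutation importance, to produce comparable explanations.

```python
def explain_linear_prediction(weights: dict[str, float], bias: float,
                              features: dict[str, float], top_n: int = 3):
    """Score a linear model and return its most influential features.

    For a linear model, each feature's contribution is exactly weight * value,
    so the contributions plus the bias add up to the score.
    """
    contributions = {name: w * features[name] for name, w in weights.items()}
    score = bias + sum(contributions.values())
    # Rank by magnitude so strong negative influences are surfaced too.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked[:top_n]


if __name__ == "__main__":
    weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.1}
    applicant = {"income": 4.0, "debt_ratio": 0.6, "years_employed": 5.0}
    score, top = explain_linear_prediction(weights, -1.0, applicant, top_n=2)
    print(round(score, 2))  # 0.3
    print(top)              # [('income', 2.0), ('debt_ratio', -1.2)]
```

Here `income` pushed the score up the most and `debt_ratio` pulled it down; sorting by absolute value surfaces both directions of influence, which is what a person affected by the decision would want to see.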

A responsibility to disclose risks, limitations, biases, and errors comes with developing any AI technology. If there are opportunities for problems like unfairness, inaccuracy, or manipulation, transparency requires bringing these risks to the attention of those adopting and interacting with the systems. Policies and independent auditing can help achieve this.


Ensuring Accountability in AI Development

Accountability refers to the responsibility that individuals, groups, and organizations bear for decisions, actions, and systems that could significantly affect people, society, and the environment. Developers must be prepared to determine why an AI system failed, take ownership of issues that arise from the technology, and address them appropriately.

Accountability starts with establishing who is responsible for any issues that may arise during AI development. Metrics and key performance indicators (KPIs) should be set to evaluate whether AI is meeting its objectives. Mistake detection, issue tracking, and escalation policies allow developers to address problems before they cause actual harm.
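KPIs and escalation policies are most useful when each threshold has a named owner. The sketch below, with hypothetical metric names, thresholds, and team names, flags every KPI that falls below its agreed floor, or that was not measured at all, and routes it to whoever is accountable.

```python
from dataclasses import dataclass


@dataclass
class KpiThreshold:
    name: str
    minimum: float  # hypothetical floor agreed on before deployment
    owner: str      # person or team accountable for responding


def check_kpis(observed: dict[str, float], thresholds: list[KpiThreshold]) -> list[str]:
    """Return one escalation message per KPI that is missing or below its floor."""
    escalations = []
    for t in thresholds:
        value = observed.get(t.name)
        if value is None:
            # A monitor that stopped reporting should not look like a healthy system.
            escalations.append(f"{t.name}: no measurement; escalate to {t.owner}")
        elif value < t.minimum:
            escalations.append(
                f"{t.name}={value:.2f} is below {t.minimum:.2f}; escalate to {t.owner}"
            )
    return escalations


if __name__ == "__main__":
    thresholds = [
        KpiThreshold("accuracy", 0.90, "ml-oncall"),
        KpiThreshold("disparate_impact_ratio", 0.80, "fairness-review"),
        KpiThreshold("complaint_resolution_rate", 0.95, "support-lead"),
    ]
    observed = {"accuracy": 0.93, "disparate_impact_ratio": 0.72}
    for message in check_kpis(observed, thresholds):
        print(message)
```

Treating a missing measurement as an escalation rather than a pass is a deliberate design choice: silence from a broken monitor should never be mistaken for good news.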

Accountability requires transparency into how AI systems work, the data they analyze, interactions with people, and dependencies in critical systems. “Black box” AI is difficult to hold accountable as the reasons for its behaviors and decisions cannot be adequately determined or explained. Developers must aim for transparency while respecting other priorities like privacy, security, and intellectual property.

If issues emerge, responsibility must be determined so that problems are fixed, harm is compensated, and trust is regained. This includes deciding whether algorithm changes, additional oversight, or halting use of the technology is the most appropriate and ethical response, depending on the risks and severity. A willingness to accept responsibility for downsides is what turns accountability from principle into practice.

Conclusion

In summary, while AI promises to improve our lives meaningfully, we cannot ignore the risks and costs of unethical or irresponsible progress. By prioritizing ethics and privacy at every step, from data collection to design to deployment and beyond, we can ensure that AI development is ethical, inclusive, trustworthy, and beneficial. Ultimately, responsible AI depends on it.

Frequently Asked Questions (FAQs)

What is privacy and AI ethics?

Privacy and AI ethics refer to ensuring that artificial intelligence progresses and operates in a manner that respects individuals’ rights, dignity, and well-being. It means managing risks related to privacy, security, bias, manipulation, lack of transparency, and data ownership that could undermine trust or compromise people’s agency if mishandled. Here are a few more points on privacy and AI ethics:

- Privacy and AI ethics focus on respecting human dignity and civil liberties as AI progresses. They aim to preserve what makes us human as intelligent technologies are developed.

- They require limiting data collection to what is necessary and justified. Strict controls are needed over how AI accesses and shares people’s private information.

- Consent and transparency are essential. People must be able to understand how AI systems work, access the data held about them, and learn how that information is used. Secrecy undermines ethics.

- AI bias and unfairness must be addressed proactively. Privacy and AI ethics promote inclusion and equal treatment, not discrimination. Diverse teams and independent oversight help catch bias hidden in complex algorithms and data.

Why are ethics and privacy important?

Ethics and privacy are critical because, without proper safeguards, AI has the potential for catastrophic harm. It could enable mass surveillance that violates people’s privacy, spread misinformation at scale, make unfair or harmful decisions that disadvantage groups, compromise civil liberties and fundamental human rights, or even pose existential threats to humanity if advanced AI becomes an uncontrolled force. Unethical AI threatens the social fabric that enables human progress, well-being, and civilization. This makes it essential to seek the help of a responsible artificial intelligence service provider.

What does privacy mean in AI?

Privacy in AI means limiting the collection, access, and use of personal data to what is legally authorized, strictly necessary, and ethically justified. It gives individuals the ability to access, correct, and delete their information, and calls for anonymizing or aggregating data where possible. Privacy safeguards help ensure AI does not compromise people’s ability to control their data, digital identities, and exposure. Privacy is essential for trust, dignity, equality, and freedom.

What are the ethical issues with AI privacy?

Critical ethical issues with AI privacy include:

- Bias and unfairness in data and algorithms, which can discriminate against groups.

- Lack of transparency into how AI systems access and use people’s information, which leads to a lack of understanding, oversight, and accountability.

- The potential for unauthorized access to, sharing of, or sale of users’ data.

- Lack of consent regarding data collection, tracking, and application.

- Threats from mass surveillance, manipulation, or insecure systems that can exploit private information.

- Imbalances of power over people’s data and lives.
