
Generative AI and LLMs are directly changing technology and business. Generative AI, broadly speaking, is any system that can produce a wide range of new outputs, such as music, images, and synthetic data, whether that means creating art or modeling complex medical scenarios. For many people, the term “generative AI” calls to mind large language model applications such as OpenAI’s ChatGPT. Large language models do make up a big part of the generative AI landscape, but they are only part of the story. LLMs focus on generating text that resembles human language. Having learned from vast amounts of textual data, they can write everything from emails to long reports.

Generative AI Market

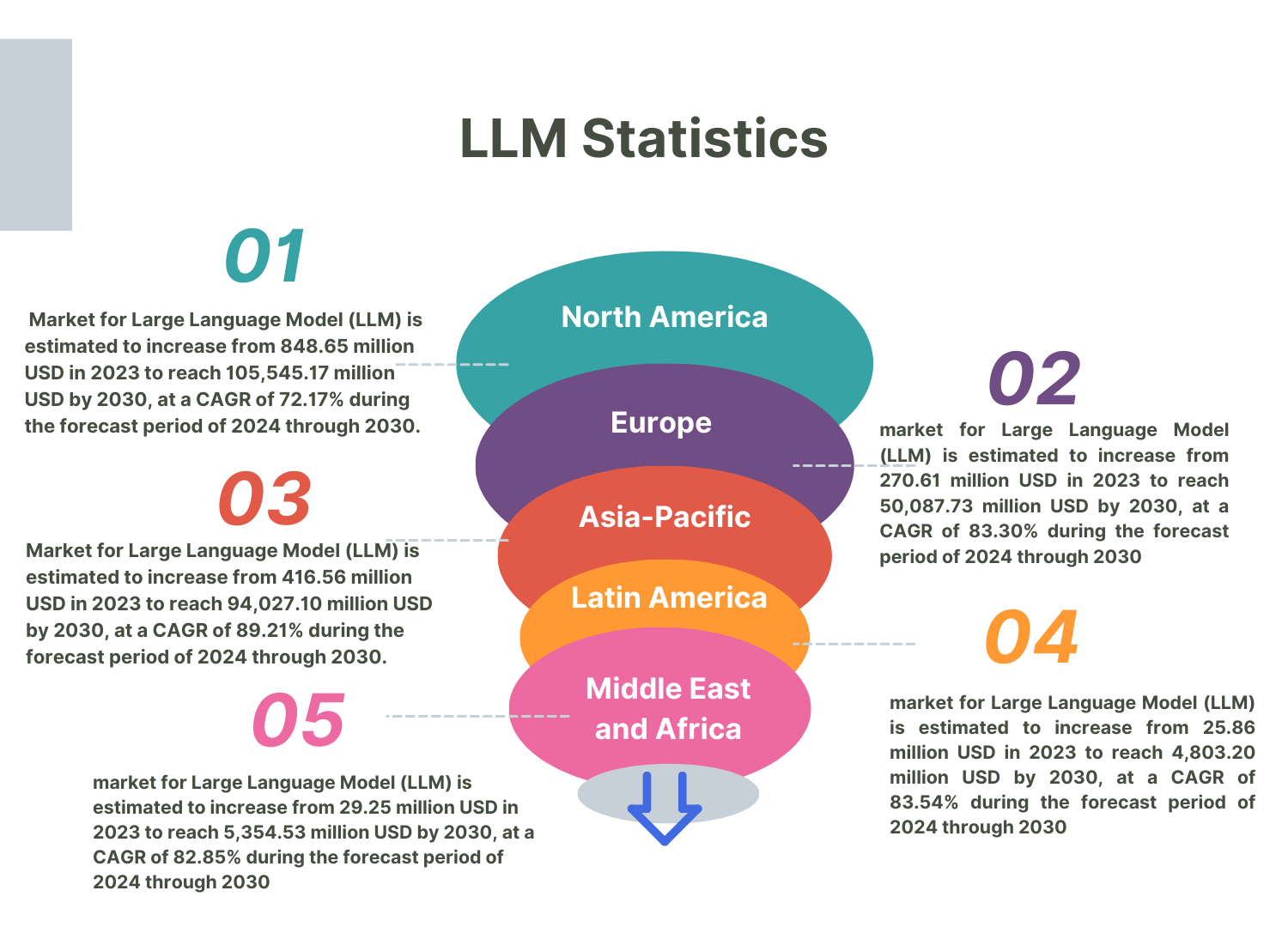

The generative AI market is projected to reach USD 356.10 billion by 2030, and the LLM market may be worth USD 40.8 billion by 2029. The capabilities of generative AI and large language models are poised to disrupt a variety of industries, reshaping creative processes across several sectors through new content, image, and music generation. Popular LLMs, in particular, are trained on an extremely broad body of text, which enables them to understand and generate remarkably human-like language. Prominent companies such as Google and OpenAI are spearheading these advancements. OpenAI’s GPT models are widely used for chatbots and content generation, while Google’s BERT model significantly improves language comprehension and search results. Facebook and DeepMind are additional noteworthy contributors.

Facebook uses LLMs for content moderation and translation, while DeepMind is applying generative AI in projects such as AlphaFold, which predicts protein structures. Despite their similarities, generative AI and LLMs serve distinct purposes and rest on different technical principles. In this blog, let’s take a deeper dive into the differences between LLMs and generative AI and the roles each one plays.

What is a Large Language Model?

So, what is a large language model? Large language models (LLMs) are deep learning models that handle text generation and classification, conversational question answering, translation, and other natural language processing (NLP) tasks. LLMs are trained on large-scale datasets of text and code that can run to trillions of words, and the quality of that data strongly affects how well the model performs.

Another crucial factor in popular LLMs is the number of parameters. For instance, Llama 2, a well-known LLM from Meta, comes in three sizes: 7B, 13B, and 70B parameters. Parameters are the numerical weights the model learns during training, and they can be thought of as the model’s knowledge foundation. Larger models are generally trained on larger datasets. Because LLMs may contain billions or even trillions of parameters, they require substantial computing resources to train and run.
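Parameter counts translate directly into hardware requirements. The short Python sketch below estimates the memory needed just to store a model’s weights, under the assumption of 2 bytes per parameter (16-bit precision). It ignores activations, caches, and training state, so real requirements are higher.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: 2 bytes per parameter (16-bit floats such as fp16/bf16);
# activations, KV caches, and optimizer state are ignored.
BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights at 16-bit precision")
```

At 16-bit precision a 7B model needs about 14 GB for its weights alone, and a 70B model about 140 GB, which is why the largest models are usually spread across several accelerators.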

Once trained, an LLM can respond to a prompt by rephrasing text, translating, completing words and sentences, or carrying on a chat.
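Text generation in an LLM is autoregressive: the model predicts one token, appends it to the sequence, and repeats. The sketch below illustrates only that loop. The probability table `TOY_NEXT_TOKEN_PROBS` and the `<prompt>`/`<end>` markers are hypothetical stand-ins for a trained network, and always picking the most likely token (greedy decoding) is just one simple strategy; deployed models often sample instead.

```python
# Hypothetical toy "model": maps the previous token to a probability
# distribution over the next token. A real LLM conditions on the whole
# prompt with a neural network; this table only illustrates the loop.
TOY_NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<prompt>": {"generative": 0.6, "language": 0.4},
    "generative": {"ai": 0.9, "models": 0.1},
    "ai": {"creates": 0.7, "<end>": 0.3},
    "creates": {"content": 0.8, "images": 0.2},
    "content": {"<end>": 1.0},
}


def generate_greedy(start: str, max_tokens: int = 10) -> list[str]:
    """Repeatedly append the most probable next token until <end> or the limit."""
    tokens = [start]
    for _ in range(max_tokens):
        dist = TOY_NEXT_TOKEN_PROBS.get(tokens[-1])
        if not dist:
            break  # no known continuation for this token
        next_token = max(dist, key=dist.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the starting token


print(" ".join(generate_greedy("<prompt>")))  # generative ai creates content
```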

Important Features of LLMs

In essence, LLMs are text-based generative language models that produce a response to a prompt or query.

Massive-scale LLMs are large neural networks that usually have hundreds of millions to billions of parameters. Their enormous size makes them very expressive, enabling them to store and process vast amounts of information.

The following are the key features of LLMs:

Pretraining and Fine-Tuning

Large language models are pretrained on large-scale text datasets sourced from the internet. Pretraining teaches them to predict the next words in sentences and gives them a general understanding of language and the world. After pretraining, they can be fine-tuned for particular tasks, which makes them adaptable and versatile.
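To make the next-word objective concrete, here is a deliberately tiny bigram model that learns which word tends to follow which by counting word pairs in a toy corpus. It illustrates the prediction task only, not how LLMs are built: real models use Transformer networks that condition on long contexts, and the corpus and function names here are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "large language models predict the next word",
    "language models learn from text",
    "models predict the next token",
]
model = train_bigram(corpus)
print(predict_next(model, "models"))  # predict
print(predict_next(model, "the"))     # next
```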

Architecture of Transformers

LLMs are built on the Transformer architecture, which excels at handling sequential data. Its attention mechanism lets the model weigh how important each word is relative to the other words in a sentence, capturing intricate connections and relationships.
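This weighting is usually implemented as scaled dot-product attention. The pure-Python sketch below computes it for a toy input. As a simplifying assumption it reuses the same vectors as queries, keys, and values, whereas real Transformers use learned projections, multiple attention heads, and optimized matrix libraries.

```python
import math

Vector = list[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[Vector], keys: list[Vector], values: list[Vector]):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) applied to the values."""
    d = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        weight_rows.append(weights)
        # Output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs, weight_rows


# Toy self-attention over three 2-d "word" vectors (hypothetical embeddings).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row sums to 1 and shows how strongly one word attends to every word in the sequence, including itself.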

Understanding Context

Large language models have a strong contextual understanding of language. They generate text by taking into account the context of each word or phrase, which lets them produce responses that are both coherent and relevant to the situation. This grasp of context plays a key role in their remarkable language generation abilities.

NLP vs LLM

LLMs can be used for many NLP tasks, including language translation, text summarization, sentiment analysis, and question answering. In the NLP vs LLM comparison, this versatility and strong language comprehension make large language models useful across a variety of fields and applications.

Together, these qualities make LLMs an effective tool for understanding and generating natural language, driving advances in chatbots, content creation, NLP, and other fields. However, their size and possible biases in their training data also raise computational and ethical concerns.


What is Generative AI?

The AI field of “generative artificial intelligence” is focused on developing models and algorithms capable of generating new, realistic data similar to the patterns present in the training dataset. It may also be explained as a family of AI systems wherein completely original data gets generated. These systems or models are trained on large datasets, after which they use that knowledge to generate wholly new content. That is why it’s called “generative.”

Generative AI applications number in the thousands and span a very wide range of industries, from computing to music creation, art, image synthesis, and natural language generation. Whereas most traditional AI systems, such as discriminative models, are built to label or classify existing data, generative AI tools are designed to create entirely new content.

Generative AI Model Types

Let us look at the different types of generative AI models that are pushing technology forward.

Generative AI models open new avenues for creative applications across a wide range of sectors. By understanding the different types, we can recognize the unique strengths each one offers and harness them to build new solutions.

Generative Adversarial Networks

GANs consist of two neural networks:

- A discriminator

- A generator

A generator produces realistic-looking data samples, while a discriminator judges whether each sample is real or generated. The two networks improve through constant competition, so the generator’s output becomes increasingly lifelike, from pictures and videos to three-dimensional models.
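The competition between the two networks is expressed through their loss functions. The sketch below computes the standard binary cross-entropy losses for a batch, given the discriminator’s probability that each sample is real. The input numbers are hypothetical, and a real GAN would use these losses to update both networks by gradient descent, which is not shown. The generator loss uses the common “non-saturating” form.

```python
import math


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Binary cross-entropy: real samples labelled 1, generated samples labelled 0.

    d_real / d_fake hold the discriminator's probabilities that each sample is real.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator wants D(G(z)) close to 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs on generated samples
print(round(generator_loss([0.1, 0.2]), 3))  # discriminator not fooled: higher loss
print(round(generator_loss([0.8, 0.9]), 3))  # discriminator fooled: lower loss
```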

Variational Autoencoders

Variational autoencoders (VAEs) are another popular type of generative model. An encoder compresses the incoming data into a lower-dimensional latent space that captures its key features. A decoder then maps points in that space back to data; by decoding new points sampled from the latent space, a VAE generates new samples that share properties with the original data. VAEs are frequently used to generate images, music, and text.
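Two pieces of arithmetic sit at the heart of a VAE: sampling a latent vector with the reparameterization trick, and a KL-divergence penalty that keeps the latent space close to a standard normal distribution. The sketch below shows both for a diagonal Gaussian. The encoder outputs `mu` and `log_var` are hypothetical numbers, and the encoder and decoder networks themselves are omitted. To generate new data, a trained VAE samples from N(0, 1) and passes the result through its decoder.

```python
import math
import random


def reparameterize(mu: list[float], log_var: list[float], rng: random.Random) -> list[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1).

    In a real VAE this form lets gradients flow through mu and sigma.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(42)
mu, log_var = [0.5, -0.2], [-1.0, -0.5]  # hypothetical encoder outputs for one input
z = reparameterize(mu, log_var, rng)
print(len(z), round(kl_to_standard_normal(mu, log_var), 4))
```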

Recurrent Neural Networks

Because recurrent neural networks (RNNs) are good at processing sequential data, they are well suited to tasks like speech recognition, natural language processing, and time series analysis. Their hidden state acts as a memory of past inputs, which lets RNNs produce outputs that capture temporal dependencies and context.
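The “memory” of an RNN is its hidden state, which is updated at every step from the current input and the previous state. The sketch below uses a single-unit Elman-style RNN with hypothetical scalar weights (`w_x`, `w_h`, `b`) to show that an early input still influences later outputs; real RNNs learn weight matrices from data.

```python
import math


def rnn_forward(inputs: list[float], w_x: float, w_h: float, b: float) -> list[float]:
    """Elman RNN with scalar input and state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


with_signal = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
without_signal = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
# The last input is 0.0 in both runs, yet the final states differ because
# the hidden state still carries the early 1.0.
print(round(with_signal[-1], 4), without_signal[-1])
```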

Transformer Models

Transformer-based models have recently received wide attention because they are highly effective across a broad range of natural language processing problems. Because they rely on self-attention, they can capture complex correlations within input data. Transformer models are used in chatbots, content creation, and translation, and they have proven very effective at producing high-quality material, as GPT-3 demonstrated.

Generative AI vs Large Language Models (LLMs)

When generative AI and LLMs are compared, three key differences become evident.

1. Not all generative AI tools are based on LLMs, but LLMs are a type of generative AI

Any artificial intelligence capable of producing original content falls under the broad area of generative AI. Large language models (LLMs) are among the foundational models on which generative AI tools are built; an LLM is effectively the text-generating component of generative AI.

2. LLMs produce solely textual outputs

LLMs produce text outputs, and in the past they could only take text inputs. When OpenAI first released ChatGPT in 2022, it was based on GPT-3.5, a text-only LLM. However, with the advent of “multimodal LLMs”, these models can now accept inputs such as audio and images. GPT-4, released by OpenAI in 2023, is an example of a multimodal LLM. Generative AI and LLMs will both change the industry, though in distinct ways.

Generative AI could change the way we create visual output, model 3D objects, and produce voice assistants and other audio. Although LLMs have other important applications and may contribute to broader generative AI systems such as voice assistants, they will primarily focus on text-based content generation.

3. LLMs are still expanding.

Although the foundations of LLMs were laid in the 2010s, they became far better known with the introduction of powerful generative AI tools like ChatGPT and Google’s Bard. According to Everest Group, the rapid growth in large language models’ parameter counts is one reason 2023 saw such exponential growth. GPT-3 already had 175 billion parameters, and OpenAI has not publicly disclosed GPT-4’s parameter count.

Let’s take a look at various other differences between generative AI and LLM.

| Factor | Generative AI | Large Language Models |
|---|---|---|
| Type of Content | Generates diverse content (text, images, music, code) | Tailored for text-based tasks (text generation, translation, analysis) |
| Data Availability | Requires diverse datasets specific to content type | Optimized for extensive text data |
| Task Complexity | Suited for complex, creative content generation | Excels in language understanding and text generation tasks |
| Model Size and Resources | Demands significant computational resources and storage | May be more efficient for text-focused tasks |
| Training Data Quality | Needs high-quality, diverse training data for meaningful outputs | Relies on large, clean text corpora |
| Application Domain | Creative fields (art, music, content creation) | Natural language processing (chatbots, summarization, translation) |
| Development Expertise | Requires expertise in machine learning and domain-specific knowledge | Pre-trained models are more accessible and user-friendly |
| Ethical and Privacy Considerations | Must consider ethical implications, especially with sensitive content | Often fine-tuned to adhere to specific ethical guidelines |

Use Cases for LLMs

Let’s take a look at the various LLM use cases:

Improved Chatbots: A large language model can improve chatbot functionality to enable more sophisticated customer service and engagement.

Deeper Sentiment Analysis: LLMs examine a text’s emotional undertones to support in-depth analysis of feedback.

Translation and Localization: LLMs adapt content for many languages and cultural contexts while preserving its linguistic and cultural significance.

Financial Analysis: LLMs are useful in finance to automate document analysis, detect fraud, and assess sentiment.

Medical Diagnosis and Research: By analyzing scientific literature, LLMs assist with medical research and support the diagnosis of illnesses.

Legal Document Analysis: To save time and money, a large language model assists with the analysis of legal papers by highlighting important sections and pertinent details.


Use Cases for Generative AI

Let’s take a look at various generative AI business use cases:

- Design: Generative AI transforms graphic design and video marketing by producing lifelike visuals, animations, and sounds.

- Automating Workflows: Particularly in the creative industries, it reduces repetitive chores and streamlines project workflows.

- Company Strategy Development: Using generative AI, operational roadmaps and company plans may be developed more intelligently.

- Worker Augmentation: Improves an employee’s ability to compose, edit, and arrange text, pictures, and other types of material.

- Healthcare Innovations: Drug development, illness progression prediction, and other medical advances are greatly aided by the use of generative AI.

- Architectural and engineering design: Generative AI tools help generate design models and offer a variety of options based on predetermined criteria.

- Procedural Content Generation in Video Games: By creating distinctive, dynamic settings and narratives, Generative AI systems in video games improve player experience.

- Synthetic datasets: Generative AI can produce synthetic datasets, which are valuable for training other AI models without requiring large amounts of real-world data.
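The simplest possible version of synthetic data generation is to fit a probability distribution to real data and then sample from it. The sketch below fits a single normal distribution with Python’s `statistics` module and draws synthetic values. The data are hypothetical, and practical synthetic-data generators (GANs, VAEs, and others) model far richer structure, such as correlations between columns.

```python
import random
import statistics


def fit_and_sample(real_values: list[float], n: int, seed: int = 0) -> list[float]:
    """Fit a normal distribution to the data, then draw n synthetic values from it."""
    mu = statistics.fmean(real_values)
    sigma = statistics.stdev(real_values)
    rng = random.Random(seed)  # seeded so results are reproducible
    return [rng.gauss(mu, sigma) for _ in range(n)]


real_order_values = [12.0, 15.5, 9.8, 14.2, 11.1, 13.7]  # hypothetical "real" data
synthetic = fit_and_sample(real_order_values, n=1000, seed=7)
print(round(statistics.fmean(synthetic), 2), round(statistics.stdev(synthetic), 2))
```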

LLM Platforms

OpenAI’s GPT-3.5/4

Leading the list of large language models, GPT-3.5/4 excels at producing human-like text, which makes it suitable for a variety of uses, including conversational agents and automated content creation. However, like other LLMs, it can occasionally generate irrelevant or factually inaccurate results, and it may reinforce biases present in its training data.

Google’s BERT

BERT is especially useful for natural language understanding tasks like sentiment analysis and question answering because it is very good at interpreting the context of words in sentences. However, it is not designed for text generation, which may be a drawback for some applications.

IBM Watson

Well known for offering a wide range of AI services, IBM Watson excels at language comprehension. It can be used for a variety of tasks, including data analysis and customer support. Although it requires additional setup time and familiarity with its API, its strength lies in its adaptability and its ability to integrate with many systems.

RoBERTa

Building on BERT, Facebook’s RoBERTa optimizes the training procedure for better performance on a variety of language tasks. Although it improves accuracy and effectiveness, its high computational overhead makes it less practical for teams with limited resources.

Tools for Generative AI

DeepArt

This generative AI tool uses neural networks to render photos in a variety of artistic styles. While it is very user-friendly and excels at creating artistic content, its focus on visual art limits its applicability to other types of content generation.

RunwayML

With tools for generating text, images, and video, RunwayML stands out among generative AI tools for its versatility and user-friendly interface. It balances capability and ease of use for creators without extensive technical knowledge, but it may not offer the deeper customization options that more experienced users want.

Artbreeder

Artbreeder uses Generative Adversarial Networks to give users creative control when generating visual art through image blending and editing. While it is very easy to work with, it applies only to visual media and cannot be used for other forms of media.

Jukebox by OpenAI

Unlike conventional music creation tools, Jukebox generates new songs across many genres. It is quite usable, but it still takes some knowledge of both AI and musical composition. It gives a rough sense of the creative possibilities generative AI opens up in areas that have long been the domain of human ingenuity.

LLMs and generative AI: A Dynamic Pair

Now that you know how generative AI and LLMs function in several practical contexts, consider this: when combined, they can improve a wide range of applications and open up fascinating new avenues for research and development. These include:

Creation of content

Generative language models can generate unique, contextually relevant creative output in a variety of media, such as writing, music, and images. A large language model that “understands” art history and can produce descriptions and assessments of artwork can improve a generative AI model trained on a dataset of artworks.

Among other industries, e-commerce benefits greatly from this combination of content development. Whatever products your online store offers, technology can create eye-catching marketing imagery and copy that will improve consumer engagement for your business. AI-assisted content can help you attract clients and boost sales more rapidly, whether you post it on your website or social media.

Personalization of Content

Generative AI and LLMs work together to enable you to expertly customize content for specific customers. While generative AI may provide tailored material based on preferences, such as targeted product suggestions, personalized content, and advertisements for potentially interesting products, LLMs can comprehend shopper preferences and provide unique recommendations in response.

Virtual assistants and chatbots

By using generative AI approaches, LLMs can improve the conversational skills of chatbots and virtual assistants. LLMs provide context and memory, while generative AI makes it possible to create engaging answers. As a result, conversations feel more human and natural, which may ultimately contribute to higher customer satisfaction.
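A common way chat applications provide this “memory” is to resend recent conversation history with each request, trimmed to fit the model’s context window. The sketch below keeps the newest messages that fit within a budget. `Message`, `build_context`, and the word-count budget are hypothetical simplifications; production systems count tokens with the model’s own tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # e.g. "user" or "assistant"
    text: str


def build_context(history: list[Message], budget: int) -> list[Message]:
    """Keep the most recent messages whose combined word count fits the budget.

    Word count is a stand-in for tokens in this sketch.
    """
    kept: list[Message] = []
    used = 0
    for msg in reversed(history):  # walk from newest to oldest
        cost = len(msg.text.split())
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    Message("user", "Which plans include priority support?"),
    Message("assistant", "The Pro and Enterprise plans include it."),
    Message("user", "And which one is cheaper?"),
]
context = build_context(history, budget=12)
print([m.role for m in context])  # ['assistant', 'user']
```

Here the oldest question no longer fits and is dropped, which is why very long chats can lose track of early details.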

Generating multimodal content

Generative AI models that operate with different modalities, including visuals or audio, can be integrated with a large language model. As a result, multimodal content can be produced. For example, the AI system can provide text captions for photos or music for movies. AI systems can provide richer, more immersive content that captures the attention of online prospects and buyers by fusing their skills in language understanding with content development.

Narrative creation and storytelling

When paired with generative AI, large language models can be used to generate stories and narratives. Human writers provide prompts and basic story ideas, and the AI system produces additional content while maintaining coherence and context. For online shopping, this partnership creates opportunities that can improve ROI and support the management of products’ lifecycles.

Translation and localization of content

To enhance content translation and localization, generative AI models can be useful in conjunction with LLMs. While generative AI can produce precise translations and localized versions of the content, a large language model architecture is capable of understanding language’s subtleties. This combination improves global communication and content accessibility by enabling more precise, contextually relevant translations in real-time.

Content synopsis

Concise summaries of long-form text can be produced by both generative AI models and large language models. Their advantages are as follows: generative AI can create shortened versions of the text that retain the main ideas, while LLMs are better at evaluating the context and salient features. This guarantees effective information retrieval and enables readers to rapidly understand the core concepts presented in lengthy papers.

Conclusion

Both generative AI and LLMs have unique benefits suited to different uses in the artificial intelligence space. While the best large language models are particularly good at understanding context and producing coherent writing, generative AI is better at creating original material and solving problems. Still, the full potential of combining large language models and generative AI goes well beyond what we see today. Together, they could transform even larger areas of the economy, from automated customer support and personalized recommendation systems to content creation and storytelling. By using LLMs to provide context and generative AI to provide creativity, AI solution providers can build practical, economically viable solutions that resonate broadly with people.

For people looking to learn more about big data tools and technologies, A3Logics provides complete end-to-end projects on generative AI, LLMs, data science, and other related fields. A3Logics, an artificial intelligence solutions company, provides downloadable source code and step-by-step tutorials for every project, allowing for practical implementation and hands-on learning. By working on these projects with a large language model development company, enthusiasts and experts can gain real-world experience and knowledge, enabling them to make full use of LLMs and generative AI in their own projects and activities.

FAQs

What are Generative AI and Large Language Models?

Generative AI is a class of artificial intelligence models designed to generate new content, be it text, images, music, or even code, by learning patterns from existing data. These models can produce highly diverse outputs that are often hard to distinguish from those created by humans.

Large language models are a subcategory of generative AI focused on text. They understand, generate, and manipulate human language. For instance, models like GPT-4, BERT, and T5 are widely used for tasks like text generation, language translation, and summarization.

How are Generative AI and LLMs related concerning content creation?

Generative AI can produce many types of content, from images to music and videos. It does this through several technologies, including generative adversarial networks, variational autoencoders, and recurrent neural networks.

LLMs, however, are specialized for text-based tasks. They use transformer architectures and are trained on vast amounts of text data to understand and generate human-like text. This makes them particularly effective for tasks like natural language understanding, text completion, and conversational AI.

What are the key differences in data requirements for Generative AI and LLMs?

Generative AI requires diverse datasets tailored to the specific type of content being generated. For instance, creating realistic images necessitates large datasets of images, while generating music requires extensive datasets of musical compositions.

LLMs rely on extensive text data for training. They are often trained on vast corpora that include books, articles, websites, and other text sources. The quality and diversity of the text data significantly impact the performance of LLMs.

How do task complexities compare between Generative AI and LLMs?

Generative AI is well-suited for complex, creative tasks that require producing varied and novel outputs. These tasks often involve high levels of creativity and pattern recognition across different content types.

LLMs excel at tasks that require understanding and generating text sensibly and relevantly. They perform best on tasks centered on interpreting and producing human language, such as text summarization, language translation, and conversational agents.

What are the resource requirements for Generative AI versus LLMs?

Generative AI models, especially those creating high-quality and diverse outputs, demand significant computational resources and storage. Training these models can be computationally intensive and resource-heavy.

LLMs also require substantial computational resources, particularly during training. However, for text-focused tasks they may be more resource-efficient than generative AI models that produce non-text content. Pre-trained LLMs can be fine-tuned for specific tasks with relatively little computational effort.