Google has announced Gemma, a new family of open language models that expands its AI portfolio. Created to encourage responsible AI development, Gemma reflects Google’s stated commitment to making AI available to everyone. The release follows Google’s recent Gemini improvements and has put the company back in the headlines in the rapidly changing field of artificial intelligence. Let’s examine the details of this release and its possible effects on the tech industry.

Introduction to Large Language Models

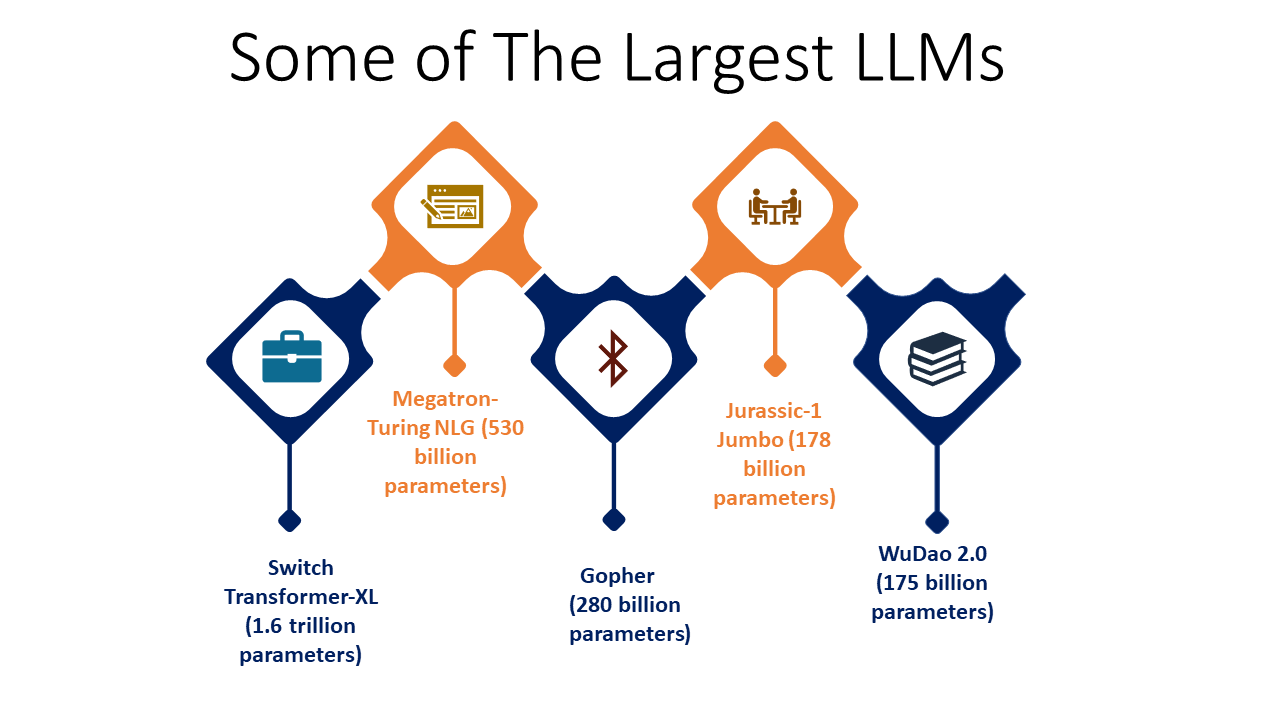

Modeling human language at scale is an extremely difficult and resource-intensive task. It has taken AI solution providers several decades to bring language models and large language models to their current state of development, and the market for them is projected to grow at a compound annual growth rate (CAGR) of 35.9% from 2024 to 2030.

Models generally become more sophisticated and effective as they get larger. Early language models could only estimate the probability of a single word, whereas today’s large language models can predict the probability of phrases, paragraphs, or even entire documents.

Over the past few years, the size and power of language models have skyrocketed as processing power, computer memory, and dataset sizes have increased and more efficient methods for modeling longer text sequences have been developed.

There is no fixed definition of “large”, but the label has been applied to models ranging from BERT (110M parameters) to PaLM 2 (up to 340B parameters). Parameters are the weights the model learned during training, which it uses to predict the next token in a sequence. “Large” can refer to the number of parameters in the model or, in some cases, to the number of words in the training dataset.
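To make the idea of predicting the next token concrete, here is a minimal sketch of the final step only: turning the raw scores a model produces for candidate tokens into probabilities with a softmax. The prompt, the candidate tokens, and the scores are invented for illustration; a real model computes such scores from billions of learned parameters over a vocabulary of many thousands of tokens.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

# Hypothetical scores for candidate next tokens after the prompt
# "The cat sat on the". These numbers are made up for the example.
toy_logits = {"mat": 3.2, "sofa": 2.1, "moon": -0.5}

probs = softmax(toy_logits)
next_token = max(probs, key=probs.get)  # greedy choice: most probable token
print(next_token, round(probs[next_token], 3))
```

Picking the single most probable token is only one decoding strategy; sampling from the distribution is a common alternative.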

Different kinds of LLM available

Several types of large language models are in use today. They differ mainly in how they are trained and how they are applied. Common types of LLM include:

Zero-Shot Model

A zero-shot model is a large, general-purpose model trained on a broad set of generic data. It can give fairly accurate results for general use cases and often needs no additional training before use. GPT-3 is a commonly cited example of a zero-shot model.

Fine-tuned or Domain Specific models

A zero-shot model that receives further training becomes a fine-tuned model. Because fine-tuned models are built to address more specialized situations, they tend to be smaller and more narrowly focused than their zero-shot counterparts. One example is OpenAI’s Codex, a code-generating model that is more specialized than its zero-shot predecessor, GPT-3. Another is BloombergGPT, a domain-specific model built for financial tasks.

Edge Model

Although edge models usually have an even smaller scope, they can function similarly to fine-tuned models. This kind of model is frequently made to respond to user interaction by providing instantaneous feedback. An example of an edge model in action is Google Translate.

Multimodal Model

Multimodal LLMs combine text with other types of data, such as images, audio, and video, and are designed to process and produce information across different modalities. GPT-4 is one example of a multimodal language model.

Introduction to Gemma: A Lightweight AI Model

The Gemma model is inspired by Gemini and takes its name from the Latin word gemma, meaning “precious stone”. Developed by Google DeepMind and other teams across Google, Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create Gemini.

Google describes Gemma as “responsible by design”, meaning that its AI Principles guided the design of the models. To make Gemma’s pre-trained models safe and dependable, automated techniques were used to filter sensitive data, including certain personal information, out of the training sets. In addition, extensive fine-tuning and reinforcement learning from human feedback (RLHF) were used to align the instruction-tuned models with responsible behaviors. To understand and reduce the risk profile of the Gemma models, Google carried out robust evaluations, including manual red-teaming, automated adversarial testing, and assessments of model capabilities for dangerous activities.

Demis Hassabis, co-founder and CEO of Google DeepMind, said: “We have a long history of supporting responsible open source and science, which can drive rapid research progress, so we’re proud to release Gemma: a set of lightweight open models, best-in-class for their size, inspired by the same tech used for Gemini.”

Gemma models share technical and infrastructure components with Gemini, Google’s most capable AI model widely available at the time of Gemma’s release. According to Google, this allows Gemma 2B and Gemma 7B to achieve best-in-class performance for their sizes compared with other open models. Gemma models can also run directly on a developer’s laptop or desktop computer. Google reports that Gemma outperforms noticeably larger models on certain benchmarks while meeting its requirements for responsible AI development and safe outputs. Gemma can be fine-tuned on your own data to adapt it to specific application needs, such as summarization or retrieval-augmented generation (RAG), and it supports a wide variety of tools and systems.
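As a rough illustration of the retrieval-augmented generation pattern mentioned above, the sketch below retrieves the most relevant document for a question and places it in the prompt that would then be sent to a model such as Gemma. Everything here is an assumption made for the example: the helper names, the word-count similarity measure, and the sample documents. Production systems typically use learned embeddings and a vector store instead.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    """Assemble a prompt that grounds the answer in the retrieved context."""
    context = "\n".join(retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical knowledge base for the example.
docs = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "Support is available by email and chat.",
]
prompt = build_prompt("How long do refunds take?", docs)
print(prompt)
```

The model call itself is left out on purpose; the point is that retrieval narrows what the model sees to the passages most likely to contain the answer.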

Gemma Vs. Gemini: Exploring the Similarities and Differences

Gemma was built on the same infrastructure and technology as Google’s Gemini. Although the two serve similar functions, each has its own advantages and disadvantages. Let’s examine where they overlap and where they differ.

Similarities between Gemma and Gemini

Both LLMs can handle a variety of natural language processing tasks. They also have the following in common:

Type and Development –

Both Gemma and Gemini are Large Language Models (LLMs) developed by Google. They use the same fundamental transformer architecture and are trained on massive datasets of text and code.

Purpose –

Both models are designed to perform various natural language processing (NLP) tasks such as text generation, translation, summarization, and question answering. They excel at understanding and responding to human language in a comprehensive and coherent way.

Underlying Technologies –

Both models share the same core technology, the transformer architecture. This architecture allows them to process and analyze large amounts of text data efficiently, enabling them to generate human-like text and perform other NLP tasks effectively.

Core Functionality –

At their core, both Gemma and Gemini offer similar functionalities in terms of understanding and responding to human language. They can process and generate text, translate languages, answer questions based on their knowledge, and summarize information provided to them.

Gemma Vs Gemini: Differences

Let’s take a look at the differences between Gemma and Gemini:

| Feature | Gemma | Gemini |
|---|---|---|
| Target audience | Developers and researchers | Businesses and research institutions |
| Size | 2 billion and 7 billion parameters | Nano: 1.8 billion and 3.25 billion parameters; Pro and Ultra sizes not publicly disclosed |
| Complexity | Lower | Higher |
| Computational resources | Runs on laptops and desktops | Larger variants require high-performance computing resources |
| Openness | Open model (weights freely available) | Closed (proprietary) |
| Focus | Performance and accessibility | Advanced capabilities and power |
| Cost | Free to use | May require licensing or subscription |

Features & Benefits of Gemma

The Gemma model stands out for its performance relative to its size. It comes in two variants: one with 7 billion parameters and another with 2 billion. Let’s take a look at some of the features and benefits of Gemma.

Lightweight Architecture

Gemma is substantially smaller than its larger cousins and is available in two sizes, 2B and 7B parameters. This makes it well suited to personal computers and, in some cases, mobile devices, and it translates into faster inference and lower processing loads.
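A back-of-the-envelope calculation shows why these sizes fit on consumer hardware. The sketch below estimates the memory needed just to hold the model weights at different numeric precisions. It uses the nominal parameter counts from the model names (actual counts differ somewhat), and it ignores activations, the attention cache, and runtime overhead, so treat the results as lower bounds rather than measured requirements.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gb(num_params, dtype):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

# Nominal sizes taken from the model names; an assumption for this estimate.
models = {"Gemma 2B": 2e9, "Gemma 7B": 7e9}

for name, params in models.items():
    for dtype in BYTES_PER_PARAM:
        print(f"{name} at {dtype}: about {weight_memory_gb(params, dtype):.1f} GB")
```

By this estimate, the 2B model at 16-bit precision needs about 4 GB for its weights, which is within reach of many laptops, while lower-precision (quantized) weights reduce the footprint further at some cost in accuracy.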

Open Source Availability

Gemma is openly available, unlike many high-performance LLMs, so academics and developers can play around with it, make changes, and help it grow.

Instruction-tuned Variants

In addition to pre-trained base models, Gemma provides “instruction-tuned” versions that have been further trained to follow instructions, which suits them to tasks such as summarizing and answering questions. This improves performance and adaptability in practical applications.
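Instruction-tuned Gemma models expect conversations in a specific turn-based format. The sketch below builds such a prompt by hand, assuming the `<start_of_turn>` and `<end_of_turn>` control tokens and the `user` and `model` role names described in Google’s Gemma documentation. The helper function is hypothetical, and in practice the tokenizer’s built-in chat template is the safer way to produce this text, so verify the format against the version of the model you use.

```python
def format_gemma_prompt(turns):
    """Build a prompt for an instruction-tuned Gemma model.

    turns: list of (role, text) pairs, where role is "user" or "model".
    The string ends with an open model turn so the model writes the reply.
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    parts.append("<start_of_turn>model\n")  # cue the model to respond
    return "".join(parts)

prompt = format_gemma_prompt([
    ("user", "Summarize in one sentence: Gemma is a family of open models."),
])
print(prompt)
```

The base (pre-trained) models are not trained on this format and are typically prompted with plain text instead.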

Core NLP functionalities

- Text generation: Like other LLMs, Gemma can generate different kinds of text, including creative content (poems, scripts), informational content (summaries, descriptions), and conversational responses for chatbots or virtual assistants.

- Translation: Gemma may be able to translate between languages, although its coverage and accuracy compared with other LLMs such as Gemini are not established.

- Summarization: Gemma can condense longer pieces of text into concise yet informative overviews.

- Question answering: Gemma can answer factual questions based on its training data, although, as with any LLM, its answers should be verified.

Democratizing Access to AI

Google hopes to democratize access to AI with Gemma by offering free access options on platforms such as Colab and Kaggle. In addition, new Google Cloud customers receive credits, which encourages exploration and creativity within the generative AI development community.

Fine-Tuning and Customization

Gemma’s real strength lies in its capacity for fine-tuning. You can use your own datasets to adapt the model to your specific requirements and improve its performance on your tasks. The reference documentation provides comprehensive instructions for customization and fine-tuning.

Getting started with Gemma is straightforward. With its broad capabilities, supportive community, and approachable design, Gemma opens up a world of possibilities for researchers and developers alike. Explore open LLM development and put Gemma to work in your next AI project!

Gemma’s influence extends beyond its technical attributes. By democratizing access to advanced LLMs, it encourages creativity and cooperation across the AI field. Its potential uses cover a wide range of applications, including scientific research, code generation, chatbots, and personal productivity aids. By lowering barriers to access, Gemma could accelerate natural language processing research and influence the future of AI.

Applications of Gemma

Because it is openly available and technically adaptable, Gemma is a useful tool for businesses that want to apply AI to a variety of operational objectives. It can support text-heavy data processing and is well suited to building chatbots and virtual assistants that improve customer experience, and businesses can tailor Gemma models to their own requirements. Let’s take a look at some of the applications of Gemma.

Conversational agents and chatbots

The smaller Gemma AI model, Gemma 2B, is perfect for creating conversational bots and virtual assistants since it is optimized for on-device efficiency. Without requiring a lot of processing power, businesses may use these AI-powered agents on embedded systems or mobile devices to improve customer assistance, engagement, and service.

Even though the Gemma model was just released, its features complement the many uses of AI chatbots and virtual agents who help clients. We anticipate direct integrations enabling next-generation conversational interfaces as the Gemma model develops.

Insights and Data Analysis

The larger Gemma 7B model is better suited to large datasets and document analysis because of its greater capacity for difficult tasks. Businesses with large volumes of data can use it to analyze trends and patterns and to gain insights that support strategic planning and decision-making.

Content Development and Synopsis

Gemma’s models can assist with creating and summarizing content, including reports, articles, and marketing materials. This makes producing high-quality content much faster and frees organizations to concentrate on strategy and innovation.

Customized Email Marketing and AdWords

By understanding and producing natural language, Gemma can help businesses develop more personalized and effective email marketing campaigns and ad targeting techniques. This may lead to higher customer engagement and conversion rates.

Edge Device Natural Language Processing (NLP)

Because of its optimizations, Gemma can run NLP tasks directly on edge devices. This makes real-time business decision-making and integrations possible in areas such as manufacturing, retail, and Internet of Things applications.

Developers’ Guide to Code Intelligence

By offering natural language interfaces for code editing and development operations, Gemma can increase developer efficiency. Developers can utilize conversational questions, for instance, to obtain code reviews, function descriptions, code recommendations, and assistance with debugging. Gemma would evaluate the meaning and context in order to make pertinent recommendations. This “AI pair programmer” can expedite the creation of AI-powered products, decrease errors, and streamline operations.

Application of Multimodality

The initial Gemma 2B and 7B models work with text only, so cross-modality use cases currently depend on pairing Gemma with separate speech or vision components or on future multimodal variants. Applications that need to engage with consumers in more natural and intuitive ways, such as augmented reality (AR) and virtual reality (VR), stand to benefit most from this kind of functionality. The combined AR and VR market is currently worth an estimated $32.1 billion and is projected to grow at a CAGR of 10.77% through 2028, reaching $58.8 billion.
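The market figures quoted above follow the standard compound-growth relationship, future value = present value × (1 + rate)^years. As a quick sanity check, the sketch below computes how many years of growth at the stated CAGR connect the two quoted values. The function names are illustrative, and the base year of the estimate is not given in the text, so the result is only the horizon implied by the three numbers.

```python
import math

def project(value, rate, years):
    """Compound a starting value at a fixed annual growth rate."""
    return value * (1 + rate) ** years

def years_to_reach(start, target, rate):
    """Years of compounding needed to grow from start to target."""
    return math.log(target / start) / math.log(1 + rate)

# Figures quoted in the text: $32.1B growing at 10.77% per year to $58.8B.
start, target, rate = 32.1, 58.8, 0.1077

implied_years = years_to_reach(start, target, rate)
print(f"Implied growth horizon: {implied_years:.1f} years")
```

The same two functions apply to any CAGR figure, including the LLM market growth rate cited earlier in the article.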

Future of Gemma

Gemma’s open availability and strong performance are generating significant buzz within the LLM community. But what lies in the future of Gemma?

Improvements to the LLM Landscape:

Gemma’s open design encourages cooperation and creativity. Researchers and developers around the world can advance it in areas such as interpretability, fairness, and efficiency. Gemma might also spur research into multimodal LLMs, which can process and produce audio, video, and images in addition to text.

Optimistic outlook:

With its democratizing approach and strong capabilities, Gemma is a big step toward making AI useful and accessible for everyone. We can expect more applications and breakthroughs as development continues. Gemma’s open design encourages a thriving community, which supports its continued development and its influence on future LLMs.

The collaboration between Google and Nvidia to optimize the performance of Gemma models on Nvidia chips is a prime example of how important cross-industry alliances are to the advancement of AI technology. These partnerships strengthen the technical capabilities of AI platforms and broaden their adoption across industries. Collaborations of this kind will be vital in shaping how useful AI development proves to be in the future.

Conclusion

The entry of the Gemma model into the LLM scene represents a momentous shift. Gemma provides accessibility and flexibility, making advanced AI capabilities available to a wider audience than its larger, more resource-intensive cousins. Because it is open-source, it encourages creativity and teamwork, which advances natural language processing and shapes artificial intelligence going forward.

The introduction of Gemma by Google is a critical turning point in the democratization of AI technologies. In addition to pushing the boundaries of technology, Google is enabling developers and companies to thrive by providing an open-source, laptop-friendly AI platform.

Gemma’s debut underscores Google’s role as an artificial intelligence development company that fosters a transparent, cooperative, and creative tech community. Looking ahead, Gemma has the potential to be a key player in the next wave of AI-driven innovation across numerous industries worldwide. Platforms like Gemma signify more than technical progress in the quickly developing field of artificial intelligence. They also point to the possibility of AI becoming a useful and indispensable part of daily life.

A3Logics, a large language model development company, offers a gateway to the potential of artificial intelligence (AI), whether you’re a prospective developer, a tech enthusiast, or a company trying to leverage the power of AI. As one of the top artificial intelligence companies, A3Logics can help you set out on this path.

FAQ

1. What is Gemma?

Gemma is a family of open-source, lightweight large language models (LLMs) developed by Google. It is inspired by and shares some technology with Google’s larger and more powerful LLM, Gemini.

2. Is Gemma open source?

Gemma is an open model: its weights are available for free, so anyone can download, fine-tune, and experiment with the models, subject to Google’s terms of use.

3. How is Gemini different from Gemma?

- Gemma is an open model, whereas Gemini is closed source.

- Gemini requires high-end resources whereas Gemma can function on low-end resources.

- Gemini serves the general audience whereas Gemma’s main focus is on developers and researchers.

4. What sizes does Gemma come in?

Gemma is available in two sizes, 2B and its larger version 7B.

5. What is an LLM?

LLM stands for Large Language Model. It refers to a kind of artificial intelligence (AI) program that is trained on large amounts of text data. This training allows LLMs to perform various tasks related to language understanding and generation.