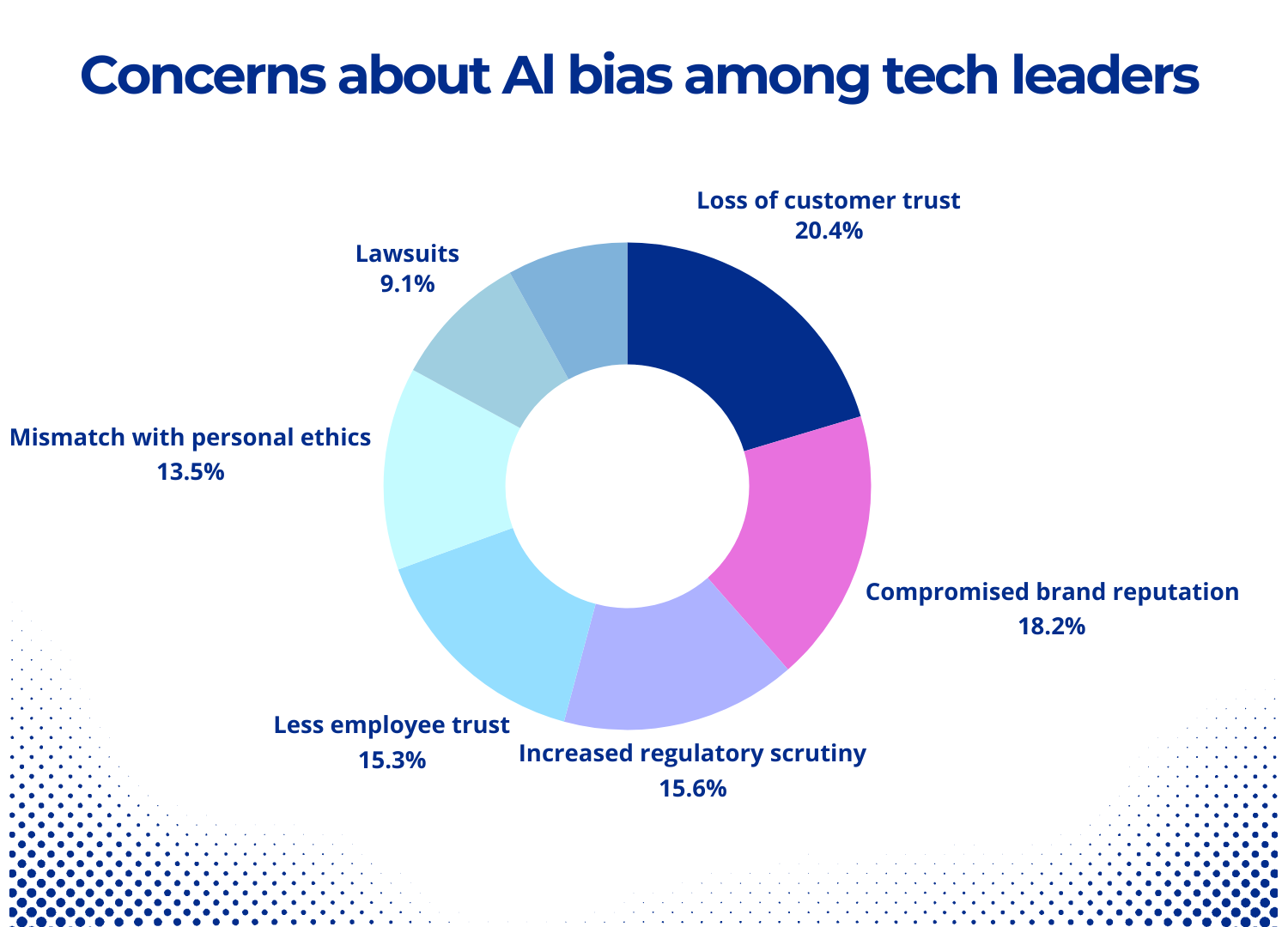

Bias and fairness have become pressing topics as artificial intelligence is widely used in sensitive fields such as hiring, cybersecurity, and healthcare. Human decision-making in these and other areas can also be flawed because of unconscious societal and personal biases. Will AI make less biased decisions than people do? Or will AI make these problems worse? Human biases are well documented. Field experiments show how much these biases can affect outcomes, and implicit association tests reveal prejudices we may not even be aware of. In recent years, society has begun to question the extent to which human prejudices can inadvertently find their way into artificial intelligence development, potentially leading to harmful outcomes. While AI can help detect and lessen the effects of human biases, it can also exacerbate the problem by embedding and deploying biases at scale in sensitive application domains.

Being fully aware of these hazards and working to mitigate them is of utmost importance at a time when many businesses are striving to integrate AI technologies into their operations. For instance, according to research published by the investigative news site ProPublica, a criminal justice algorithm used in Broward County, Florida, incorrectly labeled African-American defendants as “high risk” almost twice as often as white defendants. According to another study, natural language processing models trained on news articles can exhibit gender stereotypes.

Underlying data are often the source of bias

The primary cause of AI bias is typically the underlying data rather than the algorithm itself. Models can be trained on data that records human decisions, or on data that reflects the second-order effects of historical or societal injustices. Word embeddings (a set of natural language processing techniques) trained on news articles, for instance, might display societal gender stereotypes.

The way data is gathered or selected for use can also introduce bias. In criminal justice models, for example, oversampling certain districts because they are over-policed can lead to more recorded crime in those districts, which in turn prompts more policing.

User-generated data has the potential to produce biased feedback loops. According to Latanya Sweeney’s research on racial disparities in online ad targeting, searches for names associated with African Americans tended to yield more ads containing the word “arrest” than searches for names associated with white people. Sweeney hypothesized that even though the versions of the ad copy with and without the word “arrest” were initially shown equally, users may have clicked on different versions more often for different searches, causing the algorithm to display them more frequently.
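To make the feedback loop Sweeney hypothesized more concrete, here is a minimal toy sketch in Python. It is not a model of any real ad system: the function name, the click-through rates, and the re-weighting rule are all assumptions chosen for illustration. It only shows how two ad versions that start with equal display share can drift apart when the server keeps favoring whichever version was clicked more.

```python
def simulate_ad_feedback(ctr_a, ctr_b, rounds, share_a=0.5):
    """Return the display share of ad version A after each round.

    Hypothetical rule: each round, the ad server re-weights the two
    versions in proportion to the expected clicks each one just received.
    """
    history = [share_a]
    for _ in range(rounds):
        clicks_a = share_a * ctr_a         # expected clicks on version A
        clicks_b = (1 - share_a) * ctr_b   # expected clicks on version B
        share_a = clicks_a / (clicks_a + clicks_b)
        history.append(share_a)
    return history


# Made-up click-through rates: version A is clicked only slightly more often.
shares = simulate_ad_feedback(ctr_a=0.06, ctr_b=0.05, rounds=30)
print(f"start: {shares[0]:.2f}, after 30 rounds: {shares[-1]:.4f}")
```

Even a small difference in click behavior compounds round after round, which is why an initially even split does not protect a system against this kind of loop.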

Additionally, statistical connections that are prohibited by law or socially objectionable may be detected by machine learning solutions. For example, society and legal authorities may view it as unlawful age discrimination if a mortgage lending model determines that older people have a higher chance of defaulting and limits lending based on age.

What does artificial intelligence bias mean?

AI Bias, also known as machine learning bias or algorithm bias, describes AI systems that generate biased outcomes that mirror and reinforce human prejudices in a community, including social inequity that exists today and has existed historically. The original training set, the algorithm, or the predictions the algorithm generates can all contain AI Bias.

When bias in AI goes unaddressed, it makes it harder for people to participate in society and the economy. It also reduces AI’s potential. Businesses do not benefit from systems that produce skewed outcomes and foster mistrust among women, people with disabilities, people of color, the LGBTQ community, and other marginalized groups.

The causes of AI bias

To remove AI Bias from systems, it is necessary to thoroughly examine datasets, machine learning algorithms, and other components to find any potential sources of bias.

Training Data Bias

Because AI systems rely on training data to make decisions, it is critical to evaluate datasets for bias. One approach is to examine the data sampling for groups that are overrepresented or underrepresented in the training set. A facial recognition algorithm trained on data that over-represents white people, for instance, may produce errors when it tries to recognize people of color. In a similar vein, AI tools used by law enforcement may become biased against Black people if the security data they learn from was gathered mainly in predominantly Black areas. The way training data is labeled can also lead to bias. Qualified job applicants could be screened out if AI recruiting systems, for instance, use inconsistent labeling, ignore certain attributes, or over-weight others.
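As a rough illustration of the sampling check described above, the following Python sketch compares each group’s share of a training set with a reference share and reports the gaps. The function name, the group labels, the reference shares, and the 5-point tolerance are all hypothetical; in practice the reference would come from whatever population the system is meant to serve.

```python
from collections import Counter


def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Return groups whose share of `samples` differs from the reference
    share by more than `tolerance` (positive = over-represented)."""
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Made-up example: group labels attached to 100 training records.
training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}  # assumed target shares

gaps = representation_gaps(training_groups, reference)
print(gaps)
```

A check like this only flags imbalance in group counts. It says nothing about label quality, which needs a separate review.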

Algorithmic Bias

When faulty training data is used, algorithms may produce unfair results, make errors more frequently, or even amplify the bias present in the faulty data. Programming mistakes can also result in algorithmic bias. For example, a developer may unfairly weight certain factors in the algorithm’s decision-making process based on their own conscious or unconscious prejudices. The algorithm may then inadvertently discriminate against people of a particular race or gender based on indicators such as vocabulary or income.

Cognitive Bias

People’s experiences and preferences always influence how they process information and form opinions. As a result, humans may introduce these biases into AI systems when choosing which data to use or how to weight it. Cognitive bias, for instance, may lead to datasets gathered from Americans being favored over samples drawn from a range of populations around the world.

According to NIST, this source of bias in AI is more widespread than you might imagine. In its report Towards a Standard for Identifying and Managing Bias in Artificial Intelligence (NIST Special Publication 1270), NIST notes that “human and systemic institutional and societal factors are significant sources of AI bias as well and are currently overlooked. Successfully meeting this challenge will require taking all forms of bias into account. This means expanding our perspective beyond the machine learning pipeline to recognize and investigate how this technology is both created within and impacts our society.”

Examples of real-world AI bias

1. Amazon’s hiring algorithm discriminated against women

One of the most common settings in which AI bias appears in contemporary life is the workplace. Though there has been progress in the last few decades, women remain underrepresented in STEM (science, technology, engineering, and mathematics) professions. For instance, women held fewer than 25% of technical roles in 2020, according to Deloitte.

Amazon’s automated hiring process, which assessed candidates according to their fit for different positions, didn’t help with that. The algorithm was trained to determine an applicant’s suitability for a position by examining the resumes of past applicants. Unfortunately, in the process it developed a bias against women.

Because women were historically underrepresented in technical roles, the AI system learned to treat male applicants as the preferred choice. As a result, it penalized resumes from female candidates by giving them lower ratings. Although Amazon made modifications, it eventually abandoned the project in 2017.

2. COMPAS racial prejudice and recidivism rates

Artificial intelligence can reflect bias in other areas as well, not only gender. There are also numerous instances of racial bias in AI.

The Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) tool was used to predict how likely US defendants were to reoffend. ProPublica investigated COMPAS in 2016 and found that the system was much more likely to flag Black defendants as being at risk of reoffending than white defendants.

Although it correctly predicted reoffending at a rate of roughly 60% for both Black and white defendants, COMPAS:

- Incorrectly labeled almost twice as many Black defendants (45%) as white defendants (23%) as higher risk.

- Incorrectly classified more white defendants than Black defendants as low risk, with 48% of those white defendants and 28% of those Black defendants going on to commit new crimes.

- Classified Black defendants as higher risk 77% more often than white defendants when all other factors (such as age, gender, and prior crimes) were taken into account.
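The first two findings above compare error rates between groups: how often people who did not reoffend were flagged as higher risk (false positives), and how often people who did reoffend were labeled low risk (false negatives). The Python sketch below shows one way such a comparison might be computed. The function name, the record layout, and the toy data are assumptions made for illustration; they are not ProPublica’s method or data.

```python
def error_rates_by_group(records):
    """Compute false positive and false negative rates per group.

    `records` is an iterable of (group, predicted_high_risk, reoffended)
    tuples, where the last two fields are booleans.
    """
    counts = {}
    for group, predicted, actual in records:
        c = counts.setdefault(group, {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
        if predicted and actual:
            c["tp"] += 1
        elif predicted and not actual:
            c["fp"] += 1   # flagged high risk, did not reoffend
        elif not predicted and actual:
            c["fn"] += 1   # labeled low risk, did reoffend
        else:
            c["tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]   # people who did not reoffend
        positives = c["fn"] + c["tp"]   # people who did reoffend
        rates[group] = {
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
        }
    return rates


# Made-up toy data: 20 records per group.
toy_records = (
    [("x", True, False)] * 4 + [("x", False, False)] * 6
    + [("x", False, True)] * 3 + [("x", True, True)] * 7
    + [("y", True, False)] * 2 + [("y", False, False)] * 8
    + [("y", False, True)] * 5 + [("y", True, True)] * 5
)

rates = error_rates_by_group(toy_records)
for group, r in rates.items():
    print(group, r)
```

In this toy data both groups have similar overall accuracy, yet the errors fall differently: group x is wrongly flagged more often, while group y is wrongly cleared more often. That is the same kind of pattern the COMPAS figures describe.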

3. A US healthcare algorithm underestimated the needs of Black patients

AI can also mirror racial bias in the medical field. This was the case with an algorithm used by US hospitals. The system, which was applied to over 200 million individuals, was designed to identify which patients required additional medical care. It examined their healthcare cost history, assuming that what a person costs the healthcare system reflects their level of healthcare need.

But that assumption ignored differences in how Black and white patients pay for healthcare. According to a 2019 study in Science, Black patients are more likely to pay for active interventions, such as emergency hospital visits, even when they show signs of uncontrolled illnesses.

As a result, Black patients:

- Received lower risk scores than their white counterparts

- Were put on par with healthier white patients in terms of costs

- Did not qualify for extra care as often as white patients with comparable needs

4. ChatBot Tay tweets offensive things

Although Elon Musk’s acquisition of Twitter has garnered media attention recently, Microsoft’s attempt to showcase a chatbot on the platform generated even more controversy.

Microsoft launched Tay in 2016, intending it to learn from casual, playful conversations with other users of the platform.

Microsoft initially described how “relevant public data” would be “filtered, cleaned, and modeled.” But within a day the chatbot started tweeting racist, transphobic, and antisemitic messages. It learned discriminatory behavior from its interactions with users, many of whom were feeding it inflammatory content.

Techniques for Mitigating AI Bias

Keeping bias out of models is one of AI’s most difficult problems. Many factors can give rise to bias in AI, including the datasets you used to train your model as well as any human bias that may have inadvertently entered the process. Here are a few fundamental rules to follow:

Define and narrow your AI’s objective

To avoid AI bias in your model, you must define and narrow your artificial intelligence’s objective. Be specific about the problem you are trying to solve, and then specify exactly what you want your model to do with the data.

If you try to solve for too many scenarios, you may end up needing thousands of labels spread over an unmanageable number of classes. Defining the problem precisely from the start helps ensure that your model performs well for the exact purpose for which it was created.

For instance, if you’re trying to determine whether or not an image contains a cat, it’s crucial to specify exactly what qualifies as a “cat.” Does a white cat on a black background count? What about a black-and-white tabby? Can your model distinguish between these two cases?

Gather information in a way that accommodates dissenting viewpoints.

As you gather data, you must make sure you have a complete picture. Obtaining data in a fashion that accommodates differing viewpoints is among the most effective ways to accomplish this.

When developing deep learning algorithms, there are several approaches to characterize or describe a certain event. Take these variations into consideration and include them in your model. You will be able to record more differences in the behavior of the real world as a result.

For instance, let’s say your model is intended to predict whether a specific individual will make a purchase. You might ask your model to categorize individuals according to their occupation, age, and income bracket. But what if other factors can also predict this outcome? For instance, you might include how long a person has been looking for a new automobile or how many times they have already visited the dealership.

This is where feature engineering becomes crucial. Feature engineering is the process of extracting features from your data so that you can train the model on them. Be careful when deciding which features to include, because how well you can mitigate bias in your model depends on the features you select.

Understand your data

How to handle bias in datasets is a frequently debated topic. The better you know your data, the less likely it is that objectionable labels will inadvertently find their way into your algorithm.

Minimizing bias and AI governance

The first step in recognizing and resolving bias in AI is establishing AI governance, or the capacity to oversee, control, and steer an organization’s AI operations. In practice, AI governance establishes a set of guidelines, procedures, and frameworks to direct the ethical development and application of AI technology. When an AI solution provider implements AI governance properly, it helps ensure that firms, customers, employees, and society at large share fairly in the benefits.

Businesses can develop the following procedures by implementing AI governance policies:

Compliance: AI solutions and decisions about AI must be compliant with applicable laws and industry rules.

Trust: Businesses that strive to safeguard consumer data enhance consumer confidence in their brand and are more likely to develop reliable AI systems.

Transparency: Because AI is complex, an algorithm can be a “black box” system that provides minimal information about the data that was used to build it. Transparency contributes to the fairness of the outcomes and helps ensure that the system is built on unbiased data.

Efficiency: Reducing manual labor and saving employees time is one of the responsible AI principles. AI systems should be developed with cost reduction, faster time to market, and goal achievement in mind.

Fairness: Techniques for evaluating inclusiveness, equity, and fairness are frequently included in responsible AI principles. Counterfactual fairness is one such approach: it detects bias in a model’s decisions by checking that outcomes stay equitable even when sensitive attributes such as gender, race, or sexual orientation are changed.

Human touch: “Human-in-the-loop” systems provide options or suggestions that people then review before a decision is made, adding a layer of quality control.

Reinforcement learning: This machine learning method teaches a system how to perform tasks by using rewards and penalties. According to McKinsey, because it sidesteps human biases, reinforcement learning can produce “previously unimagined solutions and strategies that even seasoned practitioners might never have considered.”
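The idea behind the fairness check described under Fairness above can be sketched with a simple attribute-flip test in Python: change only the sensitive attribute and see whether the model’s decision changes. This is a simplified stand-in, not the full causal definition of counterfactual fairness, and the two toy “models”, the applicant fields, and the function name are all hypothetical.

```python
def counterfactual_flip_rate(model, rows, attribute, values):
    """Share of rows whose prediction changes when only `attribute`
    is swapped among `values` and everything else is held fixed."""
    flipped = 0
    for row in rows:
        predictions = {model({**row, attribute: v}) for v in values}
        if len(predictions) > 1:   # decision depends on the attribute
            flipped += 1
    return flipped / len(rows)


def biased_model(applicant):
    """Toy stand-in that (wrongly) penalizes one gender."""
    score = applicant["income"] / 1000
    if applicant["gender"] == "female":
        score -= 10
    return score >= 50


def neutral_model(applicant):
    """Toy stand-in that ignores gender entirely."""
    return applicant["income"] / 1000 >= 50


# Made-up applicants for illustration only.
applicants = [
    {"income": 45_000, "gender": "female"},
    {"income": 55_000, "gender": "male"},
    {"income": 52_000, "gender": "female"},
    {"income": 90_000, "gender": "male"},
]

biased_rate = counterfactual_flip_rate(
    biased_model, applicants, "gender", ["female", "male"])
neutral_rate = counterfactual_flip_rate(
    neutral_model, applicants, "gender", ["female", "male"])
print(biased_rate, neutral_rate)
```

A flip rate of zero on a test like this does not prove a model is fair, since other features can act as proxies for the sensitive attribute. A non-zero rate, however, is a clear signal worth investigating.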

How is societal bias reflected in AI?

Unfortunately, AI is susceptible to human prejudices. If we put real effort into ensuring ethical AI, it can help humans make more objective decisions. AI bias is often caused by the underlying data rather than the method itself. With that in mind, the following are some notable findings from a McKinsey study on tackling bias in AI:

- Models may be trained on data that reflects societal or historical inequalities, or on data that records human choices. For instance, word embeddings (a set of natural language processing techniques) trained on news stories may reflect societal gender biases.

- The method used to collect the data or select it for use may introduce bias. For example, oversampling certain locations in criminal justice AI models may yield more recorded crime data for those areas, which may result in increased enforcement.

- Data created by users can create a biased feedback loop. Researchers found that searches for African-American-identifying names returned more ads containing the word “arrest” than searches for white-identifying names. They hypothesized that even if the versions of the ad copy with and without “arrest” were initially shown equally, users may have clicked on different versions more frequently for different searches, which led the system to display them more often.

- Machine learning models can identify statistical relationships that are either illegal or socially undesirable. For example, an advanced mortgage lending model may conclude that elderly borrowers are more likely to default and are therefore less creditworthy. If the model reaches this conclusion based only on an individual’s age, we may be dealing with unlawful age discrimination.

Here’s another AI bias incident worth mentioning: the Apple Card credit limit controversy.

After accepting David Heinemeier Hansson’s application, Apple Card gave him a credit limit 20 times higher than that of his wife, Jamie Heinemeier Hansson. The credit limit granted to Janet Hill, the spouse of Apple co-founder Steve Wozniak, was merely ten percent of her husband’s. It is, of course, improper and unlawful to base a creditworthiness decision on a person’s gender.

What steps can we take to address AI’s biases?

Here are a few of the suggested fixes for how to prevent AI bias:

1. Recognize the situations where AI can mitigate bias and those where there is a significant chance that AI will make bias worse.

When implementing AI, it’s critical to consider which domains may be vulnerable to unfair bias, such as those with skewed data or a history of biased systems. Organizations will need to stay current to understand how and where AI can improve fairness, as well as where AI systems have fallen short.

2. Create procedures and guidelines for detecting and reducing bias in AI systems.

Combating unfair bias will require a variety of tools and techniques in your AI strategy. Technical tools can identify likely sources of bias and reveal which characteristics of the data have the greatest impact on the results. Operational tactics can include improving data collection through more thoughtful sampling, as well as using internal “red teams” or outside parties to audit data and models. Lastly, transparency about processes and metrics can help observers understand the steps taken to promote fairness and any associated trade-offs.

3. Have fact-based discussions regarding possible prejudices in human judgment.

As AI reveals more about human decision-making, leaders should consider whether the proxies used in the past are adequate and how AI can help by bringing to light long-standing biases that may have gone unnoticed. When models trained on recent human decisions or behavior show bias, businesses should consider how the underlying human-driven processes could be improved in the future.

4. Thoroughly investigate the best ways for humans and machines to collaborate.

This entails weighing the use cases and circumstances in which automated decision-making is appropriate (and prepared for the real world) against those in which human involvement is always necessary. A few methods that show promise combine human and machine learning to minimize AI bias. Methods along these lines include “human-in-the-loop” decision-making, in which algorithms offer suggestions or possibilities, which people then confirm or select. Transparency regarding the algorithm’s confidence in its recommendation in such systems can aid humans in determining how much weight to assign.
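A bare-bones Python sketch of the “human-in-the-loop” pattern described above might look like the following. The algorithm only recommends, the reviewer sees the recommendation together with the model’s confidence, and the reviewer’s choice is final. The `Suggestion` fields, the `decide` function, and the example reviewer policy with its 0.8 cut-off are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    case_id: str
    recommended: str    # what the algorithm proposes
    confidence: float   # model's self-reported confidence in [0, 1]


def decide(suggestion, reviewer):
    """Pass the suggestion to a human reviewer and record the outcome.

    `reviewer` is any callable that takes a Suggestion and returns the
    final decision; the model never decides on its own.
    """
    final = reviewer(suggestion)
    return {
        "case_id": suggestion.case_id,
        "model_recommended": suggestion.recommended,
        "confidence": suggestion.confidence,
        "final_decision": final,
        "overridden": final != suggestion.recommended,
    }


def cautious_reviewer(suggestion):
    """Hypothetical reviewer policy: accept confident recommendations,
    escalate everything else for a closer look."""
    if suggestion.confidence >= 0.8:
        return suggestion.recommended
    return "escalate"


confident = decide(Suggestion("case-1", "approve", 0.93), cautious_reviewer)
uncertain = decide(Suggestion("case-2", "reject", 0.55), cautious_reviewer)
print(confident["final_decision"], uncertain["final_decision"])
```

Recording whether each recommendation was overridden also produces an audit trail, which can later show where the model and its reviewers tend to disagree.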

5. Increase funding for bias research, use a multidisciplinary approach, and make more data available for study (while maintaining privacy).

Technical and multidisciplinary research has advanced significantly in recent years, but more funding will be required to continue these efforts. Business leaders can also contribute by making more data available to those working on these issues across organizations, while remaining mindful of privacy concerns and potential risks. Further progress will require interdisciplinary participation from ethicists, social scientists, and the specialists who best understand the specifics of each application area. As your AI strategy develops and real-world experience grows, a crucial component of the interdisciplinary approach will be to continuously assess and analyze the impact of artificial intelligence on decision-making.

6. Increase your investment in diversifying the AI industry.

The AI field itself does not reflect the diversity of society, including diversity of gender, race, location, class, and physical disability. An AI consulting services company that is more diverse will be better able to engage populations who are likely to be affected by bias, and better able to anticipate, identify, and evaluate cases of unfair bias. Investments are necessary on several fronts, but above all in AI training opportunities and access to tools.

Will unbiased AI ever exist?

The short answer? Yes and no. A completely unbiased AI is possible in principle, but unlikely, because it is improbable that a completely unbiased human mind will ever exist. An artificial intelligence system is only as good as the data it is fed. Suppose you could remove every conscious and unconscious assumption about gender, ethnicity, and other ideological notions from your training dataset. In that scenario, you would be able to build an artificial intelligence system that makes unbiased, data-driven decisions.

In real life, however, we know this is unlikely. The data that AI consumes is generated by humans, so it is possible that neither a completely unbiased human mind nor a completely unbiased AI system will ever be achieved. Humans create the skewed data, and humans and human-made algorithms are the ones that check the data to identify and fix bias. Still, by validating data and algorithms and applying best practices for data collection, data use, and AI algorithm development, we can counteract AI bias.

Conclusion

As AI develops and grows, we will see more and more cases where technology perpetuates bias. Raising awareness and educating people are the best ways to combat this. An AI consulting company must first acknowledge its own prejudices and take them into account when making decisions about AI systems. Every human has biases that color the way we perceive the world. Companies must be transparent about their data sets so that outside parties can analyze them. These outside reviewers may notice patterns or trends that were previously overlooked. We must also educate ourselves about how our prejudices affect our daily lives and how, if left unchecked, they may eventually damage artificial intelligence (AI) systems.

FAQs

How should we handle decisions made by AI systems if we discover unintentional bias in them?

Humans create the biased data, and both humans and human-made algorithms carry out the detection and removal of bias. By validating data and algorithms and building AI systems with responsible AI principles in mind, we can reduce AI bias.

How can you make sure your AI systems are impartial?

Agencies can reduce bias in new technologies by implementing additional measures like:

- diverse team composition,

- extensive user research,

- a variety of data sources,

- bias detection and mitigation,

- bias training,

- iterative testing and development, and

- ethics reviews.

How to detect bias in AI?

There are several techniques for identifying bias in AI systems, including data analysis, algorithm analysis, human analysis, and context analysis. Descriptive statistics, data visualization, data sampling, and data quality evaluation are all applicable to data analysis.
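As one small example of the descriptive statistics mentioned above, the Python sketch below computes the rate of favorable outcomes per group in a dataset and the ratio between the lowest and highest rate. The function names, the field names, and the toy rows are assumptions for illustration. A low ratio is a prompt for closer investigation, not proof of bias on its own.

```python
def positive_rate_by_group(rows, group_key, outcome_key):
    """Share of rows with a truthy outcome, computed per group."""
    totals, positives = {}, {}
    for row in rows:
        group = row[group_key]
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if row[outcome_key] else 0)
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else None


# Made-up toy data: 10 applicants per group, with a hiring outcome.
toy_rows = (
    [{"group": "a", "hired": True}] * 6 + [{"group": "a", "hired": False}] * 4
    + [{"group": "b", "hired": True}] * 3 + [{"group": "b", "hired": False}] * 7
)

hire_rates = positive_rate_by_group(toy_rows, "group", "hired")
ratio = rate_ratio(hire_rates)
print(hire_rates, ratio)
```

The same two functions can be run on historical labels before training and on model predictions after training, which makes it easier to see whether a model narrows or widens a gap already present in the data.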

Can AI Bias occur when there is no human interaction?

Since people are the ones who generate data, AI can only be as good as its data. Humans exhibit bias in many ways, and the list of known biases keeps growing as new ones are identified. As a result, just as it may not be feasible for a human mind to be entirely objective, it may not be feasible for an AI system either.