Table of Contents

OpenAI, an AI research and development company has revealed on 16th February 2024, a text-to-video model, called Sora which could raise the bar for what’s possible in generative AI. The term “Sora” refers to “sky” in Japanese. It is speculated that they intentionally named it after Tokyo, as suggested by their official release tweet featuring a themed video.

Like Google’s text-to-video device Lumiere, Sora’s availability is limited. Unlike Lumiere, Sora can generate videos as long as 1 minute long.

Text-to-video has become the latest arms race in generative AI as OpenAI, Google, Microsoft, and more look beyond text and image generation and seek to cement their situation in a sector projected to reach $1.3 trillion in revenue by 2032. Consumers who have been fascinated by generative AI development since ChatGPT arrived a little more than a year ago will be influenced by it.

According to a post on Thursday from OpenAI, maker of both ChatGPT and Dall-E, Sora will be available to “red teamers,” or experts in areas like misinformation, bias, and hateful to adversarial test the model. Adversarial testing will be especially important to address the potential for influencing deep fakes which is a major area of concern for the use of AI to create images and videos. Additionally, it would be available to visual artists, designers, and filmmakers to gain additional feedback from creative professionals.

Introduction to the new AI model

Sora, a Generative AI model introduced on February 16, 2024 created and freely announced by OpenAI – – an artificial intelligence research company in February 2024. OpenAI was founded by a group of entrepreneurs and researchers Elon Musk and Sam Altman in 2015. OpenAI is supported by Microsoft, and by several other investors such as Thrive Capital, Micro VC, and many others. It has also created Dall-E and AI text-to-image generators and trained GPT-3.5, and GPT-4 & the latest and most powerful OpenAI model.

Sora is able to generate videos for as long as a minute extended period maintaining visual quality and commitment to the user’s brief. Sora can generate complex scenes with multiple scenarios, specific types of movement, and accurate details of the main subject and the background. The artificial intelligence model understands what the user has prompted, yet additionally how the details exist in the physical world. OpenAI wrote in its announcement. “Sora serves as a foundation for models that can understand and simulate the real world,”

Source: OpenAI

How does Sora work?

Sora is a diffusion AI model that, like ChatGPT, uses the Transformer architecture, introduced by Google researchers in a 2017 paper. “The world is multimodal,” OpenAI COO Brad Lightcap told CNBC in November. “If you think about the way we as humans process the world and engage with the world, we see things, we hear things, we say things — the world is much bigger than text. So to us, it always felt incomplete for text and code to be the single modalities, the single interfaces that we could have to know how powerful these models are and what they can do.”

Sora has so far just been available to a small gathering of safety testers, or “red teamers,”. They test the model for vulnerabilities in areas like misinformation and bias. The company hasn’t released any open demonstrations beyond 10 sample clips available on its website, and it said its accompanying technical paper will be released later.

OpenAI also said it’s building a “detection classifier” that can identify Sora-generated video clips, and that it plans to include certain metadata in the result to help with identifying AI-generated content. Furthermore, it’s the same type of metadata that Meta is hoping to use to identify AI-generated images this election year.

Features Of Sora

Sora, OpenAI’s latest text-to-video model, introduces a suite of innovative features that redefine content creation and storytelling. Overall, here are eight key features that distinguish Sora AI:

1. Dynamic Scene Generation:

– Sora excels in dynamically generating scenes based on textual descriptions. Furthermore, it leverages advanced algorithms to rejuvenate written concepts, enabling a seamless transition from text to visually compelling videos.

2. Multimodal Understanding:

– Unlike traditional models, Sora possesses multimodal understanding, processing text, and image inputs simultaneously. Furthermore, this dual capability enhances its ability to interpret and translate textual descriptions into vibrant visual sequences.

3. Enhanced Creativity:

– Sora goes beyond conventional text-to-video models by incorporating a serious level of creativity. Furthermore, it can generate diverse and imaginative visual content, making it a versatile device for various industries. That also includes entertainment, marketing, and education.

4. Adaptive Learning from Data:

– Through adaptive learning mechanisms, Sora ceaselessly refines its understanding and performance based on the data it processes. Furthermore, this ensures that the model evolves, becoming more adept at capturing nuances in text and translating them into visually engaging sequences.

5. Temporal Coherence:

– Maintaining temporal coherence is critical for video generation. Sora excels in this aspect, ensuring smooth transitions and logical progression in the generated video sequences. This feature enhances the overall viewing experience and makes the content more compelling.

6. Realistic Animation:

– Sora introduces realistic animation to the text-to-video landscape. Furthermore, It can dynamically animate characters, objects, and scenes, adding a layer of realism that enhances the narrative and captivates the audience.

7. Scalability Across Genres:

– One of Sora’s standout features is its scalability across diverse genres. Whether producing instructional videos, commercials, or entertainment pieces, Sora adjusts to the particular needs of the genre, demonstrating its adaptability and scope of use.

8. User-Friendly Interface:

– User-friendliness has been given top priority in Sora’s interface by Open AI ChatGPT, opening it out to a wider audience. The model is intuitive. It allows users to easily include text and receive visually striking video yields without the need for extensive technical expertise.

To sum up, Sora AI is evidence of OpenAI’s dedication to improving text-to-video model capabilities. Sora expands the possibilities for artistic creation with characteristics like adaptive learning, multimodal comprehension, and dynamic scene generation. making it an effective tool for professionals in a variety of industries, including content producers and storytellers.

As the field of AI continues to evolve, Sora represents a significant leap forward in the combination of language and visual arts, opening possibilities for more engaging and immersive content experiences.

How Sora is different from other AI models

OpenAI developed two specific machine learning models, Sora AI and ChatGPT, each serving unique purposes and showcasing specific capabilities. Here are the key differences between Sora AI and ChatGPT:

| Aspect | Sora AI | ChatGPT |

|---|---|---|

| Purpose and Functionality: | Sora was primarily created for text-to-video production, and it is quite good at turning text descriptions into animated and visually appealing videos. Its main objective is to improve content creation’s creative elements. | ChatGPT was created for natural language generation and understanding. It can have conversations and offer well-reasoned answers. It functions as a conversational agent that can comprehend and produce writing that is similar to that of a human being on a variety of subjects. |

| Output Medium: | Generates video yield, making it suitable for applications in visual storytelling, multimedia content creation, and dynamic scene generation. | Produces text-based responses and is tailored for chat-based interactions, text-based queries, and generating written content. |

| Multimodal Capabilities: | Possesses multimodal understanding by processing text and image inputs simultaneously. This enables it to create visually appealing videos based on textual descriptions. | Primarily focuses on text-based interactions and does not inherently process or generate visual content. It excels in understanding and generating written language.. |

| Creativity and Animation: | Emphasizes creativity and realistic animation, carrying an elevated degree of dynamism to the generated video content. This design caters to a wide range of creative applications. | While capable of generating creative text, ChatGPT does not inherently include features for dynamic animation or visual content creation. |

| Temporal Coherence: | Maintains temporal coherence in video sequences, ensuring smooth transitions and logical progression in the generated content. | Focuses on coherence in textual responses during conversational interactions and does not inherently address temporal coherence in a visual context. |

| User Interface and Accessibility: | Prioritizes a user-friendly interface, making it accessible to a broader audience. Users find it intuitive, allowing for easy text contribution and visually striking video generation. | Typically interfaces with users through text-based data sources and results, giving a straightforward conversational experience. |

When Will Sora be accessible to the public?

Security researchers are currently putting Sora through serious hardships to ensure its safety and security before releasing it to the general society and assessing “critical dangers.”

Artists, filmmakers, and designers, including OpenAI, have been given access to Sora. (In this version, OpenAI is no longer speaking passively about itself.)

Some in-the-loop accounts on the OpenAI gathering seem to signal that there will be a waiting rundown rolled out at some point, which will be the main chance to get your hands on it.

Unfortunately, there is no sign of when we’ll be able to join Sora. Furthermore, all the content that’s been becoming a web sensation on the internet over the past hours has come out of the announcement blog entry published by OpenAI.

Interestingly, it doesn’t seem like AI application has even given a vague indication of when it very well may be made generally available – there’s not even been an indication that it will be released for the current year.

That’s quite unusual for such a large announcement, and could suggest it’s far off open release – however at that point again, OpenAI does admit that it’s sharing its research early. With the speed that the artificial intelligence industry has moved over the past two years, the true launch date is anyone’s guess.

Risks associated with Sora, a New AI model

The presentation of the Sora model by OpenAI raises serious concerns about its potential misuse in generating harmful content, including but not limited to

1. Creation of Pornographic Content:

Sora’s ability to generate realistic and excellent videos based on textual prompt engineering techniques may pose a gamble in the creation of explicit or pornographic material. Furthermore, malicious users could leverage the model to produce inappropriate, exploitative, and harmful content.

2. Propagation of Fake News and Misinformation:

Someone can misuse Sora’s text-to-video capabilities to create persuasive fake news or misinformation. For example, the model could generate realistic-looking videos of political leaders making false statements, spreading misinformation, and potentially harming public perception and trust.

Creation of Content Endangering General Health Measures:

Sora’s ability to generate videos based on optimized prompts raises concerns about creating misleading content related to general health measures. Furthermore, malicious actors could use the model to create videos discouraging vaccination, advancing false cures, or undermining general health guidelines, jeopardizing public safety.

Struggling to Give The Correct Prompts for Effective Utilization of Generative AI

4. Potential for Disharmony and Social Unrest:

Someone may exploit the realistic nature of videos generated by Sora to create content that blends disharmony and social unrest. For instance, the model could generate fake videos depicting anything misleading or against morality like false violence, discrimination, or unrest incidents, resulting in tensions and potential real-world consequences.

Furthermore, OpenAI recognizes the potential for misuse and is taking steps to address safety concerns.

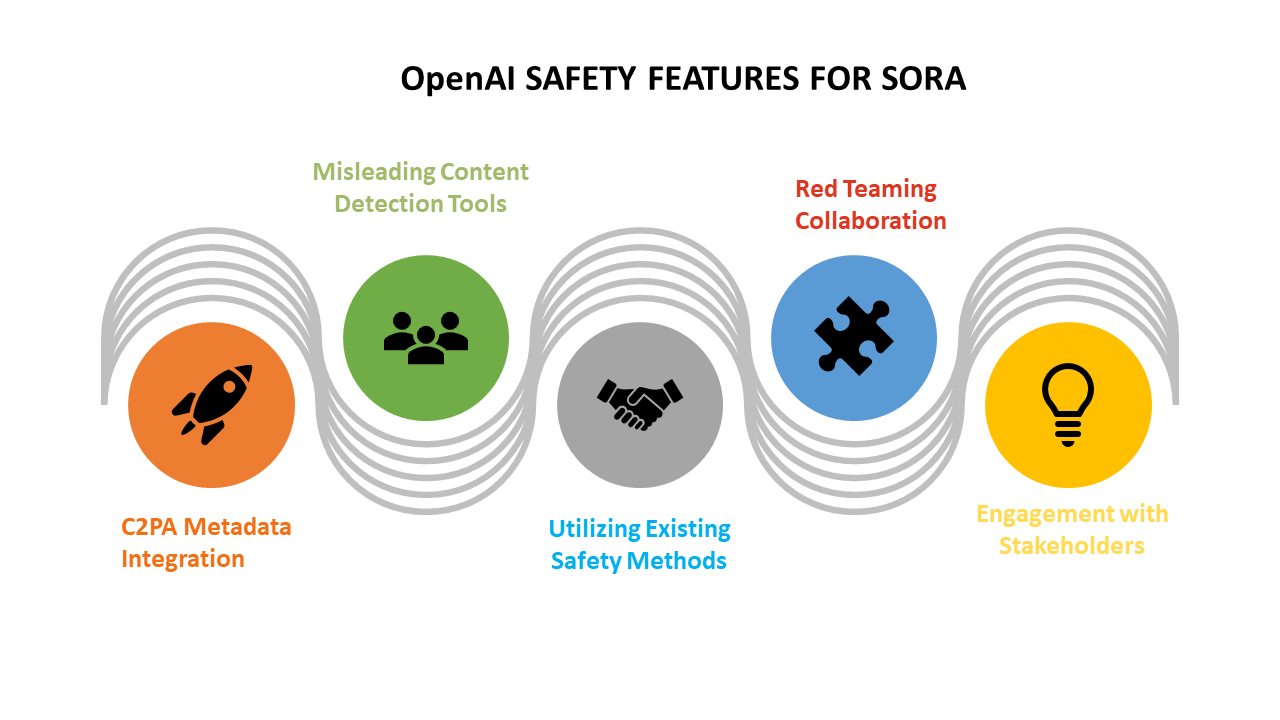

OpenAI’s Safety Measure for Sora Model

OpenAI is implementing several crucial safety measures preceding the release of the Sora model in their items. Key focuses include:

1. Red Teamers Collaboration

OpenAI is collaborating with red teamers, and experts in domains like misinformation, hateful content, and bias.

Furthermore, These experts will direct adversarial testing to evaluate the model’s robustness and identify potential dangers.

2. Misleading Content Detection Tools

OpenAI is developing tools, including a detection classifier, to identify misleading content generated by Sora.

The goal is to enhance content examination and maintain transparency in distinguishing between AI-generated and authentic content.

3. C2PA Metadata Integration

OpenAI plans to include C2PA metadata in the future deployment of the model inside their items.

The Sora model generates the video, and this metadata serves as an additional layer of information to indicate it.

4. Using Existing Safety Methods

OpenAI is leveraging safety methods already established for items utilizing DALL·E 3, which are relevant to Sora.

Techniques include a text classifier to reject prompts violating usage policies and image classifiers to review generated video frames for strategy adherence.

5. Engagement with Stakeholders

OpenAI will engage with policymakers, educators, and artists globally to understand concerns and identify positive use cases.

The aim is to gather diverse perspectives and feedback to advise responsible deployment and usage regarding the technology.

6. Real-world Learning Approach

Despite extensive research and testing, OpenAI acknowledges the unpredictability of technology use.

Learning from real-world use is deemed essential for continually enhancing the safety of AI systems over time.

Moreover, the collaboration with external experts, implementing filters, and adding AI-generated metadata to flagged videos. However, the gamble remains that Sora could contribute to the proliferation of harmful content, emphasizing the need for responsible use and continuous checking of its deployment in various contexts.

Want to develop an AI model like Sora?

To Conclude

In a nutshell, Sora, a diffusion model generates videos by transforming static noise gradually. It can generate entire videos at once, extend existing videos, and maintain subject continuity even during temporary out-of-view instances. Similar to GPT models, Sora employs a transformer architecture for superior scaling performance.

Videos and images are represented as patches, allowing dissemination transformers to be trained on a wider range of visual data, including varying durations, resolutions, and aspect ratios. Furthermore, building on DALL·E and GPT research, Sora incorporates the recaptioning technique from DALL·E 3, enhancing commitment to user text directions in generated videos.

The model can create videos from text directions, animate still images accurately, and extend existing videos by filling in missing frames. Sora is seen as a foundational step towards achieving Artificial General Intelligence (AGI) by understanding and simulating the real world.

Connect with the best artificial intelligence development company to understand the impact these models could have on businesses.

[web_stories title=”true” excerpt=”false” author=”false” date=”false” archive_link=”true” archive_link_label=”” circle_size=”150″ sharp_corners=”false” image_alignment=”left” number_of_columns=”1″ number_of_stories=”5″ order=”DESC” orderby=”post_date” view=”carousel” /]